Bring On The Big Guns

There have been two constants in the tests so far: the GNU/Atlas library and the fact that we are using TCP-based MPI libraries. A quick check finds that there is another BLAS library, called GotoBLAS, from the Texas Advanced Computing Center. Good things have been reported about these optimized libraries. Checking the documentation first this time reveals that these libraries are not supported on our processors.

The other parameter we have not changed is the use of TCP to communicate between nodes. As mentioned, TCP uses buffers. When a communication takes place, data is copied to the OS buffers, then across the network into the other node's OS buffer, and then copied to the user space application. HPC practitioners have known for years that this extra copying slows things down, so they developed "kernel bypass" software and hardware to copy data directly from user space to user space. Normally this approach requires some fancy and expensive hardware as well.

Since we cannot buy new hardware, that leaves one option: Ethernet kernel bypass. Fortunately, such a project exists and will work on our Intel Gigabit Ethernet PCI cards. The project is called GAMMA (Genoa Active Message MAchine) and is maintained by Giuseppe Ciaccio of the Dipartimento di Informatica e Scienze dell'Informazione in Italy. Using kernel bypass could make a big difference, so it is worth a try.

GAMMA requires a specific kernel (2.6.12) and must be built with some care. The current version of GAMMA takes over the interface card when GAMMA programs are running, but allows standard communication otherwise. In the case of the Kronos cluster, we have a Fast Ethernet administration network to help as well. Of course, Warewulf needed to be configured to use GAMMA. Without too much trouble, Kronos was soon running the GAMMA ping-pong test. The results were as follows:

- Average latency 9.54739 useconds

- Maximum Throughput: 72 MBytes/sec
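For readers unfamiliar with the technique, a ping-pong test bounces a small message between two endpoints and reports half the average round-trip time as the latency. The following is only a rough Python sketch of that idea, not the GAMMA or Netpipe code. It assumes a local `socket.socketpair()` instead of a real network, and the names `ping_pong`, `echo`, and `recv_exact` are made up for illustration.

```python
import socket
import threading
import time


def recv_exact(sock, size):
    """Read exactly `size` bytes; a stream socket may return fewer per call."""
    chunks = []
    remaining = size
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def echo(sock, rounds, size):
    """The 'pong' side: send every received message straight back."""
    for _ in range(rounds):
        sock.sendall(recv_exact(sock, size))


def ping_pong(rounds=1000, size=1):
    """Return the average one-way latency in microseconds."""
    # Assumption: a local socket pair stands in for two cluster nodes.
    ping, pong = socket.socketpair()
    worker = threading.Thread(target=echo, args=(pong, rounds, size))
    worker.start()
    payload = b"x" * size
    start = time.perf_counter()
    for _ in range(rounds):
        ping.sendall(payload)
        recv_exact(ping, size)
    elapsed = time.perf_counter() - start
    worker.join()
    ping.close()
    pong.close()
    # One round trip is two one-way messages, hence the division by 2.
    return elapsed / rounds / 2 * 1e6


if __name__ == "__main__":
    print(f"average latency: {ping_pong():.2f} useconds")
```

The numbers this prints describe the local machine only and say nothing about Kronos; the point is simply how a latency figure like the one above is obtained.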

Previous tests using Netpipe TCP showed a latency of 29 useconds and a throughput of 66 MBytes/sec. Recall that Kronos is using 32-bit/33 MHz PCI cards, so the top-end bandwidth is going to be limited by the PCI bus. In any case, such numbers are quite astounding for this level of hardware.
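To put these figures side by side, here is a small back-of-the-envelope calculation in Python. The measured values come from the text above. The PCI ceiling is an assumption based on the nominal bus parameters (bus width times clock rate); a real PCI bus is shared and has protocol overhead, so the usable rate is lower. The function name is made up for illustration.

```python
def pci_peak_mbytes_per_sec(bus_width_bits, clock_mhz):
    """Nominal peak PCI transfer rate: bytes per cycle times cycles per usecond."""
    return bus_width_bits / 8 * clock_mhz


# Measured values quoted in the text.
gamma_latency_us = 9.54739
tcp_latency_us = 29.0
gamma_mbytes = 72.0
tcp_mbytes = 66.0

# How many times lower the GAMMA latency is than the TCP latency.
latency_ratio = tcp_latency_us / gamma_latency_us

# Throughput improvement of GAMMA over TCP, in percent.
throughput_gain_pct = (gamma_mbytes / tcp_mbytes - 1) * 100

# Nominal ceiling for a 32-bit/33 MHz PCI slot (an upper bound, not a measurement).
pci_peak = pci_peak_mbytes_per_sec(32, 33)

if __name__ == "__main__":
    print(f"latency ratio (TCP/GAMMA): {latency_ratio:.2f}")
    print(f"throughput gain: {throughput_gain_pct:.1f}%")
    print(f"nominal PCI peak: {pci_peak:.0f} MBytes/sec")
    print(f"GAMMA share of PCI peak: {gamma_mbytes / pci_peak * 100:.0f}%")
```

The takeaway is that kernel bypass helps latency far more than throughput on this hardware, which is what one would expect if the bus, rather than the protocol stack, caps the bandwidth.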

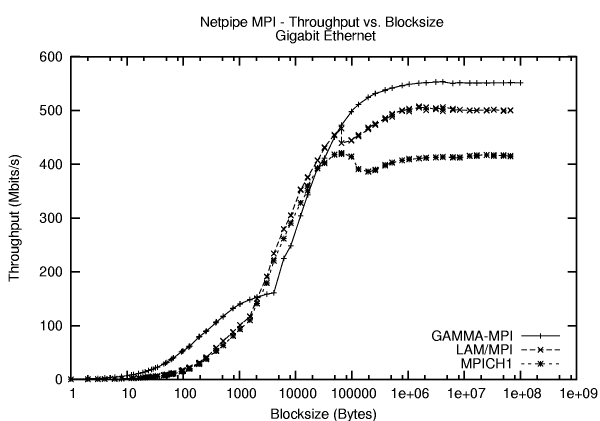

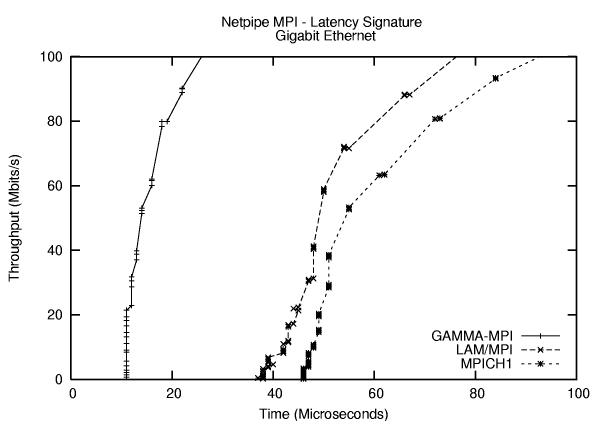

There is MPI support for GAMMA as well. The authors have modified MPICH version 1.1.2 to use the GAMMA API. Before seeing the effect on HPL, it may be useful to see the difference between GAMMA-MPI, LAM/MPI, and MPICH1. Fortunately, the Netpipe benchmark has an MPI version. We can now level the playing field and see what type of improvements GAMMA can provide. The results are shown in Figure One, which plots throughput versus block size. At the beginning and the end of the graph, GAMMA-MPI is the clear winner; in the middle portion, however, the other MPIs have an advantage over GAMMA-MPI. Figure Two and Table One show the difference in small packet latency for the various MPIs. In this case GAMMA-MPI is the clear winner. Another thing to notice is that the TCP latency was previously found to be 29 useconds, and adding an MPI layer increases this to over 40 useconds. As is often the case, adding an abstraction layer adds overhead. In this case, the portability of MPI is often a welcome trade-off for the increase in latency.

Table One: NETPIPE Latency Results

| MPI Version | Latency (useconds) |
|---|---|
| MPICH | 48 |
| LAM | 41 |
| GAMMA | 11 |

In all fairness, each MPI can be tuned somewhat to help with various regions of the curve. In addition, there are other implementation details of each MPI library that come into play when a real code is used (i.e., the Netpipe results above are not the sole predictor of MPI performance).
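One common first-order way to reason about throughput versus block size is the latency/bandwidth model, where the time to move a message is the startup latency plus the size divided by the peak bandwidth. The Python sketch below applies that model to the raw (non-MPI) GAMMA and TCP numbers quoted earlier. It is an assumption-laden simplification, not how Netpipe works internally, and it cannot reproduce the middle region of the curve where the other MPIs beat GAMMA-MPI. The function names are made up for illustration.

```python
def transfer_time_us(size_bytes, latency_us, bandwidth_mbytes):
    """Model: time = latency + size / bandwidth.

    Assumes 1 MByte/sec equals 1 byte/usecond (10**6 bytes per MByte).
    """
    return latency_us + size_bytes / bandwidth_mbytes


def effective_throughput(size_bytes, latency_us, bandwidth_mbytes):
    """Achieved MBytes/sec for one message of the given size."""
    return size_bytes / transfer_time_us(size_bytes, latency_us, bandwidth_mbytes)


def half_performance_size(latency_us, bandwidth_mbytes):
    """Message size (bytes) at which half the peak bandwidth is reached."""
    return latency_us * bandwidth_mbytes


if __name__ == "__main__":
    # Raw ping-pong figures from the text (latency in useconds, MBytes/sec).
    links = {"TCP": (29.0, 66.0), "GAMMA": (9.54739, 72.0)}
    for name, (latency, bandwidth) in links.items():
        n_half = half_performance_size(latency, bandwidth)
        print(f"{name}: half of peak bandwidth at about {n_half:.0f} bytes")
        for size in (64, 1024, 65536):
            rate = effective_throughput(size, latency, bandwidth)
            print(f"  {size:6d} bytes -> {rate:5.1f} MBytes/sec")
```

In this model, lower latency matters most for small messages and peak bandwidth matters most for large ones, which matches the two ends of the graph. The crossover in the middle comes from implementation details the model leaves out.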

Armed with the GAMMA results, a new version of HPL was built and executed on the cluster. There was a problem with memory space, however. When GAMMA is running on the cluster, the amount of free memory decreases by 20%. Some adjustments got this number down to 10%, but the HPL problem size still needed to be reduced. GAMMA needs this memory because, in order to work as fast as possible, it reserves memory for each connection it creates. So the cost for speed is memory. In the case of HPL, a smaller problem size means fewer GFLOPS are possible. Nonetheless, it was possible to run a problem size of 11650 successfully. This run resulted in 14.33 GFLOPS and was nowhere near a new record. To see how much GAMMA-MPI actually helped, both LAM/MPI and MPICH1 were run at this problem size. MPICH1 returned 13.66 GFLOPS and LAM/MPI returned 14.21 GFLOPS. It seems we may have hit a wall. Even if we could get GAMMA-MPI running at the previous problem size, the improvement is not expected to be that great.
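The link between free memory and problem size can be checked with a little arithmetic. HPL factors a dense N x N matrix of double precision (8-byte) values, so the matrix alone needs about 8N² bytes spread across the nodes. The Python sketch below compares the reduced size of 11650 with the 12,300 used in the earlier runs (per the benchmark results table) and also works out the relative GFLOPS differences. It ignores HPL workspace and OS overhead, and the function names are made up for illustration.

```python
def hpl_matrix_bytes(n):
    """Bytes needed for an n x n matrix of 8-byte doubles (workspace ignored)."""
    return 8 * n * n


def percent_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100


full = hpl_matrix_bytes(12300)     # earlier problem size
reduced = hpl_matrix_bytes(11650)  # problem size that fit alongside GAMMA
reduction_pct = (1 - reduced / full) * 100

if __name__ == "__main__":
    print(f"N=12300: {full / 1e9:.2f} GB, N=11650: {reduced / 1e9:.2f} GB")
    print(f"memory reduction: {reduction_pct:.1f}%")
    print(f"GAMMA-MPI vs LAM/MPI: {percent_gain(14.33, 14.21):.2f}%")
    print(f"GAMMA-MPI vs MPICH1:  {percent_gain(14.33, 13.66):.2f}%")
```

The roughly 10% smaller matrix lines up with the 10% of memory GAMMA still reserved after the adjustments, and the gain over LAM/MPI at equal problem size comes out under 1%.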

The Wall

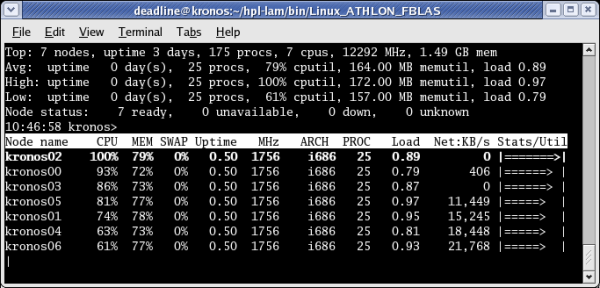

A summary of our tests is given in Table Two. After all the efforts, the best Kronos could do was 14.90 GFLOPS. I believe with some tuning, tweaking, and twitching I could break 15 GFLOPS. Will I try to break the current record? Probably not. The time I would need to invest to get another 0.1 GFLOPS would probably be 2-3 days. My judgment is we have hit "good enough" for this application on this cluster. Another indication that we are hitting the maximum for the system is shown in Figure Three. In this figure, the output of wwtop is shown. You can clearly see that those processors that are not communicating are calculating at close to 100%, and those that are communicating are high as well. Note: wwtop is a top-like application for clusters that shows the processor, memory, and network load on the cluster. The head node, which was used in the calculations, is not shown, but is assumed to have similar data.

Table Two: Benchmark Results

(PG = Portland Group Compilers, + buffers = increase TCP Buffer range, see text for further details)

| Test | MPI | Compiler | Lib | TCP | Size | GFLOPS |
|---|---|---|---|---|---|---|
| 1 | LAM/MPI | GNU | Atlas | default | 12,300 | 14.53 |
| 2 | LAM/MPI | GNU | Atlas | default+buffers | 12,300 | 14.57 |
| 3 | MPICH1 | GNU | Atlas | default+buffers | 12,300 | 13.90 |
| 4 | MPICH1 | PG | Atlas | default+buffers | 12,300 | 13.92 |
| 5 | LAM/MPI | PG | Atlas | default+buffers | 12,300 | 14.60 |
| 6 | MPICH1 | GNU | Atlas | default+buffers | 11,650 | 13.66 |
| 7 | LAM/MPI | GNU | Atlas | default+buffers | 11,650 | 14.21 |
| 8 | MPICH-GAMMA | GNU | Atlas | NA | 11,650 | 14.33 |

Was It Worth It?

Although the exercise really did not set a new record worth shouting about, it did teach a few things about the cluster and application. First, our previous efforts, which required far less time, produced great results. Second, swapping MPIs and compilers had very little effect, which means that any bottlenecks probably do not reside in these areas. And finally, there are always trade-offs on the road to "good enough."

If I were to guess where more performance might be had, I would say the Atlas library. A hand-tuned assembler library might work faster, but clearly is not worth the effort. As the benchmark code was fixed and the library code was optimized, there may not have been much value in profiling the code using something like PAPI (Performance Application Programming Interface), but such assumptions are often worth testing. If I ran an HPL-type application day in and day out, I might be inclined to pursue these efforts further, but this is not the case. There are far more interesting applications to get working on Kronos than the HPL benchmark.

A final bit of advice: keep an eye on the big picture as well. The amount of time spent optimizing a $2,500 cluster might lead one to ask, "Why not just buy faster hardware?" That is an utterly excellent point. Admittedly, seeing how far one can push $2,500 worth of computing hardware is an interesting project. If one factors in the cost, "good enough" is often just enough to be perfect.

Sidebar Resources

- Warewulf - Cluster Toolkit
- AMD - Thanks for the hardware!

This article was originally published in Linux Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Thanks to Jeff Layton for converting this to HTML.

Douglas Eadline is editor of ClusterMonkey.