Can Doug break the record he and Jeff set previously? Can he overcome the chains of reality? Inquiring minds want to know.

Cluster optimization is often considered an art form. In some cases it is less art and more like flipping a coin. A previous article described the Kronos value cluster and its record-breaking price-to-performance results. Can an investment of time and effort break this record, or have we hit the cluster wall?

Introduction

If you followed along with our previous value cluster articles, you will remember that Jeff Layton and I built and optimized a cluster for under $2500. Our results for the cluster set a record of sorts for achieving 14.53 GFLOPS (52% of peak) at a cost of $171/GFLOP (double precision). We used the famous Top500 benchmark called HPL (High Performance Linpack). Not one to rest on my laurels, I decided to push on and see if I could improve on our HPL performance.

The next step in improving performance is to figure out what to optimize. There are plenty of things to change, so where to start is a good question. When fixing cars, my father always had a rule: start with the easiest/cheapest part, then move to the next easiest/cheapest part until you have (hopefully) fixed the problem. It is easier and cheaper to replace a gas filter than it is to replace a carburetor. Our efforts thus far have taken a similar approach: we tweaked the program parameters, chose the best BLAS (Basic Linear Algebra Subprograms) library, and tuned the Ethernet driver. In this article, I would like to find the "good enough wall" for the cluster, which is basically the point of diminishing returns.

Also remember that the source code cannot be changed, so we are limited to program parameters, the cluster plumbing (interconnects, drivers), middleware (MPI), and compiler tools. Those who have experience in this area will obviously raise their hands and say, "What about other compilers and MPI versions?" My response is, "Well of course, it seems easy enough -- which is a sure indication that it probably will not be."

If we are to try other compilers and MPI versions, we need to keep in mind that there is quite a list of alternatives (see Sidebar Two). In order to get done testing within this decade, we will limit our choices to some of the more popular packages. The choices should not be taken as a slight toward other alternatives. There are plenty of both open and commercial tools available and your choice should be based on your needs.

| Sidebar One - The Current Kronos Record |

|

As reported in the November 2005 issue, the current record for our value cluster (called Kronos) is 14.53 GFLOPS. This result was achieved using a cluster composed of eight AMD Sempron 2500 processors with 256 MBytes of PC2700 RAM (512 MBytes on the head node), and a Gigabit Ethernet link. We tuned the program parameters, tried different BLAS libraries, and tuned the Gigabit Ethernet links (6000 byte MTU, interrupt mitigation strategies turned off). We are also using the Warewulf cluster toolkit (see Resources). For this article we will use the same hardware and only change software. |

Another important note is worth mentioning. As I try to push the GFLOPS higher, some things may work for this application and some things may not. It would be a mistake to assume the same will hold for all applications, particularly yours. Please keep this proviso in mind when interpreting the results.

We are going to look at tuning the TCP values, changing the MPI library, changing the compiler, and finally using a kernel by-pass MPI library. As we cannot try every possible combination (see Sidebar Two), at least we will go through the exercise of changing these parameters and at minimum learn a few things about how to turn your hair gray (in my case more gray).

A Nudge, Not a Bump

The easiest thing to try is to tune the TCP parameters. I am borrowing from a Berkeley Lab TCP Tuning Guide I found on-line (see Resources Sidebar). Listing One shows the settings that were added to the /etc/sysctl.conf file. You can put the changes into effect by simply running sysctl -p. For those interested, all TCP implementations use buffers for the transfers between nodes. In newer Linux kernels, the kernel will auto-tune the buffer size based on the communication pattern it encounters. Our changes increase the maximum tunable size of the buffers.

Re-running our best case from before with the new settings shows the smallest of increases, to 14.57 GFLOPS (multiple runs confirmed that this increase is statistically significant). The change was easy: not much improvement, but no huge amount of time lost either.

Listing One - New TCP Parameters

    # increase TCP max buffer size
    net.core.rmem_max = 16777216
    net.core.wmem_max = 16777216
    # increase Linux autotuning TCP buffer limits
    # min, default, and max number of bytes to use
    net.ipv4.tcp_rmem = 4096 87380 16777216
    net.ipv4.tcp_wmem = 4096 65536 16777216
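After running sysctl -p, it can be reassuring to confirm that the kernel actually picked up the new limits. The following is a minimal Python sketch, not something used in the original tests. It parses the Listing One settings and compares them with the live values under /proc/sys, relying on the usual Linux convention that a sysctl key maps to a file path with the dots replaced by slashes. The helper names (`parse_sysctl`, `proc_path`, `report`) are made up for illustration.

```python
from pathlib import Path

# The settings from Listing One, as they would appear in /etc/sysctl.conf.
LISTING_ONE = """\
# increase TCP max buffer size
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
# increase Linux autotuning TCP buffer limits
# min, default, and max number of bytes to use
net.ipv4.tcp_rmem = 4096 87380 16777216
net.ipv4.tcp_wmem = 4096 65536 16777216
"""


def parse_sysctl(text):
    """Parse sysctl.conf-style text into {key: [int, ...]}, skipping comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = [int(v) for v in value.split()]
    return settings


def proc_path(key):
    """Map a sysctl key to its /proc/sys file (dots become slashes)."""
    return Path("/proc/sys") / key.replace(".", "/")


def report(wanted):
    """Print wanted vs. live values; tolerate keys this machine does not expose."""
    for key, values in wanted.items():
        try:
            live = [int(v) for v in proc_path(key).read_text().split()]
        except (OSError, ValueError):
            print(f"{key}: not readable on this system")
            continue
        status = "ok" if live == values else "differs"
        print(f"{key}: wanted={values} live={live} ({status})")


if __name__ == "__main__":
    report(parse_sysctl(LISTING_ONE))
```

Run on each node, a "differs" line would suggest the sysctl.conf change did not make it into that node's image.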

One thing to remember is that there is no best compiler/MPI combination for every program! Of course you are hoping for the best, but you may never know if you are getting the best performance -- until some uber geek on a mailing list lets you in on the secret combination that got his application 10% more performance than yours.

Finally, there are those pesky optimization flags that vary from compiler to compiler and processor to processor. Indeed, the trick is to know when "good enough" is really good enough. If you know your application, in most cases, you will have a "feel" for this point in the optimization process.

Pick an MPI

Up until this point we have been using LAM/MPI. This MPI is the default MPI used in the Warewulf distribution. The version we used was 7.0.6. The most logical MPI to use next is MPICH from Argonne National Lab. The latest version of MPICH is MPICH2. With much anticipation, I compiled MPICH2 and set about running the HPL code.

| Sidebar Two - Too Much of a Good Thing |

|

If you are looking for the best performance then you know that compilers and libraries are a great way to "easily" make changes. Unfortunately, "the devil, they say, is in the linker". Furthermore, the array of choices is rather daunting. Let's first consider compilers. A short list of Linux x86 compilers includes GNU, Portland Group, Intel, Pathscale, Lahey, Absoft, and NAG. Similarly, a short list of MPI implementations would include LAM/MPI, MPICH1, MPICH2, Intel-MPI, MPI/Pro, WMPI, Open-MPI, and Scali MPI Connect, not to mention all the variations for different interconnects within each MPI. So, let's do the math for the short list. Seven compilers times eight MPIs, that is 56 possible combinations. Considering that building and running your application with each combination may range from easy to aggravating and may take a non-trivial amount of time, it is no wonder that perfect is often considered the worst enemy of good enough. |
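To get a feel for how quickly the test matrix grows, here is a small Python sketch that enumerates every compiler/MPI pairing from the names listed in the sidebar. The hours-per-combination figure is purely hypothetical (it is not a measurement from this article) and is only there to show how the total effort scales.

```python
from itertools import product

# Names taken from the sidebar's "short lists".
compilers = ["GNU", "Portland Group", "Intel", "Pathscale",
             "Lahey", "Absoft", "NAG"]
mpis = ["LAM/MPI", "MPICH1", "MPICH2", "Intel-MPI",
        "MPI/Pro", "WMPI", "Open-MPI", "Scali MPI Connect"]

# Every (compiler, MPI) pair is a separate build-and-run exercise.
combos = list(product(compilers, mpis))
print(f"{len(compilers)} compilers x {len(mpis)} MPIs = {len(combos)} combinations")

# Hypothetical effort per combination, for illustration only.
HOURS_PER_COMBO = 4
total_hours = len(combos) * HOURS_PER_COMBO
print(f"at {HOURS_PER_COMBO} hours each: {total_hours} hours of building and testing")
```

Even with an optimistic per-build estimate, the total lands well outside what most people would call a quick experiment, and that is before interconnect variants are counted.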

The first problem I encountered was the need for some shared libraries to be on the nodes. Recall that a Warewulf cluster uses a minimal RAM disk on each node. I added the libraries to the cluster VNFS (Virtual Node File System), built a new node RAM disk image, and rebooted the nodes in all of 5 minutes. Continuing, I also recalled that MPICH2, similar to LAM, now uses daemons (instead of rsh or ssh) to start remote jobs. To my surprise, I found that the daemons needed a version of Python on each node to run. While Python and other such languages are great tools, I prefer the "less is better" approach with clusters, which is pretty much the Warewulf approach as well. Requiring Python on each node seems to me to be a move in the wrong direction. In any case, since getting the daemons working under Warewulf would take some time and testing, I decided to take a step back and use the old reliable MPICH1.

After a quick configure; make install, I had MPICH1 running on the cluster. The test programs worked, so it was time to compile HPL and see if we could increase our score. Of course, some fiddling with environment variables and the HPL Makefile was needed to ensure the right libraries were used. Running the benchmark resulted in 13.9 GFLOPS. This result was good, but of course not our best. For the MPI jihadists out there, this result does not necessarily mean LAM is always better than MPICH. For this code it might be, but I have seen other codes where MPICH beats LAM as well.

After looking at the MPICH1 results, it seemed that trying OpenMPI might be worthwhile. OpenMPI is a new, highly modular MPI that is being written by the LAM/MPI, FT-MPI, LA-MPI, and PACX-MPI teams. The final release is imminent, so it seemed like it might be helpful to have another data point. After downloading and building, the test programs worked, so running HPL was next. The program started, but basically stalled out. After talking with one of the authors, I learned that these are known issues. Sometimes I think my goal in life is to validate known issues. Time to move on. The compiler is next.

Pick A Compiler and an MPI

The compiler is one of those really cool tools that at times can give your application a really nice kick in the asymptote. Again, it all depends on your application, but some of the commercial compilers have a few more hardware specific options than the GNU compilers, so they are worth a try. Presently, most of the commercial compilers are focused on x86-64 level processors and have no great interest in optimizing for Sempron processors. For this project, The Portland Group (PG) compiler was chosen because it has been reliable and robust in the past. The 15 day free trial helped as well. In any case, it is just a recompile, right?

There are three basic components in the HPL program: the program itself, the BLAS library, and the MPI library. The cleanest way to build an application with a new compiler is to build all the supporting libraries as well. Otherwise, you may end up scratching your head as a multitude of linking problems pass in front of your eyes. Building MPIs with alternate compilers has been well documented, so the task now looked to be as follows:

1. Build a new version of MPI with PG

2. Build a new version of Atlas with PG

3. Build a new version of HPL with PG, linking the components in 1 and 2

Running make for Atlas: no joy. There is an error message from the Atlas make procedure about an undefined case. Fair enough. Time to check the HPL documentation. It seems they don't recommend the PG compiler to build Atlas. That would be two known issues I have successfully validated thus far.

Moving on, the PG compiler is very good at linking in GNU compiled code, so I'll just use the GNU Atlas libraries and build HPL with PG. After some makefile magic I have a new HPL binary compiled with some cool optimization flags (-fastsse -pc=64 -O2 -tp athlonxp). The code is run and the GFLOPS hit 13.92. Some further fiddling with compiler options does not really change things. At this point, the MPICH1-PG version is slightly better than the MPICH1-GNU version, but worse than the LAM/MPI-GNU version. Undaunted, the next thing to try is a LAM/MPI-PG combination. After some more makefile magic, the code is running and, lo and behold, a new record of 14.90 GFLOPS, but no fanfare. The amount of time spent with the MPI/compiler rebuilds was easily two days. The amount of improvement is 0.33 GFLOPS. A new tack is needed.

Bring On The Big Guns

There have been two constants in the tests so far: the GNU/Atlas library and the fact that we are using TCP based MPI libraries. A quick check finds that there is another BLAS library from the Texas Advanced Computing Center called GotoBLAS. Good things have been reported about these optimized libraries. Checking the documentation first this time reveals that these libraries are not supported on our processors.

The other parameter we have not changed is the use of TCP to communicate between nodes. As mentioned, TCP uses buffers. When a communication takes place, data is copied to the OS buffers, then across the network into the other node's OS buffer, then copied to the user space application. HPC practitioners have known for years that this extra copying slows things down, so they developed "kernel bypass" software and hardware to copy data directly from user space to user space. Normally this approach requires some fancy and expensive hardware as well.

Since we cannot buy new hardware, that leaves one option - Ethernet kernel by-pass. Fortunately, such a project exists and will work on our Intel Gigabit Ethernet PCI cards. The project is called GAMMA (Genoa Active Message MAchine) and is maintained by Giuseppe Ciaccio of Dipartimento di Informatica e Scienze dell'Informazione in Italy. Using kernel by-pass could make a big difference, so it is worth a try.

GAMMA requires a specific kernel (2.6.12) and must be built with some care. The current version of GAMMA takes over the interface card when GAMMA programs are running, but allows standard communication otherwise. In the case of the Kronos cluster, we have a Fast Ethernet administration network to help as well. Of course, Warewulf needed to be configured to use GAMMA. Without too much trouble, Kronos was soon running the GAMMA ping-pong test. The results were as follows:

- Average latency: 9.54739 useconds

- Maximum Throughput: 72 MBytes/sec

Previous tests using Netpipe TCP showed a latency of 29 useconds and a throughput of 66 MBytes/sec. Recall that Kronos is using 32-bit/33 MHz PCI cards, so the top end bandwidth is going to be limited by the PCI bus. In any case, such numbers were quite astounding for this level of hardware.
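To put these numbers in context, here is a back-of-the-envelope Python sketch comparing the measured throughput with the theoretical peaks of the Gigabit Ethernet wire and a 32-bit/33 MHz PCI bus. The 33.33 MHz nominal clock is my assumption for standard PCI, and both peaks are burst figures. A shared PCI bus with protocol overhead normally sustains noticeably less, so treat the percentages as rough guides rather than hard limits.

```python
# Theoretical peaks (assumptions: nominal 33.33 MHz PCI clock, 1 Gbit/s wire rate).
PCI_WIDTH_BITS = 32
PCI_CLOCK_HZ = 33.33e6
GIGE_BITS_PER_SEC = 1e9

pci_peak_mb = PCI_WIDTH_BITS / 8 * PCI_CLOCK_HZ / 1e6   # MBytes/sec
gige_peak_mb = GIGE_BITS_PER_SEC / 8 / 1e6              # MBytes/sec

# Measured values quoted in the text.
measured_mb = {"GAMMA ping-pong": 72, "Netpipe TCP": 66}
tcp_latency_us = 29
gamma_latency_us = 9.54739

print(f"PCI burst peak : {pci_peak_mb:.0f} MBytes/sec")
print(f"GigE wire peak : {gige_peak_mb:.0f} MBytes/sec")
for name, mb in measured_mb.items():
    print(f"{name}: {mb} MBytes/sec, {mb / gige_peak_mb:.0%} of the GigE wire peak")

latency_ratio = tcp_latency_us / gamma_latency_us
print(f"GAMMA latency is about {latency_ratio:.1f}x lower than TCP")
```

The throughput gain from kernel by-pass is modest, while the latency improvement is roughly a factor of three, which is where GAMMA would be expected to help most.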

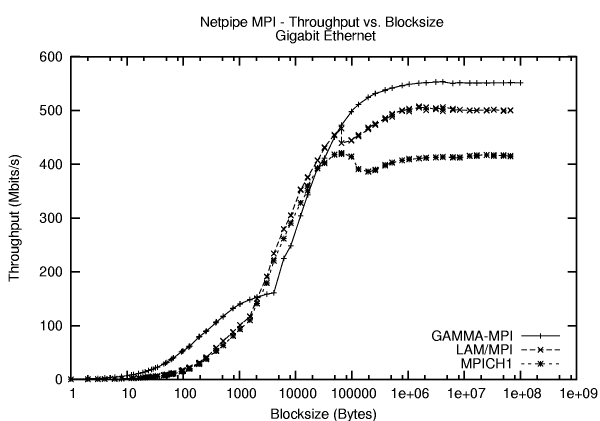

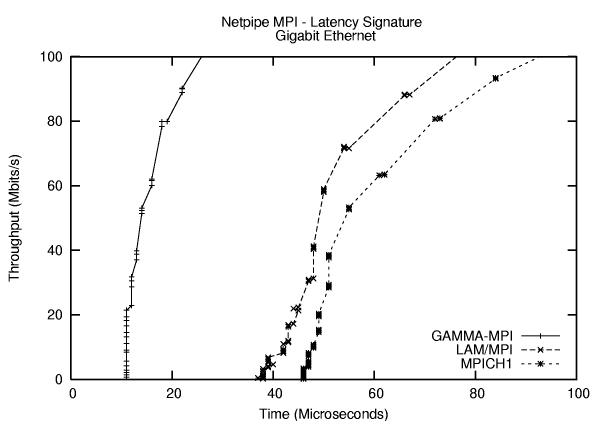

There is MPI support for GAMMA as well. The authors have modified MPICH version 1.1.2 to use the GAMMA API. Before seeing the effect on HPL, it may be useful to see the difference between GAMMA-MPI, LAM/MPI, and MPICH1. Fortunately, the Netpipe benchmark has an MPI version. We can now level the playing field and see what type of improvements GAMMA can provide. Figure One shows throughput versus block size. At the beginning and the end of the graph, GAMMA-MPI is the clear winner. In the middle portion, however, the other MPIs have an advantage over GAMMA-MPI. Figure Two and Table One show the difference in small packet latency for the various MPIs. In this case GAMMA-MPI is the clear winner. Another thing to notice is that the TCP latency was previously found to be 29 useconds and adding an MPI layer increases this to over 40 useconds. As is often the case, adding an abstraction layer adds overhead. In this case, the portability of MPI is often a welcome trade-off for the increase in latency.

Table One: NETPIPE Latency Results

| MPI Version | Latency (useconds) |
|---|---|
| LAM | 41 |
| MPICH | 48 |
| GAMMA | 11 |

In all fairness, each MPI can be tuned somewhat to help with various regions of the curve. In addition, there are other implementation details of each MPI library that come into play when a real code is used (i.e., the Netpipe results in the figures and Table One are not the sole predictor of MPI performance).

Armed with the GAMMA results, a new version of HPL was built and executed on the cluster. There was a problem with memory space, however. When GAMMA is running on the cluster, the amount of free memory decreases by 20%. Some adjustments got this number down to 10%, but the HPL problem size still needed to be reduced. In order to work as fast as possible, GAMMA needs to reserve memory for each connection it creates, so the cost of speed is memory. In the case of HPL, the problem size is smaller and thus fewer GFLOPS are possible. Nonetheless, it was possible to run a problem size of 11650 successfully. This run resulted in 14.33 GFLOPS and was nowhere near a new record. To see the real effect of MPI-GAMMA on performance, both LAM/MPI and MPICH1 were run using this problem size. At this problem size MPICH1 returned 13.66 GFLOPS and LAM/MPI returned 14.21 GFLOPS. It seems we may have hit a wall. Even if we could get GAMMA-MPI running at the previous problem size, the improvement is not expected to be that great.
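The link between free memory and problem size can be sketched with a little arithmetic. HPL factors a dense N x N matrix of double-precision values, so the matrix alone needs about 8 x N x N bytes spread across the nodes. The Python sketch below assumes the head node is one of the eight processors (seven 256 MByte nodes plus the 512 MByte head node) and ignores HPL workspace, the OS, and MPI buffers, so the real footprint is somewhat larger.

```python
BYTES_PER_DOUBLE = 8


def hpl_matrix_mbytes(n):
    """Memory for the N x N double-precision matrix, in MBytes (2**20 bytes)."""
    return n * n * BYTES_PER_DOUBLE / 2**20


# Cluster RAM from Sidebar One (assumption: the head node counts as one of the eight).
total_ram_mb = 7 * 256 + 512

for n in (12300, 11650):
    mb = hpl_matrix_mbytes(n)
    print(f"N={n}: about {mb:.0f} MBytes, {mb / total_ram_mb:.0%} of {total_ram_mb} MBytes")

# Fraction of matrix memory given up by dropping from N=12300 to N=11650.
shrink = 1 - (11650 / 12300) ** 2
print(f"matrix memory saved by the smaller problem size: {shrink:.1%}")
```

Because memory grows with the square of N, a small cut in the problem size frees a proportionally larger amount of RAM. The reduction computed here is in the same neighborhood as the memory GAMMA was reported to reserve.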

The Wall

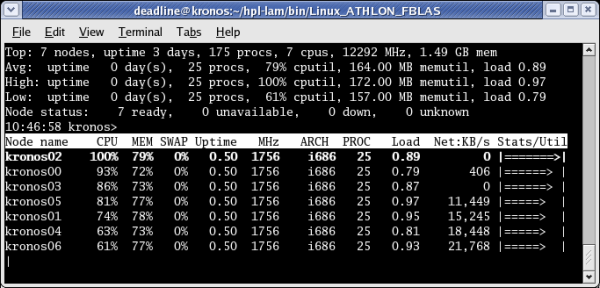

A summary of our tests is given in Table Two. After all the efforts, the best Kronos could do was 14.90 GFLOPS. I believe with some tuning, tweaking, and twitching I could break 15 GFLOPS. Will I try to break the current record? Probably not. The time I would need to invest to get another 0.1 GFLOPS would probably be 2-3 days. My judgment is we have hit "good enough" for this application on this cluster. Another indication that we are hitting the maximum for the system is shown in Figure Three. In this figure, the output of wwtop is shown. You can clearly see that those processors that are not communicating are calculating at close to 100% and those that are communicating are high as well. Note: wwtop is a cluster-wide, top-like application that shows the processor, memory, and network load on the cluster. The head node, which was used in the calculations, is not shown, but is assumed to have similar data.

Table Two: Benchmark Results

(PG = Portland Group Compilers, +buffers = increased TCP buffer range, see text for further details)
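For those who like to see the trade-off in numbers, here is a rough Python sketch that works backward from figures quoted in this article: 14.53 GFLOPS at 52% of peak and $171/GFLOP for the old record, 14.90 GFLOPS for the new one. The implied hardware cost and peak are derived values, not figures reported separately, so rounding in the published numbers carries through.

```python
# Figures quoted in the article.
OLD_GFLOPS = 14.53
OLD_EFFICIENCY = 0.52          # fraction of theoretical peak
OLD_DOLLARS_PER_GFLOP = 171
NEW_GFLOPS = 14.90

# Derived values (approximate, since the inputs are rounded).
implied_cost = OLD_GFLOPS * OLD_DOLLARS_PER_GFLOP
implied_peak = OLD_GFLOPS / OLD_EFFICIENCY

gain = NEW_GFLOPS - OLD_GFLOPS
gain_pct = gain / OLD_GFLOPS
new_efficiency = NEW_GFLOPS / implied_peak
new_dollars_per_gflop = implied_cost / NEW_GFLOPS

print(f"implied hardware cost : ${implied_cost:.0f}")
print(f"implied peak          : {implied_peak:.1f} GFLOPS")
print(f"gain over old record  : {gain:.2f} GFLOPS ({gain_pct:.1%})")
print(f"new efficiency        : {new_efficiency:.1%} of peak")
print(f"new price/performance : ${new_dollars_per_gflop:.0f}/GFLOP")
```

Several days of rebuilding bought a gain of a few percent, which is the "good enough wall" in a nutshell.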

Was It Worth It?

Although the exercise really did not set a new record worth shouting about, it did teach a few things about the cluster and application. First, our previous efforts, which required far less time, produced great results. Second, swapping MPIs and compilers had very little effect, which means that any bottlenecks probably do not reside in these areas. And finally, there are always trade-offs on the road to "good enough."

If I were to guess where more performance might be had, I would say the Atlas library. A hand tuned assembler library might work faster, but clearly is not worth the effort. As the benchmark code was fixed and the library code was optimized, there may not have been much value in profiling the code using something like PAPI (Performance Application Programming Interface), but such assumptions are often worth testing. If I ran an HPL type application day in and day out, I might be inclined to pursue these efforts further, but this is not the case. There are far more interesting applications to get working on Kronos than the HPL benchmark.

A final bit of advice. Keep an eye on the big picture as well. The amount of time spent optimizing a $2,500 cluster might lead one to ask, "Why not just buy faster hardware?" Which is an utterly excellent point. Admittedly, seeing how far one can push $2,500 worth of computing hardware is an interesting project. If one factors in the cost, "good enough" is often just enough to be perfect.

| Test | MPI | Compiler | Lib | TCP | Size | GFLOPS |
|---|---|---|---|---|---|---|
| 1 | LAM/MPI | GNU | Atlas | default | 12,300 | 14.53 |
| 2 | LAM/MPI | GNU | Atlas | default+buffers | 12,300 | 14.57 |
| 3 | MPICH1 | GNU | Atlas | default+buffers | 12,300 | 13.90 |
| 4 | MPICH1 | PG | Atlas | default+buffers | 12,300 | 13.92 |
| 5 | LAM/MPI | PG | Atlas | default+buffers | 12,300 | 14.90 |
| 6 | MPICH1 | GNU | Atlas | default+buffers | 11,650 | 13.66 |
| 7 | LAM/MPI | GNU | Atlas | default+buffers | 11,650 | 14.21 |
| 8 | MPICH-GAMMA | GNU | Atlas | NA | 11,650 | 14.33 |

| Sidebar Resources |

|

Warewulf - Cluster Toolkit; AMD - Thanks for the hardware! |

This article was originally published in Linux Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Thanks to Jeff Layton for converting this to HTML.

Douglas Eadline is editor of ClusterMonkey.