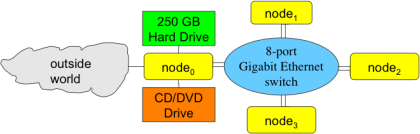

We wanted Microwulf to be able to access the outside world (e.g., to download software updates and the like), so we decided to configure the bottom motherboard and CPU as a head node for the cluster. To do so, we attached the 250GB hard drive to that board, and configured it to boot from that drive. We did the same for the CD/DVD drive shown in Figure One, to simplify software installation, data backup, and so on.

Since our campus network uses Fast Ethernet, we added a 10/100 NIC to the head node's PCI slot, to allow the head node to communicate with the outside world.

For simplicity, we made the top three nodes diskless, and used NFS to export the space on the 250 GB drive to them. Figure Four below shows the logical layout of Microwulf:

Note that each node has two separate lines running from itself to the network switch.

With four CPUs blowing hot air into such a small volume, we thought we should keep the air moving through Microwulf. To accomplish this, we decided to purchase four Zalman 120mm case fans ($8 each) and grills ($1.50 each). Using scavenged twist-ties, we mounted two fans -- one for intake and one for exhaust -- on opposing sides of each pair of facing motherboards. This keeps air moving across the boards and NICs; Figure Five shows the two exhaust fans:

So far, this arrangement has worked very well: under load, the on-board temperature sensors report temperatures about 4 degrees above room temperature.

Last, we grounded each component (motherboards, hard drive, etc.) by wiring them to one of the power supplies.

As you can tell from some of our decisions, we had to make several tradeoffs to stay within our budget. Here's our final hardware listing with the prices we paid in January 2007.

| Component | Product | Unit Price | Quantity | Total |
|---|---|---|---|---|
| Motherboard | MSI K9N6PGM-F MicroATX | $80.00 | 4 | $320.00 |
| CPU | AMD Athlon 64 X2 3800+ AM2 CPU | $165.00 | 4 | $660.00 |
| Main Memory | Kingston DDR2-667 1GByte RAM | $124.00 | 8 | $992.00 |
| Power Supply | Echo Star 325W MicroATX Power Supply | $19.00 | 4 | $76.00 |
| Network Adaptor | Intel PRO/1000 PT PCI-Express NIC (node-to-switch) | $41.00 | 4 | $164.00 |
| Network Adaptor | Intel PRO/100 S PCI NIC (master-to-world) | $15.00 | 1 | $15.00 |
| Switch | Trendware TEG-S80TXE 8-port Gigabit Ethernet Switch | $75.00 | 1 | $75.00 |
| Hard drive | Seagate 7200 250GB SATA hard drive | $92.00 | 1 | $92.00 |
| DVD/CD drive | Liteon SHD-16S1S 16X | $19.00 | 1 | $19.00 |
| Cooling | Zalman ZM-F3 120mm Case Fans | $8.00 | 4 | $32.00 |
| Fan protective grills | Generic NET12 Fan Grill (120mm) | $1.50 + shipping | 4 | $10.00 |
| Support Hardware | 36" x 0.25" threaded rods | $1.68 | 3 | $5.00 |
| Fastener Hardware | Lots of 0.25" nuts and washers | | | $10.00 |
| Case/shell | 12" x 11" polycarbonate pieces (scraps from our Physical Plant) | $0.00 | 4 | $0.00 |
| Total | | | | $2,470.00 |

Non-Essentials

| Component | Product | Unit Price | Quantity | Total |
|---|---|---|---|---|
| KVM Switch | Linkskey LKV-S04ASK | $50.00 | 1 | $50.00 |
| Total | | | | $50.00 |

Aside from the support and fastener hardware (purchased at Lowes), the fan grills and the KVM switch (purchased at newegg.com), all of these components were purchased through:

N F P Enterprises

1456 10 Mile Rd NE

Comstock Park, MI 49321-9666

(616) 887-7385

So we were able to keep the price for the whole system to just under $2,500. That's 8 cores with 8 GB of memory and 8 GigE NICs for under $2,500, or about $308.75 per core.
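The arithmetic behind those figures can be checked with a short Python sketch. The line totals are copied from the hardware table above (the KVM switch is left out, since it is listed as a non-essential); the shortened item names and the function names are ours, chosen only for illustration.

```python
# Line totals in USD, transcribed from the hardware table above.
# The item labels are shortened for readability; the KVM switch is excluded.
PARTS = [
    ("Motherboards", 320.00),
    ("CPUs", 660.00),
    ("Main memory", 992.00),
    ("Power supplies", 76.00),
    ("Gigabit NICs", 164.00),
    ("Fast Ethernet NIC", 15.00),
    ("Switch", 75.00),
    ("Hard drive", 92.00),
    ("DVD/CD drive", 19.00),
    ("Case fans", 32.00),
    ("Fan grills", 10.00),
    ("Threaded rods", 5.00),
    ("Nuts and washers", 10.00),
    ("Polycarbonate", 0.00),
]

CORES = 4 * 2  # four dual-core Athlon 64 X2 CPUs


def total_cost(parts):
    """Sum the line totals of a parts list."""
    return sum(price for _, price in parts)


def cost_per_core(parts, cores):
    """Divide the total system cost evenly across all cores."""
    return total_cost(parts) / cores


if __name__ == "__main__":
    print(f"Total:    ${total_cost(PARTS):,.2f}")
    print(f"Per core: ${cost_per_core(PARTS, CORES):,.2f}")
```

Adding the $50 KVM switch to the list would raise the total to $2,520 and the per-core figure to $315.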

The Devil is in the Details

In the previous section, I mostly covered the hardware we used to build Microwulf. However, we ran into a number of small 'gotchas' along the way that we think are worth sharing, in the hope that other people won't have to spend time rediscovering the solutions.

One of the first things we spent real time thinking through was which Linux distribution to use. It's funny how choosing a Linux distribution can cause such anguish or spark such debate. We don't see people facing the same anguish over Windows (2000?, XP?, Vista?, Oh My!) or any of the BSD flavors, but you definitely will see a great deal of (heated) debate about every OS.

For various reasons, we have been a Gentoo shop for a while. So it would seem fairly obvious that we would use Gentoo. But over time we had found Gentoo to be something of an administrative hassle. Since we wanted to keep Microwulf relatively simple (as simple as possible but not overly simple), and we had experience with Ubuntu, we decided to give that a try first.

We installed Ubuntu Desktop on the head node. We used Ubuntu 6.10 (Edgy), as it was the latest stable release of Ubuntu at the time. It had a 2.6.17 kernel. It was a very easy installation except for one thing: the driver for the on-board NIC was not included in the kernel until 2.6.18. So for the first two months of Microwulf's young life, we used an alpha version of Ubuntu 7.04 (Feisty), which had a 2.6.20 kernel and the drivers that we needed. 7.04 finally went stable in April, but we never had any trouble with the alpha version.

We installed Ubuntu Server on each of the top 3 "compute" nodes, since it did not carry all of the overhead of Ubuntu Desktop.

We also looked at several cluster management packages: ROCKS, Oscar, and Warewulf. Since our nodes, with the exception of the head node, are diskless, that ruled out ROCKS and Oscar, since, as far as we could tell, they didn't support diskless nodes. Warewulf looks like it would have worked well, but we didn't see that it would give us anything extra considering that this cluster is so small. So, we decided to go with an NFSroot approach to Microwulf.

Before we actually did the NFSroot for the "compute" nodes, we considered the idea of using iSCSI instead of NFS, mainly based on a paper we found. But we decided that it was more important to get the cluster up and running, and Ubuntu makes NFSroot really easy. It's as simple as editing the /etc/initramfs.conf file to generate an initial ramdisk that does NFSroot and then setting up DHCP/TFTP/PXELinux on the head node, as you would for any diskless boot situation.
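To give a feel for the head-node side of a diskless boot, here is a minimal Python sketch that renders a PXELINUX configuration entry for an NFS-rooted node. It is an illustration under stated assumptions, not Microwulf's actual configuration: the server address, export path, label, and kernel and initrd file names are all hypothetical placeholders. The `root=/dev/nfs`, `nfsroot=` and `ip=dhcp` strings are standard Linux kernel boot parameters for an NFS root; on the initramfs side, the setting usually involved with Ubuntu's initramfs-tools is `BOOT=nfs`.

```python
def pxelinux_entry(server_ip, export_path,
                   kernel="vmlinuz", initrd="initrd.img",
                   label="nfsroot"):
    """Render a PXELINUX config entry that boots a node with an NFS root.

    All argument values are supplied by the caller; the defaults for
    kernel, initrd and label are hypothetical file names and labels.
    """
    # Kernel command line: mount the root filesystem over NFS and
    # configure the boot interface via DHCP.
    append = (
        f"initrd={initrd} root=/dev/nfs "
        f"nfsroot={server_ip}:{export_path} ip=dhcp rw"
    )
    lines = [
        f"DEFAULT {label}",
        f"LABEL {label}",
        f"  KERNEL {kernel}",
        f"  APPEND {append}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical head-node address and export path.
    print(pxelinux_entry("192.168.2.1", "/srv/nfsroot"), end="")
```

The resulting text would typically be saved under the TFTP server's `pxelinux.cfg/` directory, with the DHCP server pointing the diskless nodes at the PXELINUX boot loader.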

We did configure the network adaptors differently: we gave each onboard NIC an address on a 192.168.2.x subnet, and gave each PCI-e NIC an address on a 192.168.3.x subnet. Then we routed the NFS traffic over the 192.168.2.x subnet, to try to separate "administrative" traffic from computational traffic. It turns out that OpenMPI will use both network interfaces (see below), so this served to spread communication across both NICs.
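The two-subnet scheme can be sketched in Python with the standard `ipaddress` module. The subnets come from the description above; the /24 prefix length, the node names, and the host numbering (starting at .1) are assumptions made for illustration, since the text does not give the actual addresses.

```python
import ipaddress

# Subnets from the text; the /24 prefix length is an assumption.
ONBOARD_NET = ipaddress.ip_network("192.168.2.0/24")  # on-board NICs (NFS traffic)
PCIE_NET = ipaddress.ip_network("192.168.3.0/24")     # PCI-e NICs


def address_plan(node_count=4, first_host=1):
    """Assign each node one address per subnet, using the same host number.

    Node names and host numbering are hypothetical.
    """
    plan = {}
    for i in range(node_count):
        host = first_host + i
        plan[f"node{i}"] = {
            "onboard": str(ONBOARD_NET.network_address + host),
            "pcie": str(PCIE_NET.network_address + host),
        }
    return plan


if __name__ == "__main__":
    for name, addrs in address_plan().items():
        print(f"{name}: onboard={addrs['onboard']} pcie={addrs['pcie']}")
```

Giving a node the same host number on both subnets makes it easy to tell at a glance which two addresses belong to the same machine.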

One of the problems we encountered is that the on-board NICs (Nvidia) present some difficulties. After our record-setting run (see the next section), we started to have trouble with the on-board NIC. After a little googling, we added the following option to the forcedeth module options:

forcedeth max_interrupt_work=35

The problem got better, but didn't go away. Originally we had the onboard Nvidia GigE adaptor mounting the storage. Unfortunately, when the Nvidia adaptor started to act up, it reset itself, killing the NFS mount and hanging the "compute" nodes. We're still working on fully resolving this problem, but it hasn't kept us from benchmarking Microwulf.