[Note: If you are interested in desk-side/small scale supercomputing, check out the Limulus Project and the Limulus Case]

In January 2007, two of us (professor Joel Adams and student Tim Brom) decided to build a personal, portable Beowulf cluster. Like a personal computer, it had to be inexpensive -- our budget was $2500 -- and small enough to sit on a person's desk. Joel and Tim named their system Microwulf. It has broken the $100/GFLOP barrier for double precision, and it is remarkably efficient by several measures. You may also want to take a look at the Value Cluster project for more information on $2500 clusters.

We wanted to mention that Distinguished Cluster Monkey Jeff Layton helped us write this article. To provide more than just a recipe, we also discuss the motivation, design, and technologies that have gone into Microwulf. As an aid to those who want to follow us, we also talk about some of the problems we ran into, the software we used, and the performance we achieved. Read on and enjoy!

Introduction

For some time now, I've been thinking about building a personal, portable Beowulf cluster. The idea began back in 1997, at a National Science Foundation workshop. A bunch of us attended this workshop and learned about parallel computing, using the latest version of MPI (the Message Passing Interface). After the meeting we went home realizing we had no such hardware with which to practice what we had learned. Those of us who were lucky got grants to build our own Beowulf clusters; others had to settle for running their applications on networks of workstations.

Even with a (typical) multiuser Beowulf cluster, I have to submit jobs via a batch queue, to avoid stealing the CPU cycles of other users of the cluster. This isn't too bad when I'm running operational software, but it is a real pain when I'm developing new parallel software.

So at the risk of sounding selfish, what I would really like -- especially for development purposes -- is my own, personal, Beowulf cluster. My dream machine is small enough to sit on my desk, with a footprint similar to that of a traditional PC tower. The system must also plug into a normal electrical outlet and run at room temperature without any special cooling beyond my normal office air conditioning.

By late 2006, two hardware developments had made it possible to realize my dream:

- Multicore CPUs became the standard for desktops and laptops; and

- Gigabit Ethernet (GigE) became the standard on-board network adaptor.

In the fall of 2006, the CS Department at Calvin College provided Tim Brom and me with a small in-house grant of $2500 to try to build such a system. The goals of the project were to build a cluster:

- Costing $2500 or less,

- Small enough to fit on my desk, or fit in a checked-luggage suitcase,

- Light enough to carry to my car (by hand),

- Powerful enough to provide at least 20 GFLOPS of measured performance:

  - for personal research,

  - for the High Performance Computing course I teach,

  - for demonstrations at professional conferences, local high schools, and so on,

- Powered via one line plugged into a standard 120V wall outlet, and

- Running at room temperature.

There have been previous efforts at building either small, transportable clusters or clusters with a very low $/GFLOP. While I won't list every one of these efforts, I do think it's worthwhile to acknowledge them. In the category of transportable clusters, the nominees are:

As you can see, the list of nominees for transportable Beowulf systems is short but distinguished. Let's move on to clusters that are gunning for the price/performance crown.

- 2005: Kronos

- 2003: KASY0

- 2002: Green Destiny

- 2001: The Stone Supercomputer

- 2000: KLAT2

- 2000: bunyip

- 1998: Avalon

There are other low-cost clusters that have, at one time or another, laid claim to being the price/performance king. As we'll see, Microwulf has a lower price/performance ratio ($94.10/GFLOP in January 2007, $47.84/GFLOP in August 2007) than any of these.

Designing the System

Microwulf is intended to be a small, cost-efficient, high-performance, portable cluster. With this set of somewhat conflicting goals, we set out to design our system.

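Back in the late 1960s, Gene Amdahl laid out a design principle for computing systems that has come to be known as "Amdahl's Other Law". We can summarize this principle as follows:

To be balanced, the following characteristics of a computing system should all be the same:

- the number of CPU instructions per second,

- the number of bytes of main memory, and

- the number of bits per second of I/O bandwidth.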

The basic idea is that there are three different ways to starve a computation: deprive it of CPU; deprive it of the main memory it needs to run; and deprive it of I/O bandwidth it needs to keep running. Amdahl's "Other Law" says that to avoid such starvation, you need to balance a system's CPU speed, the available RAM, and the I/O bandwidth.

For Beowulf clusters running parallel computations, we can translate Amdahl's I/O bandwidth into network bandwidth, at least for communication-intensive parallel programs. Our challenge is thus to design a cluster in which the CPU speed (GHz), the amount of RAM (GB) per core, and the network bandwidth (Gbps) per core are all fairly close to one another, with the CPU speed as high as possible, while staying within our $2500 budget.

With Gigabit Ethernet (GigE) the de facto standard these days, our network speed is limited to 1 Gbps per adaptor; a faster, low-latency interconnect like Myrinet would completely blow our budget. So with GigE as our interconnect, a perfectly balanced system would have 1 GHz CPUs and 1 GB of RAM for each core.

After much juggling of budget numbers on a spreadsheet, we decided to go with a GigE interconnect, 2.0 GHz CPUs, and 1 GB of RAM per core. This was purely a matter of trying to squeeze the most bang for the buck out of our $2500 budget, using Jan 2007 prices.

For our CPUs, we chose AMD Athlon 64 X2 3800+ AM2 CPUs. At $165 each in January 2007, these 2.0 GHz dual-core CPUs were the most cost-efficient CPUs we could find. (They are even cheaper now: about $65.00 as of 8/1/07.)

To keep the size as small as possible we chose MSI Micro-ATX motherboards. These boards are fairly small (9.6" by 8.2") and have an AM2 socket that supports AMD's multicore Athlon CPUs. More precisely, we used dual-core Athlon64 CPUs to build an 8-core cluster, but we could replace those CPUs with AMD quad-core Athlon64 CPUs and have a 16-core cluster, without changing anything else in the system!

These boards also have an on-board GigE adaptor and PCI-e expansion slots. This, coupled with the low cost of GigE NICs ($41), let us add a GigE NIC to one of the PCI-e slots on each motherboard, to better balance the CPU speeds and network bandwidth. This gave us 4 on-board adaptors plus 4 PCI-e NICs, for a total of 8 GigE channels, which we connected using an inexpensive ($75) 8-port Gigabit Ethernet switch. Our intent was to provide enough bandwidth for each core to have its own GigE channel, making our system less imbalanced with respect to CPU speed (two x 2 GHz cores) and network bandwidth (two x 1 Gbps adaptors). This arrangement also let us experiment with channel bonding the two adaptors, with running HPL over various MPI libraries using one vs. two NICs, with using one adaptor for "computational" traffic and the other for "administrative/file-service" traffic, and so on.

We equipped each motherboard with 2 GB (paired 1GB DIMMs) of RAM -- 1 GB for each core. This was only half of what a "balanced" system would have, but given our budget constraints, it was a necessary compromise, as these 8 GB consumed about 40% of our budget.
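To make the balance argument concrete, here is a small Python sketch (our own illustration, not part of any Microwulf tooling) that treats one GHz, one GB, and one Gbps per core as the ideal 1:1:1 ratio of Amdahl's Other Law and plugs in the numbers quoted above. The constant names and the `balance` helper are hypothetical.

```python
# Hypothetical constant names; the values are the ones quoted in the article.
NODES = 4
CORES_PER_NODE = 2
CLOCK_GHZ = 2.0            # Athlon 64 X2 3800+ clock speed
RAM_GB_PER_NODE = 2.0      # two 1 GB DIMMs per motherboard
GIGE_PORTS_PER_NODE = 2    # on-board GigE adaptor + one PCI-e GigE NIC
GBPS_PER_PORT = 1.0

cores = NODES * CORES_PER_NODE
ram_per_core = RAM_GB_PER_NODE / CORES_PER_NODE                       # GB per core
net_per_core = GIGE_PORTS_PER_NODE * GBPS_PER_PORT / CORES_PER_NODE   # Gbps per core


def balance(ghz, gb, gbps):
    """Express each resource as a fraction of the largest (1.0 everywhere = balanced)."""
    largest = max(ghz, gb, gbps)
    return {"cpu": ghz / largest, "ram": gb / largest, "net": gbps / largest}


ratios = balance(CLOCK_GHZ, ram_per_core, net_per_core)
# RAM a balanced system would need at this clock: 1 GB per GHz, for every core
balanced_ram_gb = CLOCK_GHZ * cores

print(f"{cores} cores: {CLOCK_GHZ} GHz, {ram_per_core} GB, {net_per_core} Gbps per core")
print("balance ratios:", ratios)
print(f"balanced RAM: {balanced_ram_gb:.0f} GB; installed: {NODES * RAM_GB_PER_NODE:.0f} GB")
```

Both RAM and network come out at half of the CPU figure, which is the compromise described above; doubling the RAM to 2 GB per core would bring the memory ratio up to 1.0.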

To minimize our system's volume, we chose not to use cases. Instead we went with an "open" design based loosely on those of Little Fe and this cluster. We chose to mount the motherboards directly on some scrap Plexiglas from our shop. (Scavenging helped us keep our costs down.) We then used threaded rods to connect and space the Plexiglas pieces vertically.

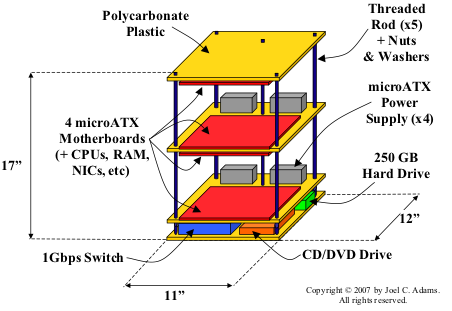

The bottom layer is a "sandwich" of two pieces of Plexiglas, between which are (i) our 8-port Gigabit Ethernet switch, (ii) a DVD drive, and (iii) a 250 GB drive. Above this "sandwich" are two other pieces of Plexiglas, spaced apart using the threaded rods, as shown in Figure One below:

As can be seen in Figure One, we chose to mount the motherboards on the underside of the top piece of Plexiglas, on both the top and the bottom of the piece below that, and on the top of the piece below that. Our aim in doing so was to minimize Microwulf's height. As a result, the top motherboard faces down, the bottom-most motherboard faces up, one of the two "middle" motherboards faces up, and the other faces down, as Figure One tries to show.

Since each of our four motherboards faces another motherboard that is upside-down with respect to it, the CPU/heatsink/fan assembly on one motherboard lines up with the PCI-e slots on the motherboard facing it. Because we were putting a GigE NIC in one of these PCI-e slots, we adjusted the spacing between the Plexiglas pieces to leave a 0.5" gap between the top of the fan on one motherboard and the top of the NIC on the opposing motherboard. As a result, we have about a 6" gap between the motherboards, as can be seen in Figure Two:

(Jeff notes: These boards have a single PCI-e x16 slot so in the future a GPU could be added to each node for added performance).

To power everything, we used four 350W power supplies (one per motherboard). We used double-sided carpet tape to attach two power supplies to each of the two middle pieces of Plexiglas, and then ran their power cords to a power strip that we attached to the top piece of Plexiglas, as shown in Figure Three:

(We also used adhesive Velcro strips to secure the hard drive, CD/DVD drive, and network switch to the bottom piece of Plexiglas.)

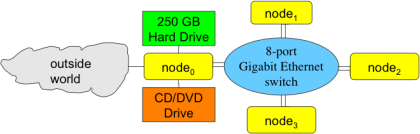

We wanted Microwulf to be able to access the outside world (e.g., to download software updates and the like), so we decided to configure the bottom motherboard and CPU as a head node for the cluster.

To do so, we attached the 250GB hard drive to that board and configured it to boot from that drive. We did the same for the CD/DVD drive shown in Figure One, to simplify software installation, data backup, and so on.

Since our campus network uses Fast Ethernet, we added a 10/100 NIC to the head node's PCI slot, to allow the head node to communicate with the outside world.

For simplicity, we made the top three nodes diskless and used NFS to export the space on the 250 GB drive to them. Figure Four below shows the logical layout of Microwulf:

Note that each node has two separate lines running from itself to the network switch.

With four CPUs blowing hot air into such a small volume, we thought we should keep the air moving through Microwulf. To accomplish this, we decided to purchase four Zalman 120mm case fans ($8 each) and grills ($1.50 each). Using scavenged twist-ties, we mounted two fans -- one for intake and one for exhaust -- on opposing sides of each pair of facing motherboards. This keeps air moving across the boards and NICs; Figure Five shows the two exhaust fans:

So far, this arrangement has worked very well: under load, the on-board temperature sensors report temperatures about 4 degrees above room temperature.

Last, we grounded each component (motherboards, hard drive, etc.) by wiring them to one of the power supplies.

As you can tell from some of our decisions, we had to make several tradeoffs to stay within our budget. Here's our final hardware listing with the prices we paid in January 2007.

| Component | Product | Unit Price | Quantity | Total |
|---|---|---|---|---|
| Motherboard | MSI K9N6PGM-F MicroATX | $80.00 | 4 | $320.00 |
| CPU | AMD Athlon 64 X2 3800+ AM2 CPU | $165.00 | 4 | $660.00 |
| Main Memory | Kingston DDR2-667 1GByte RAM | $124.00 | 8 | $992.00 |
| Power Supply | Echo Star 325W MicroATX Power Supply | $19.00 | 4 | $76.00 |
| Network Adaptor | Intel PRO/1000 PT PCI-Express NIC (node-to-switch) | $41.00 | 4 | $164.00 |
| Network Adaptor | Intel PRO/100 S PCI NIC (master-to-world) | $15.00 | 1 | $15.00 |
| Switch | Trendware TEG-S80TXE 8-port Gigabit Ethernet Switch | $75.00 | 1 | $75.00 |
| Hard drive | Seagate 7200 250GB SATA hard drive | $92.00 | 1 | $92.00 |
| DVD/CD drive | Liteon SHD-16S1S 16X | $19.00 | 1 | $19.00 |
| Cooling | Zalman ZM-F3 120mm Case Fans | $8.00 | 4 | $32.00 |
| Fan protective grills | Generic NET12 Fan Grill (120mm) | $1.50 + shipping | 4 | $10.00 |
| Support Hardware | 36" x 0.25" threaded rods | $1.68 | 3 | $5.00 |
| Fastener Hardware | Lots of 0.25" nuts and washers | | | $10.00 |
| Case/shell | 12" x 11" polycarbonate pieces (scraps from our Physical Plant) | $0.00 | 4 | $0.00 |
| Total | | | | $2,470.00 |

Non-Essentials

| Component | Product | Unit Price | Quantity | Total |
|---|---|---|---|---|
| KVM Switch | Linkskey LKV-S04ASK | $50.00 | 1 | $50.00 |
| Total | | | | $50.00 |

Aside from the support and fastener hardware (purchased at Lowe's) and the fan grills and KVM switch (purchased at newegg.com), all of these components were purchased through:

N F P Enterprises

1456 10 Mile Rd NE

Comstock Park, MI 49321-9666

(616) 887-7385

So we were able to keep the price for the whole system to just under $2,500. That's 8 cores with 8 GB of memory and 8 GigE NICs for under $2,500, or about $308.75 per core.
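As a quick sanity check on the table, this Python sketch re-adds the January 2007 line items. It is only an illustration: the list layout and the `bom_total` helper are our own, and items the table gives only as lump sums (the fan grills with shipping, the rounded threaded-rod total, and the nuts and washers) are entered as a single unit at their listed total.

```python
from decimal import Decimal

# January 2007 bill of materials, transcribed from the table above:
# (component, unit price, quantity). Lump-sum items are one unit at their total.
BOM_JAN_2007 = [
    ("Motherboard", Decimal("80.00"), 4),
    ("CPU", Decimal("165.00"), 4),
    ("Main memory", Decimal("124.00"), 8),
    ("Power supply", Decimal("19.00"), 4),
    ("GigE PCI-e NIC", Decimal("41.00"), 4),
    ("10/100 PCI NIC", Decimal("15.00"), 1),
    ("Gigabit switch", Decimal("75.00"), 1),
    ("Hard drive", Decimal("92.00"), 1),
    ("DVD/CD drive", Decimal("19.00"), 1),
    ("Case fans", Decimal("8.00"), 4),
    ("Fan grills (lump sum)", Decimal("10.00"), 1),
    ("Threaded rods (lump sum)", Decimal("5.00"), 1),
    ("Nuts and washers (lump sum)", Decimal("10.00"), 1),
    ("Polycarbonate scraps", Decimal("0.00"), 4),
]
CORES = 8


def bom_total(bom):
    """Sum unit price x quantity, using Decimal so cents are not rounded in binary."""
    return sum((price * qty for _, price, qty in bom), Decimal("0.00"))


total = bom_total(BOM_JAN_2007)
per_core = total / CORES
memory = sum(price * qty for name, price, qty in BOM_JAN_2007 if name == "Main memory")
memory_share = memory / total

print(f"total: ${total:,.2f}")
print(f"per core: ${per_core}")
print(f"memory share: {float(memory_share):.0%}")
```

The per-core figure reproduces the $308.75 above, and the memory share comes out at about 40% of the budget, matching the earlier remark about the 8 GB of RAM.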

The Devil is in the Details

In the previous section, we talked mostly about the hardware we used to build Microwulf. However, we encountered a number of small 'gotchas' that we think are worth sharing. Hopefully, by sharing them, we can save other people from spending too much time recreating the solutions.

One of the first decisions we actually spent some time thinking through was which Linux distribution to use. It's funny how the choice of a Linux distribution can cause such anguish and spark such debate. People don't seem to agonize quite as much over which version of Windows (2000? XP? Vista? Oh my!) or which BSD flavor to run, although every OS has its share of (heated) debate.

For various reasons, we have been a Gentoo shop for a while, so it would seem obvious that we would use Gentoo. But over time we had found Gentoo to be something of an administrative hassle. Since we wanted to keep Microwulf relatively simple (as simple as possible, but not overly simple) and we had experience with Ubuntu, we decided to give Ubuntu a try first.

We installed Ubuntu Desktop on the head node. We used Ubuntu 6.10 (Edgy), the latest stable release of Ubuntu at the time, which had a 2.6.17 kernel. The installation was very easy except for one thing: the driver for the on-board NIC was not included in the kernel until 2.6.18. So for the first two months of Microwulf's young life, we used an alpha version of Ubuntu 7.04 (Feisty), which had a 2.6.20 kernel and the drivers that we needed. 7.04 finally went stable in April, but we never had any trouble with the alpha version.

We installed Ubuntu Server on each of the top 3 "compute" nodes, since it did not carry all of the overhead of Ubuntu Desktop.

We also looked at several cluster management packages: ROCKS, Oscar, and Warewulf. Since our nodes, with the exception of the head node, are diskless, that ruled out ROCKS and Oscar, since, as far as we could tell, they didn't support diskless nodes. Warewulf looks like it would have worked well, but we didn't see that it would give us anything extra considering that this cluster is so small. So, we decided to go with an NFSroot approach to Microwulf.

Before we actually set up NFSroot for the "compute" nodes, we considered using iSCSI instead of NFS, mainly based on a paper we found. But we decided that it was more important to get the cluster up and running, and Ubuntu makes NFSroot really easy. It's as simple as editing /etc/initramfs-tools/initramfs.conf (setting BOOT=nfs) to generate an initial ramdisk that does NFSroot, and then setting up DHCP/TFTP/PXELinux on the head node, as you would for any diskless boot situation.

We did configure the network adaptors differently: we gave each onboard NIC an address on a 192.168.2.x subnet, and gave each PCI-e NIC an address on a 192.168.3.x subnet. Then we routed the NFS traffic over the 192.168.2.x subnet, to try to separate "administrative" traffic from computational traffic. It turns out that OpenMPI will use both network interfaces (see below), so this served to spread communication across both NICs.
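The two-subnet scheme is easy to script. The Python sketch below uses the standard `ipaddress` module to generate an /etc/hosts-style address plan; only the two subnets come from the text. The node names, the `-admin` suffix, and the rule that node *i* gets host number *i*+1 are hypothetical choices for illustration.

```python
import ipaddress

# Only the two subnets come from the article; everything else is hypothetical.
ADMIN_NET = ipaddress.ip_network("192.168.2.0/24")    # on-board NICs, NFS traffic
COMPUTE_NET = ipaddress.ip_network("192.168.3.0/24")  # PCI-e NICs
NODES = ["node0", "node1", "node2", "node3"]          # node0 = head node (hypothetical names)
FIRST_HOST = 1  # hypothetical: node i gets host number FIRST_HOST + i on both subnets


def address_plan(nodes, admin_net, compute_net, first_host=FIRST_HOST):
    """Map each node name to its (admin address, compute address) pair."""
    plan = {}
    for i, name in enumerate(nodes):
        admin_ip = admin_net.network_address + first_host + i
        compute_ip = compute_net.network_address + first_host + i
        plan[name] = (admin_ip, compute_ip)
    return plan


plan = address_plan(NODES, ADMIN_NET, COMPUTE_NET)
for name, (admin_ip, compute_ip) in plan.items():
    # /etc/hosts-style lines, one per interface
    print(f"{admin_ip}\t{name}-admin")
    print(f"{compute_ip}\t{name}")
```

Giving each node the same host number on both subnets keeps the mapping between a node's two interfaces obvious when reading logs or routing tables.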

One of the problems we encountered is that the on-board NICs (Nvidia) presented some difficulties. After our record-setting run (see the next section), we started to have trouble with the on-board NIC. After a little googling, we added the following option to the forcedeth module options:

forcedeth max_interrupt_work=35

The problem got better, but didn't go away. Originally we had the onboard Nvidia GigE adaptor mounting the storage. Unfortunately, when the Nvidia adaptor started to act up, it reset itself, killing the NFS mount and hanging the "compute" nodes. We're still working on fully resolving this problem, but it hasn't kept us from benchmarking Microwulf.

Performance: Now We're Talking

Once Microwulf was built and functioning, we naturally wanted to find out how 'fast' it was. "Fast" can mean many things, but since the HPL benchmark is the standard used for the Top500 list, we decided to use it as our first measure of performance. Yes, you can argue and disagree with us, but we needed to start somewhere.

We installed the development tools for Ubuntu (gcc-4.1.2) and then built both Open MPI and MPICH. Initially we used OpenMPI as our MPI library of choice and we had both GigE NICs configured (the on-board adaptor and the Intel PCI-e NIC that was in the x16 PCIe slot).

Then we built the GOTO BLAS library and HPL, the High Performance Linpack benchmark. The Goto BLAS library built fine, but when we tried to build HPL (which uses BLAS), we got a linking error indicating that someone had left a function named main() in a module named main.f in /usr/lib/libgfortranbegin.a. This conflicted with main() in HPL. Since a library should not need a main() function, we used ar to remove the offending module from /usr/lib/libgfortranbegin.a, after which everything built as expected.

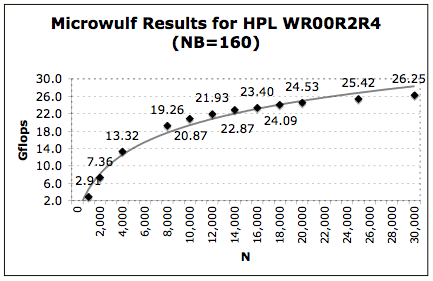

Next, we started to experiment with the various parameters for running HPL - primarily problem size and process layout. We varied PxQ between {1x8, 2x4}, varied NB between {100, 120, 140, 160, 180, 200}, and used increasing values of N (problem size) until we ran out of memory. As an example of the tests we did, Figure Six below is a plot of the HPL performance in GFLOPS versus the problem size N.

For Figure Six we chose PxQ=2x4, NB=160, and varied N from a very small number up to 30,000. Notice that above N=10,000, Microwulf achieves 20 GFLOPS, and with N greater than 25,000, it exceeds 25 GFLOPS. Anything above N=30,000 produced "out of memory" errors.
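Why did N top out around 30,000? HPL factors a dense N x N matrix of 8-byte doubles, so the matrix alone needs 8N² bytes spread across the nodes. The Python sketch below is illustrative: it ignores HPL's workspace and operating-system overhead, and the 80% fill target is a commonly quoted rule of thumb, not something we measured. It also enumerates the PxQ and NB values listed above against a hypothetical set of N values.

```python
import itertools
import math

BYTES_PER_DOUBLE = 8
TOTAL_RAM_BYTES = 8 * 2**30     # 8 GB installed across the four nodes


def matrix_bytes(n):
    """Bytes for HPL's N x N double-precision matrix (ignores HPL workspace and the OS)."""
    return BYTES_PER_DOUBLE * n * n


def largest_n(ram_bytes, fraction):
    """Largest N whose matrix fits in `fraction` of ram_bytes."""
    return math.isqrt(int(ram_bytes * fraction) // BYTES_PER_DOUBLE)


need = matrix_bytes(30_000)
print(f"N=30,000: {need / 1e9:.1f} GB, {need / TOTAL_RAM_BYTES:.0%} of installed RAM")
print("largest N using 80% of RAM:", largest_n(TOTAL_RAM_BYTES, 0.80))

# Sweep grid: the P x Q and NB values are from the text; the N step is hypothetical.
GRIDS = [(1, 8), (2, 4)]
BLOCK_SIZES = [100, 120, 140, 160, 180, 200]
N_VALUES = range(5_000, 30_001, 5_000)

runs = [(p, q, nb, n) for (p, q), nb, n in itertools.product(GRIDS, BLOCK_SIZES, N_VALUES)]
print(len(runs), "HPL runs")
```

At N=30,000 the matrix alone occupies roughly 84% of Microwulf's 8 GB, which is consistent with out-of-memory errors just above that size once per-node overhead is counted.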

We achieved a peak performance of 26.25 GFLOPS. The theoretical peak performance for Microwulf is 32 GFLOPS (eight cores x 2 GHz x 2 double-precision units per core), so we hit about 82% efficiency, which we find remarkable. We suspect that one reason for this high efficiency is Open MPI, which will use both GigE interfaces: it round-robins data transfers over the available interfaces unless you explicitly tell it to use only certain ones.

It's important to note that this performance occurred using the default system and Ethernet settings. In particular, we did not tweak any of the Ethernet parameters mentioned in Doug Eadline and Jeff Layton's article on cluster optimization. We were basically using "out of the box" settings for these runs.

To assess how well our NICs were performing, Tim did some follow-up HPL runs and used NetPIPE to gauge our NICs' latency. NetPIPE reported 16-20 usecs (microseconds) latency on the on-board NICs and 20-25 usecs latency on the PCI-e NICs, which was lower (better) than we were expecting.

As a check on performance we also tried another experiment. We channel-bonded the two GigE interfaces to produce, effectively, a single interface. We then used MPICH2 with the channel-bonded interface and the same HPL parameters we found to be good for Open MPI. The best performance we achieved was 24.89 GFLOPS (77.8% efficiency). So it looks like Open MPI with multiple interfaces beats MPICH2 with a bonded interface.

Another experiment we tried was to use Open MPI and just the PCI-e GigE NIC. Using the same set of HPL parameters we have been using we achieved a performance of 26.03 GFLOPS (81.3% efficiency). This is fairly close to the performance we obtained when using both interfaces. This suggests that the on-board NIC isn't doing as much work as we thought. We plan to investigate this more in the days ahead.
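The efficiency figures in this section are simply measured performance divided by theoretical peak. A minimal Python sketch of that arithmetic, using only numbers quoted above (the variable names and run labels are ours):

```python
CORES = 8
CLOCK_GHZ = 2.0
DP_UNITS_PER_CORE = 2   # double-precision results per core per cycle, as stated above

peak_gflops = CORES * CLOCK_GHZ * DP_UNITS_PER_CORE   # theoretical peak

# Best measured HPL results reported in this section (GFLOPS)
runs = {
    "Open MPI, both GigE NICs": 26.25,
    "MPICH2, bonded GigE NICs": 24.89,
    "Open MPI, PCI-e NIC only": 26.03,
}

for label, measured in runs.items():
    print(f"{label:26s} {measured:6.2f} GFLOPS  {measured / peak_gflops:6.1%} of peak")
```

This reproduces the 82.0%, 77.8%, and 81.3% efficiencies quoted for the three configurations.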

Now, let's look at the performance of Microwulf in relation to the Top500 list to see where it would have placed. Going through the archived lists, here is where Microwulf would have placed.

- Nov. 1993: #6

- Nov. 1994: #12

- Nov. 1995: #31

- Nov. 1996: #60

- Nov. 1997: #122

- Nov. 1998: #275

- June 1999: #439

- Nov. 1999: Off the list

Looking back at the lists is really a lot of fun. In November 1993, Microwulf would have been the 6th fastest machine on the planet! That's not bad considering that was only 14 years ago. As recently as 8 years ago, Microwulf would have ranked 439th on the list. That's not bad for a little 4-node, 8-core cluster measuring 11" x 12" x 17".

If you dig a little further, you learn some more interesting things about Microwulf and its performance compared to historic supercomputers. In Nov. 1993, when Microwulf would have been in 6th place, the 5th-place machine was a Thinking Machines CM-5/512 with 512 CPUs, which produced about 30 GFLOPS. So Microwulf's 4 CPUs (8 cores) deliver performance pretty close to that of the CM-5's 512 CPUs, which outnumber Microwulf's cores by a factor of 64!

In Nov. 1996, when Microwulf would have been in 60th place, the next machine on the list is a Cray T3D MC256-8 with 256 CPUs. It's kind of interesting to realize that Microwulf with 8 cores is faster than an 11 year old Cray with 256 CPUs. Not to mention the price difference -- a T3D cost millions of dollars! It puts into perspective the cluster and commodity revolution.

Efficiencies - World Record Time

Now, let's look at some metrics to determine how good Microwulf is or isn't. Believe it or not, these numbers can tell you a lot about your system. If we take the January 2007 price of Microwulf, $2,470, and divide by its 26.25 GFLOP performance, we can see that Microwulf achieves $94.10/GFLOP! So Microwulf has broken the proverbial $100/GFLOPS barrier (for double precision results)! Break out the champagne!

With energy prices rising, another useful metric to examine is the power/performance ratio. This ratio is increasingly important to big cluster farms (e.g., Google), and could become a new metric that people watch very closely. For Microwulf we have measured the total power consumption at 250W at idle (a bit over 30W per core) and 450W under load. This works out to 17.14 W/GFLOP under load. To make this number more meaningful, let's compare it with the power/performance numbers of some other systems.

One system that was designed for low power consumption is Green Destiny, which used very low power Transmeta CPUs and required very little cooling. The 240 CPUs in Green Destiny consumed about 3.2 kW, and the machine achieved about 101 GFLOPS. This gives Green Destiny a power/performance ratio of about 31.7 W/GFLOP, almost 2 times worse than Microwulf.

Another interesting comparison is to the Orion Multisystems clusters. Orion is no longer around, but a few years ago they sold two commercial clusters: a 12-node desktop cluster (the DS-12) and a 96-node deskside cluster (the DS-96). Both machines used Transmeta CPUs. The DS-12 used 170W under load, and its performance was about 13.8 GFLOPS. This gives it a power/performance ratio of 12.32 W/GFLOP (much better than Microwulf). The DS-96 consumed 1580W under load, with a performance of 109.4 GFLOPS. This gives it a power/performance ratio of 14.44 W/GFLOP, which again beats Microwulf.

Another way to look at power consumption and performance is to use the metric from the Green 500. Their metric is MFLOPS/Watt (the bigger the number, the better). Microwulf comes in at 58.33, the DS-12 at 81.18, and the deskside DS-96 at 69.24. So using the Green 500 metric, we can see that the Orion systems are more power efficient than Microwulf. But let's look a little deeper at the Orion systems.

The Orion systems look great at Watts/GFLOP, and considering the age of the Transmeta chips, that is no small feat. But let's look at the price/performance metric. The DS-12 desktop model had a list price of about $10,000, giving it a price/performance ratio of $724/GFLOP. The DS-96 deskside unit had a list price of about $100,000, so its price/performance is about $914/GFLOP. That is, while the Orion systems were much more power efficient, their price per GFLOP is much higher than Microwulf's, making them much less cost efficient.
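All of the ratios in this section follow from three numbers per system: measured GFLOPS, watts under load, and price. The Python sketch below recomputes them from the figures quoted above. The `System` class is our own illustrative helper, and Green Destiny has no price because none is quoted here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class System:
    name: str
    gflops: float              # measured HPL performance
    watts: float               # power draw under load
    price: Optional[float]     # price in dollars, None if not quoted

    def watts_per_gflop(self) -> float:
        return self.watts / self.gflops

    def mflops_per_watt(self) -> float:        # the Green 500 metric
        return self.gflops * 1000 / self.watts

    def dollars_per_gflop(self) -> Optional[float]:
        return None if self.price is None else self.price / self.gflops


SYSTEMS = [
    System("Microwulf (Jan 2007)", 26.25, 450, 2470),
    System("Green Destiny", 101, 3200, None),
    System("Orion DS-12", 13.8, 170, 10_000),
    System("Orion DS-96", 109.4, 1580, 100_000),
]

for s in SYSTEMS:
    cost = s.dollars_per_gflop()
    cost_text = "n/a" if cost is None else f"${cost:,.2f}/GFLOP"
    print(f"{s.name:22s} {s.watts_per_gflop():6.2f} W/GFLOP "
          f"{s.mflops_per_watt():6.2f} MFLOPS/W  {cost_text}")
```

Keeping the raw inputs in one place makes it easy to see that the Orion machines win on the power columns while Microwulf wins, by a wide margin, on the price column.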

Since Microwulf is better than the Orion systems in price/performance, and the Orion systems are better than Microwulf in power/performance, let's try some experiments with metrics to see if we can find a useful way to combine the metrics. Ideally we'd like a single metric that encompasses a system's price, performance, and power usage. As an experiment, let's compute MFLOP/Watt/$. It may not be perfect, but at least it combines all 3 numbers into a single metric, by extending the Green 500 metric to include price. You want a large MFLOP/Watt to get the most processing power per unit of power as possible. We also want price to be as small as possible so that means we want the inverse of price to be as large as possible. This means that we want MFLOP/Watt/$ to be as large as possible. With this in mind, let's see how Microwulf and Orion did.

- Microwulf: 0.02362

- Orion DS-12: 0.00812

- Orion DS-96: 0.00069

From these numbers (even though they are quite small), Microwulf is almost 3 times better than the DS-12 and almost 35 times better than the DS-96 using this metric. We have no idea whether this metric is truly meaningful, but it gives us something to ponder. It's basically the performance per unit power per unit cost. (OK, that's a little strange, but we think it could be a useful way to compare the overall efficiency of different systems.)

We might also compute the inverse of the MFLOP/Watt/$ metric, $/Watt/MFLOP (that is, price multiplied by watts per MFLOP), where you want this number to be as small as possible. (You want price to be small and you want Watt/MFLOP to be small.) Using this metric, we see the following:

- Microwulf: 42.34

- Orion DS-12: 123.19

- Orion DS-96: 1,444.24

This metric measures price times power per unit of performance. Because it is simply the inverse of MFLOP/Watt/$, the comparison comes out the same way: Microwulf is about 2.9 times better than the DS-12 and about 34 times better than the DS-96. It's probably a good idea to stop here, before we drive ourselves nuts with metrics.
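The combined metrics can be recomputed the same way. This Python sketch is illustrative only: it defines $/Watt/MFLOP as price multiplied by watts per MFLOPS, so that it is the exact inverse of MFLOP/Watt/$ and smaller is better, as described above. The function names are our own.

```python
# (name, measured GFLOPS, watts under load, price in dollars) as quoted above
SYSTEMS = [
    ("Microwulf", 26.25, 450, 2470),
    ("Orion DS-12", 13.8, 170, 10_000),
    ("Orion DS-96", 109.4, 1580, 100_000),
]


def mflops_per_watt_per_dollar(gflops, watts, price):
    """Green 500 metric divided by price: bigger is better."""
    return (gflops * 1000 / watts) / price


def dollar_watts_per_mflop(gflops, watts, price):
    """Price times watts per MFLOPS, the exact inverse of the above: smaller is better."""
    return price * watts / (gflops * 1000)


metrics = {
    name: (mflops_per_watt_per_dollar(g, w, p), dollar_watts_per_mflop(g, w, p))
    for name, g, w, p in SYSTEMS
}
base_fwd, base_inv = metrics["Microwulf"]
for name, (fwd, inv) in metrics.items():
    print(f"{name:12s} MFLOP/W/$ = {fwd:.5f}  $*W/MFLOP = {inv:9.2f}  "
          f"Microwulf advantage = {base_fwd / fwd:5.1f}x")
```

Because the two metrics are reciprocals, the "advantage" column is the same whichever one you use.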

Summary

We've seen that Microwulf is a 4-node, 8-core Beowulf cluster, measuring just 11" x 12" x 17", making it small enough to fit on a person's desktop, or fit into a checked-luggage suitcase for transport on an airplane.

Despite its small size, Microwulf provides measured performance of 26.25 GFLOPS on the HPL benchmark, and it cost just $2470 to build in January 2007. This gives it a price/performance ratio of $94.10/GFLOP!

Microwulf's excellent performance is made possible by:

- Multi-core CPUs becoming the standard. This makes it possible to cram a lot of processing elements in a small volume.

- Decreasing memory prices. While still the single most expensive part of Microwulf, memory prices continue to decline (see below), making it possible to avoid starving a computation of memory.

- Gigabit Ethernet becoming the standard. On-board GigE adaptors, inexpensive GigE NICs, and inexpensive GigE switches allow Microwulf to offer enough network bandwidth to avoid starving a parallel computation with respect to communication.

We hope we have whetted your interest in building a Microwulf of your own! We encourage you to take our basic Microwulf design and vary it in whatever way suits your fancy.

For example, aside from the fans, the one moving (i.e., likely to fail) component in Microwulf is the 250GB hard drive. With 4GB flash drives dropping in price, it would be an interesting experiment to replace the hard drive with 1-4 flash drives, to see if/how that affects performance.

Since memory prices have dropped since January, another variation would be to build a Microwulf that is balanced with respect to memory (16 GB of RAM, 2 GB per core). Recalling that HPL kept running out of memory when we increased N above 30,000, it would be interesting to see how many more FLOPS one could eke out with more RAM. The curve in Figure Six suggests that performance is beginning to plateau, but there still looks to be room for improvement there.

Since our MSI motherboards use AM2 sockets, another variation would be to build the same basic system, but replace our dual-core Athlon64s with AMD's new quad-core Athlon64 CPUs. (Hopefully available by the time you read this article.) That will give you a 16-core cluster in the same volume as our 8-core cluster. It would be interesting to see how much of a performance boost the new quad cores would provide; whether the price/performance is better or worse than that of Microwulf; the effect of additional GigE NICs on performance; and so on.

These are just a few of the many possibilities out there. If you build a Microwulf of your own, let us know -- we'd love to hear the details!

For more information (especially pictures) of Microwulf, please see our project site. Thanks for reading!

Joel Adams is professor of computer science at Calvin College. He earned his PhD in computer science from the University of Pittsburgh in 1988. He does research on Beowulf cluster interconnects, is the author of several books on computer programming, and has twice been named as a Fulbright Scholar (Mauritius 1998, Iceland 2005).

Tim Brom is a graduate student in computer science at the University of Kentucky. He received his Bachelor of Computer Science degree in May 2007 from Calvin College.

Epilogue: Prices for Microwulf (August 2007)

System component prices are dropping rapidly. CPUs, memory, network, hard drives - all are dropping at a pretty good clip. So how much would we pay if we built Microwulf in August, 2007? Let's find out using Newegg for our prices.

| Component | Product | Unit Price | Quantity | Total |
|---|---|---|---|---|
| Motherboard | MSI K9N6PGM-F MicroATX | $50.32 | 4 | $201.28 |
| CPU | AMD Athlon 64 X2 3800+ AM2 CPU | $65.00 | 4 | $260.00 |
| Main Memory | Corsair DDR2-667 2 x 1GByte RAM | $75.99 | 4 | $303.96 |
| Power Supply | LOGISYS Computer PS350MA MicroATX 350W Power Supply | $24.53 | 4 | $98.12 |
| Network Adaptor | Intel PRO/1000 PT PCI-Express NIC (node-to-switch) | $34.99 | 4 | $139.96 |
| Network Adaptor | Intel PRO/100 S PCI NIC (master-to-world) | $15.30 | 1 | $15.30 |
| Switch | SMC SMCGS8 10/100/1000Mbps 8-port Unmanaged Gigabit Switch | $47.52 | 1 | $47.52 |
| Hard drive | Seagate 7200 250GB SATA hard drive | $64.99 | 1 | $64.99 |
| DVD/CD drive | Liteon SHD-16S1S 16X | $23.83 | 1 | $23.83 |
| Cooling | Zalman ZM-F3 120mm Case Fans | $14.98 | 4 | $59.92 |
| Fan protective grills | Generic NET12 Fan Grill (120mm) | $6.48 | 4 | $25.92 |
| Support Hardware | 36" x 0.25" threaded rods | $1.68 | 3 | $5.00 |
| Fastener Hardware | Lots of 0.25" nuts and washers | | | $10.00 |
| Case/shell | 12" x 11" polycarbonate pieces (scraps from our Physical Plant) | $0.00 | 4 | $0.00 |
| Total | | | | $1,255.80 |

(Check the price links above -- by the time you read this, the prices have probably dropped even lower!)

Assuming that we'll get about the same performance and the same power consumed under load, this means Microwulf's August 2007 price/performance ratio would be $47.84/GFLOP, breaking the $50/GFLOP barrier! What a price drop!

The power/performance numbers stay the same (Watt/GFLOP and MFLOP/Watt).

The metrics that combine price, performance, and power are interesting. The MFLOP/W/$ is 0.04645, which is almost twice as good as the original Microwulf. The $/Watt/MFLOP is 21.53, which is again about twice as good as the original Microwulf.
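The August numbers can be checked with the same arithmetic. In this Python sketch (illustrative; the names are ours), performance and power are assumed unchanged, as stated above, the $0 polycarbonate scraps are left out, the threaded rods and fasteners are entered as lump sums as in the table, and $/Watt/MFLOP is taken as price times watts per MFLOPS.

```python
from decimal import Decimal

# August 2007 Newegg prices from the table above: (unit price, quantity).
BOM_AUG_2007 = [
    (Decimal("50.32"), 4),   # motherboards
    (Decimal("65.00"), 4),   # CPUs
    (Decimal("75.99"), 4),   # 2 x 1 GB memory kits
    (Decimal("24.53"), 4),   # power supplies
    (Decimal("34.99"), 4),   # Intel PRO/1000 PT NICs
    (Decimal("15.30"), 1),   # Intel PRO/100 S NIC
    (Decimal("47.52"), 1),   # 8-port GigE switch
    (Decimal("64.99"), 1),   # 250 GB hard drive
    (Decimal("23.83"), 1),   # DVD/CD drive
    (Decimal("14.98"), 4),   # case fans
    (Decimal("6.48"), 4),    # fan grills
    (Decimal("5.00"), 1),    # threaded rods (lump sum)
    (Decimal("10.00"), 1),   # nuts and washers (lump sum)
]
GFLOPS = 26.25   # assumed unchanged from January 2007
WATTS = 450      # assumed unchanged from January 2007

price = sum((unit * qty for unit, qty in BOM_AUG_2007), Decimal("0.00"))
dollars_per_gflop = float(price) / GFLOPS
mflops_per_watt_per_dollar = (GFLOPS * 1000 / WATTS) / float(price)
dollar_watts_per_mflop = float(price) * WATTS / (GFLOPS * 1000)

print(f"total ${price:,.2f}, ${dollars_per_gflop:.2f}/GFLOP")
print(f"MFLOP/W/$ {mflops_per_watt_per_dollar:.5f}, $*W/MFLOP {dollar_watts_per_mflop:.2f}")
```

Only the price changes between January and August, so every price-based metric improves by the same factor of roughly 1.97 (the ratio $2,470.00 / $1,255.80).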

Our special thanks go out to Jeff Layton, who did the lion's share in putting this article together, working out the metrics, and so on. Thank you, Jeff!