Static or Dynamic? It is a matter of balance

Now that we know how to identify the parallel parts of our program, the question is what to do with this knowledge. In other words, how do we write a parallel program? To answer this question, we will look at how a parallel program can be structured. Programs can be organized in different ways. We have already discussed the SPMD (Single Program Multiple Data) and MPMD (Multiple Programs Multiple Data) models. SPMD and MPMD describe how a program looks from the point of view of the cluster: in SPMD every node runs the same program, while in MPMD different nodes run different programs. Note that when using the MPMD model with MPI, an "app" or "procgroup" file is needed to start different programs on the cluster nodes. Let's see what the programs look like from the implementation standpoint.
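To make the SPMD/MPMD distinction concrete, here is a minimal Python sketch. It is not MPI code: a plain loop stands in for the launcher that starts one process per rank, and the `master`/`worker` roles are hypothetical. In SPMD every rank runs the same program and branches on its rank. In MPMD the launcher maps each rank to a different program, which is the job an "app" or "procgroup" file does for MPI.

```python
def master(rank: int, size: int) -> str:
    return f"rank {rank}: master of {size - 1} workers"


def worker(rank: int, size: int) -> str:
    return f"rank {rank}: worker"


def spmd_program(rank: int, size: int) -> str:
    # SPMD: one program; the rank decides which branch runs.
    return master(rank, size) if rank == 0 else worker(rank, size)


def launch_spmd(size: int) -> list[str]:
    # Every rank starts the same program.
    return [spmd_program(rank, size) for rank in range(size)]


def launch_mpmd(programs: list) -> list[str]:
    # MPMD: each rank starts the program listed for it, one entry per
    # rank, similar in spirit to an app/procgroup file.
    size = len(programs)
    return [prog(rank, size) for rank, prog in enumerate(programs)]


if __name__ == "__main__":
    print(launch_spmd(3))
    print(launch_mpmd([master, worker, worker]))
```

Both launches produce the same roles. The difference is only where the decision is made: inside one program (SPMD) or in the launch configuration (MPMD).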

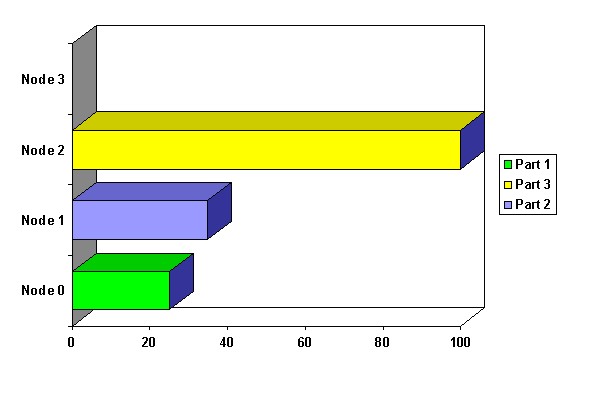

There are a variety of ways to structure parallel codes. In this article we explore three common models that have been used successfully in tools like BERT 77, a Fortran conversion tool. As we know, a parallel program may contain different concurrent parts. When these parts are run in parallel, the load on each processor may vary quite a bit. We will ignore data transfers and look only at the efficiency of the parallel code, i.e. load balance.
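One common way to put a number on load balance, assumed here for illustration rather than taken from the article, is the fraction of available processor time spent on useful work. A parallel run lasts as long as its busiest processor, so with P processors the available time is P times the longest busy time. This minimal sketch ignores data transfers, as the text does.

```python
def load_balance_efficiency(busy_ms: list[float]) -> float:
    """Fraction of available processor time spent doing useful work.

    busy_ms[p] is the busy time of processor p. The run ends when the
    busiest processor finishes, so the available time is
    len(busy_ms) * max(busy_ms). Data transfers are ignored.
    """
    if not busy_ms or max(busy_ms) <= 0:
        raise ValueError("need at least one processor with positive busy time")
    return sum(busy_ms) / (len(busy_ms) * max(busy_ms))


if __name__ == "__main__":
    print(load_balance_efficiency([40.0, 40.0, 40.0]))  # perfectly balanced: 1.0
    print(load_balance_efficiency([40.0, 40.0, 20.0]))  # one processor idles half the time
```

A value of 1.0 means every processor is busy for the whole run. Lower values mean some processors sit idle waiting for the slowest one.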

An Example

Let's explore an example:

```fortran
CALL S1(X)
DO I = 1, N
   CALL S2(Y(I))
ENDDO
CALL S3(Z)
```

These subroutines have no side effects, so our program has three independent parts: CALL S1, CALL S3, and the DO loop. We also assume that the loop is concurrent, i.e. its iterations are independent and can be executed in any order or at the same time. The execution times are as follows:

| Part | Time |
|---|---|
| S1 | 25 ms |
| LOOP | 100 ms |
| S3 | 35 ms |
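As a quick check on these numbers, here is a minimal Python sketch that assumes three processors, each running exactly one part (the loop is not split), and ignores data transfers as stated above.

```python
# Execution times from the table, in milliseconds.
PART_TIMES_MS = {"S1": 25.0, "LOOP": 100.0, "S3": 35.0}


def one_part_per_processor(times):
    """Timing if every part runs on its own processor (no transfers)."""
    sequential = sum(times.values())   # parts run one after another
    parallel = max(times.values())     # elapsed time = slowest processor
    procs = len(times)
    return {
        "sequential_ms": sequential,
        "parallel_ms": parallel,
        "speedup": sequential / parallel,
        "efficiency": sequential / (procs * parallel),
    }


if __name__ == "__main__":
    for key, value in one_part_per_processor(PART_TIMES_MS).items():
        print(f"{key}: {value:.3f}")
```

Under these assumptions the loop dominates: the run takes 100 ms instead of 160 ms, but the processors running S1 and S3 sit idle for 75 ms and 65 ms, so only about 53% of the available processor time is used.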

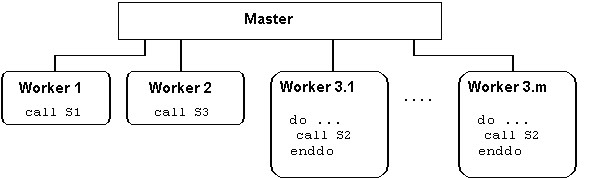

| Sidebar: Flow Algorithm Template |

|

master

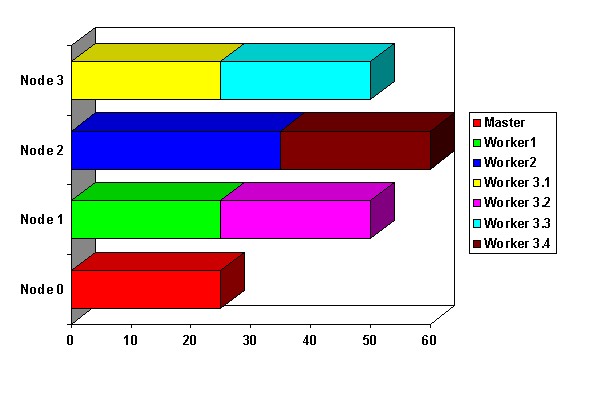

n_workers = m+2

balance workers to workload

do k = 1, n_nodes

transfer data to node k

enddo

do k = n_nodes+1, n_workers

wait for a worker to finish

receive data from worker w

transfer data to worker w

enddo

do k = 1, n_nodes

wait for finishing worker

receive data from worker

enddo

worker

do while(not_finished)

receive job from master

if(job .eq. job1) then

receive x

call s1(x)

send x to master

elseif (job .eq. job2) then

receive imin, imax, y(imin: imax)

do i =imin, imax

call s2(y(i))

enddo

send y(imin: imax)

elseif (job .eq. job3) then

receive z

call s3(z)

send z to master

endif

|
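To make the message flow of the template concrete, here is a minimal Python sketch under clearly stated assumptions: threads stand in for cluster nodes and `queue.Queue` objects stand in for MPI send and receive. The bodies of `s1`, `s2`, `s3`, the job tuples and the `split` helper are all hypothetical. Chunk bounds are 0-based with an exclusive upper end, unlike the Fortran `imin:imax`.

```python
import queue
import threading


# Hypothetical stand-ins for the side-effect-free subroutines S1, S2, S3.
def s1(x):
    return x + 1


def s2(y):
    return 2 * y


def s3(z):
    return z - 1


def worker(w, inbox, results):
    """Worker w: run jobs from its inbox until the master says stop."""
    while True:                                  # do while (not_finished)
        job = inbox.get()                        # receive job from master
        if job[0] == "job1":
            results.put((w, ("x", s1(job[1]))))  # send x to master
        elif job[0] == "job2":
            _, imin, imax, chunk = job
            results.put((w, ("y", imin, imax, [s2(v) for v in chunk])))
        elif job[0] == "job3":
            results.put((w, ("z", s3(job[1]))))  # send z to master
        else:                                    # "stop": leave the loop
            return


def split(n, m):
    """0-based (imin, imax) bounds, imax exclusive, of m near-equal chunks."""
    base, extra = divmod(n, m)
    bounds, start = [], 0
    for k in range(m):
        size = base + (1 if k < extra else 0)
        bounds.append((start, start + size))
        start += size
    return bounds


def master(x, y, z, n_nodes, m):
    jobs = [("job1", x)]
    jobs += [("job2", lo, hi, y[lo:hi]) for lo, hi in split(len(y), m)]
    jobs.append(("job3", z))                     # n_jobs = m + 2
    assert len(jobs) >= n_nodes, "template assumes n_jobs >= n_nodes"

    inboxes = [queue.Queue() for _ in range(n_nodes)]
    results = queue.Queue()                      # shared "finished" channel
    threads = [threading.Thread(target=worker, args=(w, inboxes[w], results))
               for w in range(n_nodes)]
    for t in threads:
        t.start()

    out = {"x": None, "y": list(y), "z": None}

    def collect():
        w, res = results.get()                   # wait for a worker to finish
        if res[0] == "y":
            _, lo, hi, vals = res
            out["y"][lo:hi] = vals
        else:
            out[res[0]] = res[1]
        return w

    for k in range(n_nodes):                     # one job per worker to start
        inboxes[k].put(jobs[k])
    for k in range(n_nodes, len(jobs)):          # next job to whoever is free
        inboxes[collect()].put(jobs[k])
    for _ in range(n_nodes):                     # last results, then stop
        inboxes[collect()].put(("stop",))
    for t in threads:
        t.join()
    return out["x"], out["y"], out["z"]


if __name__ == "__main__":
    print(master(10, [1, 2, 3, 4, 5], 7, n_nodes=2, m=3))
```

Every worker always holds exactly one outstanding job until the final loop, so each worker reports once more and receives exactly one stop message. The result does not depend on which worker happens to finish first.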

As the template shows, the master first assigns one job to each of the first n_nodes workers. It then monitors the state of the workers: when a worker finishes, the master receives its results and assigns it the next job. When all the jobs have been assigned, the master simply waits for the remaining results.
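The timing effect of this dynamic assignment can be estimated with a small event simulation. This Python sketch assumes, beyond what the article states, that the loop is split into m equal chunks and that jobs are handed out in the order S1, loop chunks, S3. Transfers are ignored as above, and `simulate_flow` is a hypothetical helper name.

```python
import heapq


def simulate_flow(job_ms, n_nodes):
    """Return (makespan, busy time per worker) for the flow algorithm.

    Jobs are handed out in list order: job k goes to worker k for the
    first n_nodes jobs, and every later job goes to the worker that
    becomes free first. Data transfer time is ignored.
    """
    busy = [0.0] * n_nodes
    free_at = []                                  # heap of (free time, worker)
    for k, t in enumerate(job_ms):
        if k < n_nodes:
            start, w = 0.0, k                     # initial assignment
        else:
            start, w = heapq.heappop(free_at)     # wait for a worker to finish
        busy[w] += t
        heapq.heappush(free_at, (start + t, w))
    makespan = max((end for end, _ in free_at), default=0.0)
    return makespan, busy


if __name__ == "__main__":
    s1, loop, s3 = 25.0, 100.0, 35.0              # times from the table, in ms
    m = 4                                         # assumed number of loop chunks
    jobs = [s1] + [loop / m] * m + [s3]
    makespan, busy = simulate_flow(jobs, n_nodes=3)
    print(makespan, busy)                         # 60.0 [50.0, 50.0, 60.0]
    print(simulate_flow([s1, loop, s3], n_nodes=3)[0])  # 100.0 (loop not split)
```

With the loop in four chunks on three workers, the last worker finishes at 60 ms instead of 100 ms, and about 89% (160/180) of the available processor time is used under these assumptions.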

This work is licensed under CC BY-NC-SA 4.0