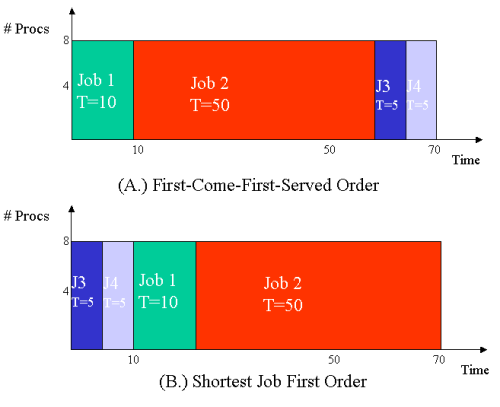

Let's look at a simple example. Suppose you have a small, 8-node cluster with single-processor nodes, and suppose you are fortunate enough to have jobs that all require 8 processors, but the time to execute these jobs varies. Suppose at a given time four of these jobs are sitting in the queue waiting for execution, with a 10-minute job first, a 50-minute job second, and two 5-minute jobs after that. Figure One (a) shows a simple line graph of what would happen if these jobs were executed in the order received, a First-Come, First-Served (FCFS) scheduling algorithm. In this example, utilization is 100%, and you complete all four jobs in 70 minutes, so your average throughput is 1 job every 17.5 minutes. Since all processors were in use all the time in this ideal case, it's impossible to improve utilization and throughput.

So, what's left for a scheduler to do? Well, let's look at the turnaround time. The first job completes 10 minutes after the start of our run. The second one completes 50 minutes later, or 60 minutes after the starting time, the third at 65, and the fourth after 70 minutes. So, our average turnaround time is (10 + 60 + 65 + 70)/4, which means each of our users waited on average 51.25 minutes for their jobs to complete.

Now, let's try the same jobs with a different scheduling order. In Figure One (b), you'll see the same four jobs run Shortest-Job-First (SJF). Utilization is still 100%, throughput is still one job every 17.5 minutes, but now the average turnaround time is (5 + 10 + 20 + 70)/4, or 26.25 minutes. So, on average each user spends 25 minutes less waiting for their results. A cluster running the SJF scheduler is going to seem much faster!
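The arithmetic in this example is easy to check with a few lines of Python. This is only a minimal sketch: the function name `average_turnaround` is made up for illustration, and it assumes, as the example does, that every job needs the whole cluster, so jobs simply run back to back.

```python
from itertools import accumulate

def average_turnaround(runtimes):
    """Mean completion time when whole-cluster jobs run back to back in this order."""
    completions = list(accumulate(runtimes))  # running total = finish time of each job
    return sum(completions) / len(completions)

queue = [10, 50, 5, 5]  # runtimes in minutes, in arrival order

fcfs = average_turnaround(queue)          # run in arrival order
sjf = average_turnaround(sorted(queue))   # run shortest job first
makespan = sum(queue)                     # identical for both orderings

print(f"FCFS average turnaround: {fcfs} minutes")
print(f"SJF average turnaround:  {sjf} minutes")
print(f"Throughput: one job every {makespan / len(queue)} minutes")
```

Reordering the queue changes only the intermediate completion times, never the total, which is why turnaround moves while utilization and throughput stay put.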

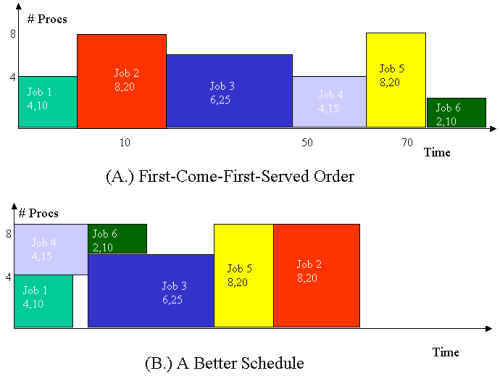

Most situations aren't quite so neat when you are dealing with clusters, as it is unlikely that each job will need the whole cluster. So, when deciding which jobs to run next, the scheduler needs to take into account both the number of processors the job needs and how long it is likely to run. Figure Two shows two scenarios with jobs of various sizes in both of these dimensions. The top graph shows an FCFS execution of these jobs, and the bottom shows a better schedule. Here, the scheduling isn't purely shortest job first, but rather a more complicated system where multiple jobs may be active at the same time (though still only one task on any given processor). The schedule shown in Figure Two (b) improves not only turnaround time, but utilization and throughput as well.
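To get a feel for why the order matters once jobs vary in width as well as length, here is a rough simulation sketch. The job mix below is hypothetical (it is not the data behind Figure Two), the `simulate` function is invented for illustration, and the "better" policy is a plain greedy first-fit pass over the queue, not the algorithm of any particular scheduler. It also keeps the assumption that all jobs are queued at time zero with known runtimes.

```python
import heapq

def simulate(jobs, total_procs, strict_fcfs):
    """Run jobs given as (procs, runtime) pairs, all queued at time 0.

    Returns (average turnaround, makespan, utilization).
    """
    if any(procs > total_procs for procs, _ in jobs):
        raise ValueError("a job needs more processors than the cluster has")

    pending = list(range(len(jobs)))  # job indices, in queue order
    running = []                      # min-heap of (finish_time, procs)
    finish = [0] * len(jobs)
    free, now = total_procs, 0

    while pending:
        # Start every queued job that fits right now.
        # Strict FCFS gives up at the first job that does not fit.
        i = 0
        while i < len(pending):
            procs, runtime = jobs[pending[i]]
            if procs <= free:
                free -= procs
                finish[pending[i]] = now + runtime
                heapq.heappush(running, (now + runtime, procs))
                pending.pop(i)
            elif strict_fcfs:
                break
            else:
                i += 1
        if pending:
            # Advance the clock to the next completion and free its processors.
            now, procs = heapq.heappop(running)
            free += procs
            while running and running[0][0] == now:
                free += heapq.heappop(running)[1]

    makespan = max(finish)
    work = sum(procs * runtime for procs, runtime in jobs)
    return sum(finish) / len(jobs), makespan, work / (total_procs * makespan)

# Hypothetical queue on an 8-processor cluster: (processors, minutes)
jobs = [(4, 30), (8, 20), (4, 30), (2, 10), (2, 10)]

fcfs = simulate(jobs, total_procs=8, strict_fcfs=True)
first_fit = simulate(jobs, total_procs=8, strict_fcfs=False)
print("FCFS      (turnaround, makespan, utilization):", fcfs)
print("First-fit (turnaround, makespan, utilization):", first_fit)
```

With this particular queue, letting the second 4-processor job run alongside the first shortens the whole run, so turnaround, throughput, and utilization all improve together. A greedy pass like this can also postpone wide jobs indefinitely when narrow jobs keep arriving, which is one reason production schedulers are more elaborate.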

Real schedulers have a much harder problem. In both of the examples above, I made two completely fallacious assumptions: that all jobs had arrived in the queue at the time the scheduler planned how to schedule them, and, more importantly, that the scheduler knew with perfect accuracy how long jobs would take before it executed them. In practice, schedulers must be able to accommodate new jobs arriving at random times, and wildly inaccurate user estimates of runtime. Plus, different jobs might differ in importance, and jobs may crash or hang. Most modern resource management systems also have more than one queue, to separate jobs of different length or different priority (for instance, to separate paying customers from your ongoing search for extra-terrestrial intelligence). But even these simple examples show that the choice of scheduler can have a huge impact on the performance of your cluster.
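As a small illustration of how much the perfect-knowledge assumption matters, the sketch below reruns the four whole-cluster jobs from the first example, but orders them by a user-supplied estimate while charging the actual runtime. The bad estimate (a 50-minute job declared as 4 minutes) is invented for illustration, as is the function name.

```python
from itertools import accumulate

def sjf_by_estimate(jobs):
    """jobs: (estimated, actual) runtimes in minutes.

    Orders jobs by the estimate, runs each for its actual time,
    and returns the average turnaround.
    """
    order = sorted(jobs, key=lambda job: job[0])
    completions = list(accumulate(actual for _, actual in order))
    return sum(completions) / len(completions)

accurate = [(10, 10), (50, 50), (5, 5), (5, 5)]
misjudged = [(10, 10), (4, 50), (5, 5), (5, 5)]  # hypothetical: 50-minute job claimed as 4

print("SJF with accurate estimates:", sjf_by_estimate(accurate), "minutes")
print("SJF with one bad estimate:  ", sjf_by_estimate(misjudged), "minutes")
```

In this made-up case a single bad estimate puts the longest job at the front of the line, and average turnaround ends up worse than the 51.25 minutes of plain FCFS.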

Conclusions

This column has looked at the fundamental concepts of resource management, and hopefully given you some insight into why this is an important issue. Next month, we'll talk some more about available scheduling software, including the packages mentioned in this article (PBS in its various forms, Maui, and LSF) along with Sun's Grid Engine, IBM's LoadLeveler, and perhaps a few others. We'll get into the critical issue of user interface, and show some quick configuration examples.

This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux, you may wish to visit Linux Magazine.

Dan Stanzione is currently the Director of High Performance Computing for the Ira A. Fulton School of Engineering at Arizona State University. He previously held appointments in the Parallel Architecture Research Lab at Clemson University and at the National Science Foundation.