In this installment, the value cluster is given tools that do the heavy lifting. Both LAM/MPI and SGE (Sun Grid Engine) will be installed and tested. Other nifty tools will be discussed as well.

In the last part of our story, we were about to name the key witness in the, no wait, wrong story. We talked about installing Warewulf on the master node and then booting the compute nodes. At the end of the article we were able to boot all of the nodes and run wwtop to see that the nodes were alive and breathing.

While the cluster was up and running, it didn't have all of the "fun" toys yet. In particular, we wanted to run the Top500 benchmark (HPL) and see where we placed on the list (for the serious readers, that is a joke). Before we do that, however, we need more software installed and, more importantly, we need to know if everything is working correctly.

As you may recall, in part one we discussed the hardware and in part two we installed the Warewulf software. So if you have been looking at wwtop for a while, we are here to get more of those lights blinking. We are going to do some configuration and some package installation. In the end, you will have a good grasp of how your cluster is running and be able to run programs both from the command prompt and through a batch queuing system. Along the way, you will also get a better appreciation for how the Warewulf software actually works. Our cluster is named kronos, so if you are following along, references to kronos mean "your cluster".

Our own VNFS

Because we are going to start changing things, let's first create our own VNFS (Virtual Node File System) and keep the default as a backup. As you may recall, the VNFS is the filesystem image that is used to make the RAM disk image used by Warewulf. We decided to name the VNFS kronos (creative, aren't we?). So first, we modified the file /etc/warewulf/nodes.conf to use our VNFS. We changed the line,

vnfs = default

to reflect our cluster name:

vnfs = kronos

Then we modified the file /etc/warewulf/vnfs.conf to reflect the changes we wanted. These changes tell the build scripts what we want in the VNFS and which kernel modules to include. The changes are shown in Sidebar One.
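If you would rather script the nodes.conf edit than open an editor, a single sed substitution is enough. The helper below is our own sketch, not part of Warewulf: the name set_vnfs is hypothetical, and it assumes the setting is written as a "vnfs = name" line exactly as shown above and that GNU sed (which supports -i) is available, as it is on Fedora.

```shell
# set_vnfs FILE NAME  (hypothetical helper)
# Rewrite the "vnfs = ..." line in FILE so it selects the VNFS called NAME.
# A copy of the original file is kept as FILE.bak.  NAME must not contain "/".
set_vnfs() {
    file=$1
    name=$2
    cp -p "$file" "$file.bak" || return 1
    # Only lines of the form "vnfs = value" (optionally indented) change;
    # a key such as "vnfs dir" does not match because "=" must follow "vnfs".
    sed -i "s/^\([[:space:]]*vnfs[[:space:]]*=[[:space:]]*\).*/\1$name/" "$file"
}

# Example (as root on the master node):
#   set_vnfs /etc/warewulf/nodes.conf kronos
```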

Sidebar One: Kronos vnfs.conf

The following is our new vnfs.conf file. We added the sis900 module and removed some modules we will not need. We then added an entry for a kronos VNFS and set it as the default. Notice the different ways you can configure the VNFS; we will explain more of these options in the text.

kernel modules = modules.dep sunrpc lockd nfs jbd ext3 mii crc32 e100 e1000 bonding sis900

kernel args = init=/linuxrc

path = /kronos # relative to 'vnfs dir' in master.conf

[generic]

excludes file = /etc/warewulf/vnfs/excludes

sym links file = /etc/warewulf/vnfs/symlinks

fstab template = /etc/warewulf/vnfs/fstab

[small]

excludes file = /etc/warewulf/vnfs/excludes-aggressive

sym links file = /etc/warewulf/vnfs/symlinks

fstab template = /etc/warewulf/vnfs/fstab

[hybrid]

ramdisk nfs hybrid = 1

excludes file = /etc/warewulf/vnfs/excludes-nfs

sym links file = /etc/warewulf/vnfs/symlinks-nfs

fstab template = /etc/warewulf/vnfs/fstab-nfs

[kronos]

default = 1 # Make this VNFS the default

excludes file = /etc/warewulf/vnfs/excludes-aggressive

sym links file = /etc/warewulf/vnfs/symlinks

fstab template = /etc/warewulf/vnfs/fstab


Now that we have defined our VNFS, let's create it! Run the following command to create the VNFS:

# wwvnfs.create

This command will populate the /vnfs/kronos directory with a new file system. You can look in the directory /vnfs/kronos to see what the command did. To build the actual image that will be used for the nodes from this VNFS, run the following command:

# wwvnfs.build

In the /tftpboot directory, you will see the file kronos.img.gz, which is the image file for the compute nodes.

Install PDSH

Before we use our new kronos VNFS image, we want to install a handy package. The default installation of Warewulf did not include pdsh, which stands for parallel distributed shell. The pdsh package allows us to issue commands to all (or some) of the nodes at one time, and it only needs to be installed on the master node. As an aside, pdsh is probably most useful to root. While users may find pdsh useful for getting node information, there is usually no reason for users to run programs on the cluster using pdsh.

First, download the file pdsh-1.7-9.caos.src.rpm and rebuild the rpm using the command rpmbuild --rebuild pdsh-1.7-9.caos.src.rpm. Install the resulting binary rpm (look in /usr/src/redhat/RPMS/i386) on the master node using rpm -i pdsh-1.7-9.caos.i386.rpm.

The pdsh package needs to know the node names of your cluster. These names can be given on the command line or in a file. For convenience, let's create a file called kronos-nodes in /etc/warewulf that lists the nodes kronos00-kronos06 (one per line). This file is a list of all the nodes in your cluster (not counting the master node).
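Typing seven names by hand is easy enough, but a tiny loop scales better if the cluster grows. This is a minimal sketch: make_nodelist is a hypothetical helper, and it assumes node names are a prefix followed by a two-digit number, as with kronos00-kronos06.

```shell
# make_nodelist PREFIX FIRST LAST  (hypothetical helper)
# Print PREFIX followed by a zero-padded two-digit node number, one per line.
make_nodelist() {
    prefix=$1
    first=$2
    last=$3
    i=$first
    while [ "$i" -le "$last" ]; do
        printf '%s%02d\n' "$prefix" "$i"
        i=$((i + 1))
    done
}

# Example (as root on the master node):
#   make_nodelist kronos 0 6 > /etc/warewulf/kronos-nodes
```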

Then, as root, add the following to your .bashrc file:

export WCOLL=/etc/warewulf/kronos-nodes

The WCOLL variable is used by pdsh to find the list of machines in your cluster. To test pdsh, run the command pdsh hostname. The output should look something like the following.

kronos00: kronos00
kronos02: kronos02
kronos04: kronos04
kronos03: kronos03
kronos01: kronos01
kronos05: kronos05
kronos06: kronos06

Note that the order of the output is not the order in which the nodes were listed in the kronos-nodes file. pdsh runs the command on all of the nodes at the same time and prints each result as it arrives, so the order depends on which nodes answer first. This effect is common on clusters: many things happen at once, and results may not arrive in the order you expect.
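Because the output arrives in whatever order the nodes answer, spotting a node that did not answer at all is easier with a script than by eye. The sketch below assumes pdsh prefixes each output line with "host: " as in the sample above, and that pdsh reports connection errors on stderr so only real output reaches the pipe; missing_nodes is a hypothetical helper name.

```shell
# missing_nodes NODEFILE  (hypothetical helper)
# Read pdsh output ("host: text" lines) on stdin and print, sorted, every
# host listed in NODEFILE that produced no output at all.
missing_nodes() {
    awk -F': ' '
        FILENAME == ARGV[1] { want[$1] = 1; next }   # first file: expected hosts
        { seen[$1] = 1 }                              # stdin: hosts that answered
        END { for (h in want) if (!(h in seen)) print h }
    ' "$1" - | sort
}

# Example (as root on the master node):
#   pdsh hostname | missing_nodes /etc/warewulf/kronos-nodes
```

An empty result means every node answered. If you only want the output in node order, piping pdsh hostname through sort is enough.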

Changing Module Options on Nodes

One of the important things we will want to be able to do is change some parameters on the nodes, and among the most important are kernel module options. We chose to create our own VNFS so we could tune the system. In particular, the Gigabit Ethernet driver has several module options that may come in handy. To begin, make a backup of the modprobe.conf.dist file in the /vnfs/kronos/etc directory. We can then make changes to the nodes by editing the original file.

As an example, we wanted to experiment with the settings on the GigE cards, and we tried various settings for the driver. One in particular was the Interrupt Throttle Rate. We found that leaving it at its default value (8000) gave us rather high latency (~65 μs), while turning off interrupt throttling cut the latency roughly in half (~29 μs). Of course, this means the CPU does more work servicing interrupts, but in some cases the lower latency is worth it.

To change the options for the e1000 cards (GigE) you need to modify the /vnfs/kronos/etc/modprobe.conf.dist file by adding the following line to the end of the file.

options e1000 InterruptThrottleRate=0 TxIntDelay=0

And make sure you add the same line to /etc/modprobe.conf on the master node as well!
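Since the same line goes into two files, and it is easy to append it twice while experimenting, a small idempotent helper can keep things tidy. This is a sketch under our own assumptions: append_once is a hypothetical name, the .orig backup suffix is our choice, and each file is assumed to end with a newline.

```shell
# append_once FILE LINE  (hypothetical helper)
# Back up FILE to FILE.orig the first time it is touched, then append LINE
# unless an identical line is already present, so re-running is harmless.
append_once() {
    file=$1
    line=$2
    [ -e "$file.orig" ] || cp -p "$file" "$file.orig" || return 1
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (as root on the master node):
#   opts='options e1000 InterruptThrottleRate=0 TxIntDelay=0'
#   append_once /vnfs/kronos/etc/modprobe.conf.dist "$opts"
#   append_once /etc/modprobe.conf "$opts"
```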

For these changes to take effect on the cluster, you have to rebuild the VNFS and reboot the nodes. Run the following commands on the master node to rebuild the VNFS.

# wwvnfs.build
# wwmaster.dhcpd

We are now ready to reboot the nodes. Rather than pushing reset buttons or typing a bunch of commands, let's use pdsh. Enter the following:

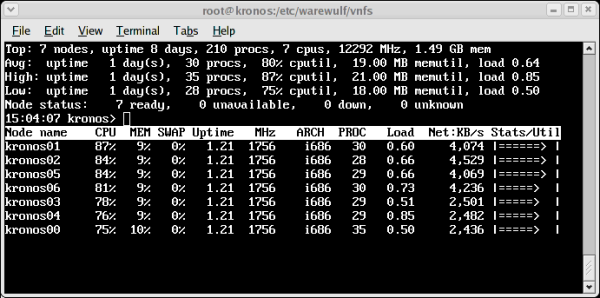

# pdsh /sbin/reboot

Use the Warewulf command wwtop (see Figure One) to check that the nodes have come back up. When nodes are off or unreachable, you will see "DOWN" listed in the "Stats/Util" column. As the nodes reboot, "DOWN" should change to "NOACCESS". When all the nodes show "NOACCESS", the cluster is ready for user accounts. Run the command wwnode.sync to push the accounts to all of the nodes. Note that on kronos, some nodes seem to take a long time to reboot because they get stuck in the shutdown process. We're looking into this problem. If it happens, you can press the reset button on the node; there is no penalty for not shutting down the filesystem cleanly, since the node's filesystem is a RAM disk built from the VNFS image at boot.
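Because this rebuild-and-reboot cycle comes up every time the VNFS changes, it may be worth wrapping in a shell function. The sketch below simply chains the commands used above; rebuild_and_reboot and the RUN dry-run variable are our own inventions, not Warewulf features, and it assumes WCOLL is set so that pdsh reaches only the compute nodes.

```shell
# rebuild_and_reboot  (hypothetical helper)
# Rebuild the VNFS image, refresh the DHCP configuration, then reboot every
# node in $WCOLL.  Set RUN=echo for a dry run that only prints the commands.
rebuild_and_reboot() {
    $RUN wwvnfs.build || return 1
    $RUN wwmaster.dhcpd || return 1
    $RUN pdsh /sbin/reboot
}

# Example (as root on the master node):
#   RUN=echo rebuild_and_reboot    # show what would run
#   rebuild_and_reboot             # do it for real
# Then run wwnode.sync by hand once wwtop shows every node as NOACCESS.
```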

It is also most effective to make the same module changes on the master node. The e1000 module on the master node can be reloaded with the following commands (change eth1 to match your cluster interconnect).

# ifdown eth1
# rmmod e1000
# modprobe e1000
# ifup eth1

Also, we do not want to twiddle with the on-board Fast Ethernet service network, as it is used to boot and manage the nodes.

To check the Ethernet module options on the master node, run dmesg | grep e1000. This shows all of the kernel messages about the GigE NIC (Intel e1000 NIC), and the new settings should be noted there. To check the Ethernet module settings on the compute nodes, run pdsh dmesg | grep e1000.

At some point you may want to change the MTU (Maximum Transmission Unit, the largest packet the interface will send). To accomplish this, first change the MTU line in the files /vnfs/kronos/etc/sysconfig/network-scripts/ifcfg-eth1 and /etc/sysconfig/network-scripts/ifcfg-eth1 to the value that you want. The first file is for the VNFS and the second is for the master node. Note that the kronos VNFS ifcfg-eth1 file may not exist. If so, just create the file and add the MTU setting, like this:

MTU=6000

MTU sizes normally range from 1500 (the default) to 9000 bytes. The easiest way for this change to take effect is to rebuild the VNFS as we did above and then reset eth1 on the master node.

# ifdown eth1
# ifup eth1

After this, you can reboot the compute nodes using pdsh (see above) and watch the progress using wwtop. Don't forget to enter wwnode.sync when the nodes are ready (showing "NOACCESS"). The nodes should then be back up and ready for production. You can check the MTU setting on the master node with ifconfig eth1 | grep MTU and on the compute nodes with pdsh /sbin/ifconfig eth1 | grep MTU.
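The MTU edit touches two files and is easy to mistype, so a helper that checks the value before writing it may be handy. This is a minimal sketch: set_mtu is a hypothetical name, the 1500-9000 bounds are simply the range quoted above, and it assumes the ifcfg files use plain KEY=value lines and that GNU sed is available.

```shell
# set_mtu FILE VALUE  (hypothetical helper)
# Set MTU=VALUE in an ifcfg-style file, replacing an existing MTU= line or
# appending one.  The file is created if it does not exist yet.
set_mtu() {
    file=$1
    mtu=$2
    case $mtu in
        ''|*[!0-9]*) echo "set_mtu: '$mtu' is not a number" >&2; return 1 ;;
    esac
    # 1500-9000 is the usual range; anything else is probably a typo.
    if [ "$mtu" -lt 1500 ] || [ "$mtu" -gt 9000 ]; then
        echo "set_mtu: $mtu is outside 1500-9000" >&2
        return 1
    fi
    touch "$file" || return 1
    if grep -q '^MTU=' "$file"; then
        sed -i "s/^MTU=.*/MTU=$mtu/" "$file"
    else
        printf 'MTU=%s\n' "$mtu" >> "$file"
    fi
}

# Example (as root on the master node):
#   set_mtu /vnfs/kronos/etc/sysconfig/network-scripts/ifcfg-eth1 6000
#   set_mtu /etc/sysconfig/network-scripts/ifcfg-eth1 6000
```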

These two exercises should give you a feel for making changes to both the master node and the compute nodes (through changing the VNFS).

Sun Grid Engine

Since we share kronos, we also chose to install a queuing/scheduling system to avoid stepping on each other's toes. We chose Sun Grid Engine. We needed to patch SGE to work with Fedora Core 2, so you can download the patched version from the Kronos download page. Before we install SGE, we need to install the Korn shell (ksh), since SGE requires it. You can grab a fairly recent copy off the web or your Fedora CDs, or simply use yum.

# yum install pdksh

Next install the Grid Engine package that you downloaded from Cluster Monkey:

# rpm -i gridengine-5.3p6-8.caos.i386.rpm

If you are not using FC2, you may want to rebuild the RPM. Source RPMs are available on the Kronos download page as well.

The installation of SGE will print out a bunch of diagnostic messages. SGE requires some further customization before it can be used. Fortunately, it includes a script to handle this procedure. Change to the /sge directory and enter the following command.

# ./inst_sge -m -fast

You can select the default answers for most of the questions. When you come to "Adding Grid Engine hosts", you will be required to supply a file or manually enter the names of the hosts. It is probably convenient to create a list of nodes in a file for this purpose (open another terminal to make the list). There is one thing to consider at this point, however. If you want to include the master node as an execution host (meaning it will be used to run programs), then include it in the list (use the name kronos). If you do not, the list should contain only the seven node names (kronos00-kronos06). After entering your nodes, there are a few more questions to answer (the default answers will suffice).

SGE is a large package with plenty of documentation. Consult the Resources Sidebar for more information. There is also a graphical front end called qmon that you can use to change some of the settings and add nodes.

Installing SGE on the Nodes

The previous section only installs SGE on the master node (the hard part). We also have to install a small piece of SGE on the compute nodes. However, like many things, it requires some RPMs to be installed first. SGE on the compute nodes requires the strings command, which comes with the binutils package. You can use wget to grab the rpm.

# wget http://download.fedora.redhat.com/pub/fedora/linux/core/2/i386/os/Fedora/RPMS/binutils-2.15.90.0.3-5.i386.rpm

A couple of notes are in order. First, we are not using yum because we do not want to install this package on the master node (it may be installed there already); we only want it in the VNFS, so we download the rpm from the Fedora site. Second, check the download site: as this article was being written, Fedora Core 2 was about to move over to the Fedora Legacy project, which means the URL may change.

Next we need to install the rpms for binutils and Grid Engine into the Kronos VNFS. Execution nodes use a separate rpm for Grid Engine as they do not need all the files on the head node. Grab the gridengine-node rpm from the Kronos download page. There are a couple of ways to do this, but we're going to use the --root option of the rpm command to install it in the correct location.

# rpm -i --root /vnfs/kronos/ binutils-2.15.90.0.3-5.i386.rpm
# rpm -i --root /vnfs/kronos/ gridengine-node-5.3p6-7.caos.i386.rpm

Note that we have used the --root option for rpm to define a new root for the install path. You can also administer (add/delete/query) the VNFS rpms using the --root option. We now have binutils and SGE for the nodes installed in the Kronos VNFS (getting closer!). To save space on the nodes, the SGE rpm installed on the nodes doesn't include the basic SGE commands that we might need, so we're going to NFS export the directory /sge to the compute nodes.

First, open the file /etc/exports on the master node and add the following line to the end of the file.

/sge 10.0.0.0/255.255.255.0(rw,no_root_squash)

Then enter the command exportfs -r, which re-exports all of the directories listed in /etc/exports. Next, open the file /etc/warewulf/vnfs/fstab and uncomment the following line.

#%{sharedfs ipaddr}:/sge /sge nfs rw,bg,rsize=8192,wsize=8192 0 0

To uncomment it, just remove the "#" character.
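The fstab edit can also be done with sed, which is handy if you rebuild the template often. The sketch assumes the line appears exactly as shown above (a "#" immediately followed by %{sharedfs ipaddr}:/sge); uncomment_sge_mount is a hypothetical helper name and GNU sed is assumed for -i. After exportfs -r, running showmount -e on the master node should list /sge.

```shell
# uncomment_sge_mount FSTAB  (hypothetical helper)
# Strip the leading "#" from the commented-out /sge NFS line in the Warewulf
# fstab template, leaving every other line untouched.
uncomment_sge_mount() {
    sed -i 's|^#[[:space:]]*\(%{sharedfs ipaddr}:/sge[[:space:]]\)|\1|' "$1"
}

# Example (as root on the master node):
#   uncomment_sge_mount /etc/warewulf/vnfs/fstab
```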

We also need to slightly modify which files get excluded when the VNFS is built (excluding files makes the ramdisk image smaller). This task is accomplished by using a "VNFS excludes" file. We are using excludes-aggressive to keep our ramdisk as small as possible. In the file /etc/warewulf/vnfs/excludes-aggressive, add the following line

+ usr/lib/libbfd-2.15.90.0.3.so

after this line:

usr/lib/libpopt.[^a]*

Finally, we're ready to rebuild our Kronos VNFS! As before, enter the commands to rebuild the VNFS, reboot the nodes, and distribute the user accounts.
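Edits where a line must go right after a specific existing line are easy to script as well, and the same approach works for any later additions to excludes-aggressive. The sketch below is a hypothetical helper (insert_after is our name); it matches the anchor as a plain string prefix rather than a regex, so the brackets and asterisk in usr/lib/libpopt.[^a]* need no escaping. Values containing backslashes are not supported, because awk -v interprets escape sequences.

```shell
# insert_after FILE ANCHOR LINE  (hypothetical helper)
# Insert LINE directly after the first line of FILE that begins with ANCHOR.
# Does nothing if LINE is already present; fails without changing FILE if no
# line begins with ANCHOR.
insert_after() {
    file=$1
    anchor=$2
    newline=$3
    grep -qxF -- "$newline" "$file" && return 0
    if awk -v a="$anchor" -v n="$newline" '
            { print }
            !done && index($0, a) == 1 { print n; done = 1 }
            END { exit !done }
        ' "$file" > "$file.tmp"; then
        cat "$file.tmp" > "$file"    # keeps the original file's owner/mode
        rm -f "$file.tmp"
    else
        rm -f "$file.tmp"
        echo "insert_after: no line starting with '$anchor' in $file" >&2
        return 1
    fi
}

# Example (as root on the master node):
#   insert_after /etc/warewulf/vnfs/excludes-aggressive \
#       'usr/lib/libpopt.' '+ usr/lib/libbfd-2.15.90.0.3.so'
```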

Final SGE Configuration

After the compute nodes come back up (you can use wwtop to check this) and you have used the wwnode.sync command to propagate the accounts to the nodes, go to the /sge/utils directory on the master node. Run the following command.

Sidebar Two: What, No Ganglia?

Ganglia is often associated with administering a cluster. While Ganglia is a great package, it can be a bit resource intensive. We prefer to use lightweight "top like" tools to check on the cluster status. Both wwtop and userstat are great tools for watching the work queue and loads on the cluster with minimal overhead. But don't worry, we'll provide instructions on how to install Ganglia in the future (or maybe something better).

# ./install_cluster.sh kronos00 kronos01 kronos02 kronos03 kronos04 kronos05 kronos06

This command will configure SGE to allow kronos00 through kronos06 to act as "execution" hosts that run actual jobs. You can check that SGE finds all of your nodes by running qhost and inspecting the output. If you are not seeing your nodes, check the value cluster web page and the SGE documentation for more information. If you want to allow the master node to also run jobs, run the following command on the master node.

# ./install_execd -fast -auto

You will also need to install the rsh-server rpm on the master node to allow rsh connections from the nodes. We will address this below.

Install UserStat

If you have ever used a batch scheduler, you have probably seen some kind of qstat (queue status) command. These utilities are usually command line programs that provide a snapshot of the queue. To make this kind of information more accessible, there is a nice "top like" utility for viewing SGE queue status and processor loads. If you download the userstat rpm from the Kronos download page (http://www.clustermonkey.net/download/kronos/), you only need to install it on the master node (rpm -i userstat-1.0-2.i386.rpm).

While you are at it, you may wish to download the sge-tests.tgz tar file from the Kronos download page (in the src directory). This file contains some simple test scripts for SGE. Extract the file in a working directory and consult the README.

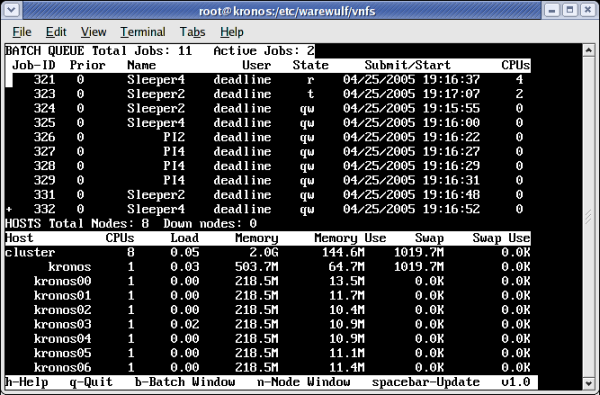

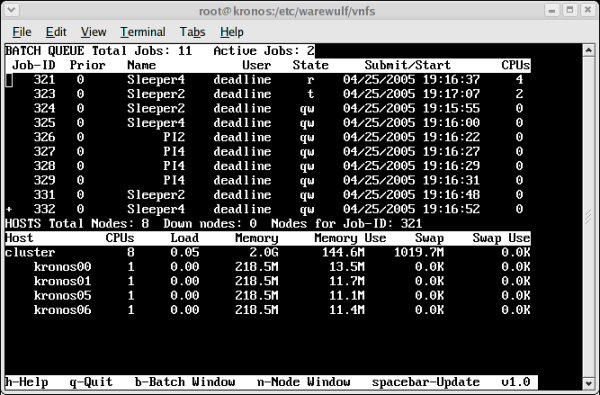

Userstat is helpful in two ways. First, as shown in Figure Two, it provides (in the top of the window) a list of jobs in the queue (running and waiting). Second, the lower window shows the load and memory usage for the cluster. If you highlight a running job in the top window and press Enter, only the nodes used by that job are shown in the lower window. An example of this display is shown in Figure Three. Userstat is designed for both administrators and users. Indeed, it provides a very low overhead way for users to watch their jobs.

Install LAM

At this point, we hope we haven't worn you out. We have the VNFS tuned the way we want it, and we have installed and tested SGE for queuing/scheduling jobs. Since we are going to want to run codes on the cluster, it is a good time to install an MPI. We chose to install LAM/MPI on the cluster. Grab a copy of LAM from the Warewulf download site and build the binary rpms using the command rpmbuild --rebuild lam-7.0.6-7.caos.src.rpm.

This process will create a number of rpms that should be installed on the master node as follows (look in /usr/src/redhat/RPMS/i386 for the files).

# rpm -i lam-7.0.6-7.caos.i386.rpm
# rpm -i lam-devel-7.0.6-7.caos.i386.rpm
# rpm -i lam-docs-7.0.6-7.caos.i386.rpm
# rpm -i lam-extras-7.0.6-7.caos.i386.rpm

The installation on the nodes is a bit simpler. As before, we will install directly to the kronos VNFS.

# rpm -i --root /vnfs/kronos/ lam-7.0.6-7.caos.i386.rpm

Again, since we changed the VNFS, we need to rebuild it and reboot the nodes with the new image (see above).

Testing LAM/MPI

Now that LAM is installed, let's test it to make sure it's installed correctly. Since it's always good to do testing as a user and not as root, make sure you have created a user account on the master node. If not, create one now. After you create a user account, be sure to propagate (as root) the account information to all of the nodes using the wwnode.sync command.

Log in as the user and create a subdirectory for testing LAM. Download the lam-tests.tgz tar file from the Kronos download page (look in the src directory). After you have extracted the file, check lam-test/pi/README for instructions on how to compile and run both C and Fortran MPI programs. Also note that the Fortran programs must be linked statically, because not all the required libraries are in the VNFS.

For these tests, we're going to run the code outside of SGE, so we need to create a file named lamnodes with a list of the nodes to be used for the run. One way to do this is to run the following command.

wwnode.list -r -q > lamnodes

However, this only gets you a list of the compute nodes. If you want to use the master node as part of the LAM network, edit the file to add kronos to the beginning of the file.
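If you want the master node included, the list can be built in one step instead of editing the file afterward, and the LAM session itself is only a few commands. This is a sketch, not the procedure from the lam-test README: make_lamnodes is a hypothetical helper, ./pi stands in for whatever program you built, and lamboot, mpirun C and lamhalt are the standard LAM 7 tools (check their man pages for your version).

```shell
# make_lamnodes [MASTER]  (hypothetical helper)
# Copy a node list from stdin to stdout, optionally putting MASTER first so
# the master node also takes part in the LAM run.
make_lamnodes() {
    if [ -n "${1:-}" ]; then
        printf '%s\n' "$1"
    fi
    cat
}

# Example session as a normal user (./pi is a placeholder program name):
#   wwnode.list -r -q | make_lamnodes kronos > lamnodes
#   lamboot -v lamnodes    # start LAM daemons on every host in the file
#   mpirun C ./pi          # run one process per CPU LAM knows about
#   lamhalt                # shut the LAM daemons down again
```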

Integrating LAM into SGE

In order to keep our ramdisk small, we used the excludes-aggressive file. The key word here is "aggressive". We need to include a few libraries for LAM that were excluded from our ramdisk. Open up the /etc/warewulf/vnfs/excludes-aggressive file and add the following lines

+ usr/lib/libkrb4.so.*
+ usr/lib/libkrb5.so.*
+ usr/lib/libdes425.so.*
+ usr/lib/libk5crypto.so.*

after this line:

usr/lib/libpopt.[^a]*

While we are at it, let's get rid of the unused Kerberos files. Just add the line

/usr/kerberos

to the end of the vnfs/excludes-aggressive file. The one other thing we need to do is add rsh to the nodes. For some reason the VNFS did not include rsh, which is needed by LAM/MPI when it is run by SGE. (SGE may start LAM jobs on a subset of the worker nodes that does not include the master node. LAM needs rsh to start its daemons on remote nodes. When we ran LAM from the master node, which has rsh installed, it was able to start daemons on any node.) You can grab the rpm from the Fedora Core site using the following command.

# wget -c http://download.fedora.redhat.com/pub/fedora/linux/core/2/i386/os/Fedora/RPMS/rsh-0.17-21.i386.rpm

Then, as before, install it in the kronos VNFS.

# rpm -i --root /vnfs/kronos/ rsh-0.17-21.i386.rpm

Of course, you need to rebuild the VNFS and reboot the nodes again. If you would like to use the master node as an SGE execution node, you will also need to install and configure the rsh-server rpm. Finally, lam-tests.tgz (see above) includes some SGE tests that can be used to test LAM and SGE integration. See the lam-test/sge/README file, and be sure to look at the SGE submission scripts for more information.
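For reference, here is the general shape of an SGE job script that starts LAM on the nodes SGE assigns. Treat it as a sketch under stated assumptions rather than a replacement for the scripts in lam-test/sge: it assumes a parallel environment named lam has been set up in SGE (the name is our placeholder) and that ./pi is your test program. It relies on SGE's PE_HOSTFILE, whose lines begin with a host name and a slot count, and turns each line into a LAM boot schema entry of the form "host cpu=N".

```shell
#!/bin/sh
# Sketch of an SGE job script for a LAM/MPI program.
# Assumptions: an SGE parallel environment called "lam" exists (placeholder
# name) and ./pi is the MPI test program you compiled.
#$ -S /bin/sh
#$ -cwd
#$ -pe lam 4

# SGE lists the hosts granted to the job in $PE_HOSTFILE, one per line:
#   hostname slots queue processor-range
# LAM's boot schema accepts "hostname cpu=N", so keep the first two columns.
pe_to_lamboot() {
    awk 'NF >= 2 { print $1 " cpu=" $2 }' "$1"
}

# Only do the real work when running inside an SGE parallel job.
if [ -n "${PE_HOSTFILE:-}" ]; then
    schema=${TMPDIR:-/tmp}/lamhosts.$$
    pe_to_lamboot "$PE_HOSTFILE" > "$schema"
    lamboot -v "$schema"    # start LAM daemons on the granted hosts
    mpirun C ./pi           # one process per CPU listed in the schema
    lamhalt                 # stop the daemons before the job ends
    rm -f "$schema"
fi
```

Save it under any name you like (for example lam-pi.sh, a hypothetical name), submit it with qsub, and watch it with userstat.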

Beowulf Performance Suite

Now that we have the essential programs installed and running, we can give our cluster the once-over. Download the Beowulf Performance Suite rpm, bps-1.3-1.i386.rpm, from the Kronos download page. The bps package needs two other packages (gnuplot and expect). Simply use yum to install these packages and then install the bps rpm.

# yum install gnuplot
# yum install expect
# rpm -i bps-1.3-1.i386.rpm

The bps package is a collection of tests for a cluster, ranging from memory bandwidth tests to the NAS Parallel Benchmark suite. You can get information on how to use the bps suite from A Tool for Cluster Performance Tuning and Optimization. The results for Kronos are here. We will have more next time on how to run these and other programs. And yes, we will be describing how to run HPL, the Top500 benchmark.

Sidebar: Resources

Kronos Downloads Page

Warewulf

PDSH

LAM-MPI

Sun Grid Engine

Sun Grid Engine for Warewulf

Intel Gigabit Adapter Optimization

Douglas Eadline is the head Monkey at Clustermonkeys and was the editor of ClusterWorld Magazine. Jeffrey Layton is Doug's loyal sidekick and works for Linux Networx during the day and fights cluster crime at night.