Nested Strided

A spatial access pattern related to simple strided is the nested strided access pattern. It accounts for about 33% of the codes examined for parallel I/O. As with the simple strided spatial access pattern, the following temporal patterns were examined: "Nested Strided (read)", "Nested Strided (write)", "Nested Strided (re-read)", "Nested Strided (re-write)", and "Nested Strided (read-modify-write)". The input file is very similar to the simple strided case.
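To make the pattern concrete, here is a minimal sketch that lists the file offsets a single process would touch. It assumes the usual meaning of "nested strided": a strided group of records that is itself repeated at a larger outer stride. The function name and all of its parameters are hypothetical and chosen for illustration; they are not taken from PIObench or its input file.

```python
def nested_strided_offsets(start, inner_count, inner_stride,
                           outer_count, outer_stride):
    """Return the byte offsets of each record in a nested strided pattern.

    Assumed layout: `inner_count` records spaced `inner_stride` bytes apart
    form one group, and the group repeats `outer_count` times, with
    consecutive groups starting `outer_stride` bytes apart.
    """
    offsets = []
    for outer in range(outer_count):        # outer (slow) stride
        group_start = start + outer * outer_stride
        for inner in range(inner_count):    # inner (fast) stride
            offsets.append(group_start + inner * inner_stride)
    return offsets


# Two groups of three records: records 8 bytes apart, groups 100 bytes apart.
offsets = nested_strided_offsets(start=0, inner_count=3, inner_stride=8,
                                 outer_count=2, outer_stride=100)
print(offsets)  # [0, 8, 16, 100, 108, 116]
```

Setting `outer_count` to 1 reduces this to the simple strided case, which is one way to see why the two patterns behave similarly.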

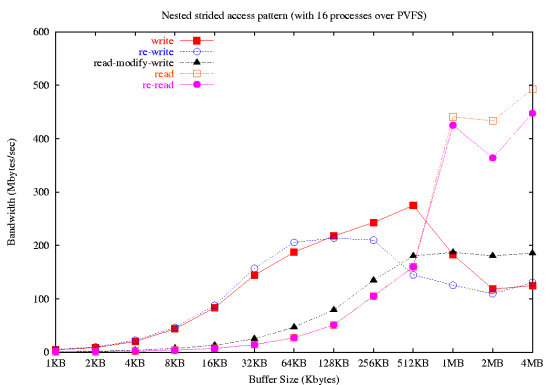

The results for the nested strided case can be seen in Figure Two, which plots bandwidth (MB/sec) versus buffer size (KB).

The shape of the graph is about the same as for the simple strided access pattern. However, the bandwidth values are lower.

Unstructured Mesh

The unstructured mesh access pattern is one that many CFD (Computational Fluid Dynamics) codes as well as terrain mapping codes use. For this spatial access pattern, only two of the temporal access patterns were tested: "Unstructured Mesh (read)" and "Unstructured Mesh (write)".

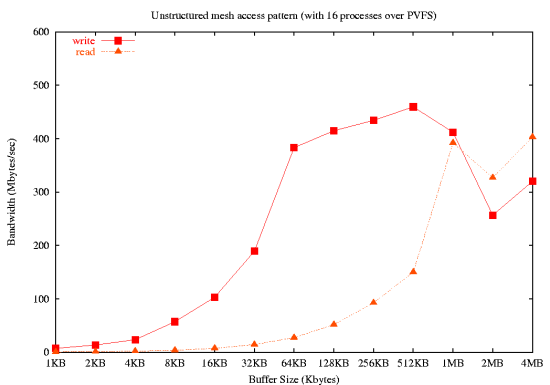

The PIObench results can be seen in Figure Three, which shows bandwidth in MB/sec versus buffer size in KB.

In this case, the buffer size corresponds to the number of vertices as well as the number of values per vertex (ValuesPerRecord in the input file).

At each vertex, the values are packed into a contiguous buffer, so varying the number of vertices or the number of values per vertex simply changes the amount of contiguous data each process accesses. This is easily accommodated with MPI-IO by using file views and collective I/O.
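The packing step can be sketched as follows. This is an illustration only, not PIObench code: the `pack_vertices` helper and the sample mesh are hypothetical, and it assumes every value is stored as a C `double`. It shows that the buffer size is just the number of vertices times the values per vertex times the size of one value.

```python
from array import array


def pack_vertices(vertex_values):
    """Pack per-vertex value lists into one contiguous buffer of doubles.

    `vertex_values` is a list with one entry per vertex; each entry holds
    that vertex's values (the count per vertex plays the role of
    ValuesPerRecord in the text). The 'd' typecode is an assumption.
    """
    buf = array("d")
    for values in vertex_values:
        buf.extend(values)  # values for one vertex stay adjacent
    return buf


# Hypothetical mesh fragment: 2 vertices, 3 values per vertex.
mesh = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
buf = pack_vertices(mesh)

# Buffer size in bytes = vertices * values per vertex * bytes per value.
nbytes = len(mesh) * len(mesh[0]) * buf.itemsize
print(len(buf), nbytes)
```

Doubling either the vertex count or the values per vertex doubles `nbytes`, which is why both knobs show up as a change in buffer size on the plot.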

The general shape of the curve is similar to that of the simple strided access pattern. The write case peaks at about 450 MB/sec using a 512 KB buffer size. The read case peaks at about 400 MB/sec for buffer sizes of 1 MB and greater.

What Next?

It is my hope that PIObench sees more widespread use. Its flexibility and sheer number of parameters make it an ideal benchmark. The ClusterMonkey Benchmark Project (CMBP) will be using PIObench as part of its benchmark collection.

If you run PIObench on your parallel file system, I would appreciate an email discussing your experience with it and possibly some results.

| Sidebar One: Links Mentioned in Column |
| --- |
| Frank Shorter's Thesis "Design and Analysis of a Performance Evaluation Standard for Parallel File" |

This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Dr. Jeff Layton hopes to someday have a 20 TB file system in his home computer (donations gladly accepted). He can sometimes be found lounging at a nearby Fry's, dreaming of hardware and drinking coffee (but never during working hours).