Ever hear of an exabyte?

In the second part of File Systems O'Plenty we take a look at NAS, Distributed File Systems, AoE, iSCSI, and Parallel File Systems. In case you missed part one you can find it here. In this part, we will also point out why IO is important in HPC clustering. Many a CPU cycle is wasted waiting for that data block. Read on to learn how to feed your data appetite.

[Editor's note: This article represents the second in a series on cluster file systems (See Part One: The Basics, Taxonomy and NFS and Part Three: Object Based Storage). As the technology continues to change, Jeff has done his best to "snapshot" the state of the art. If products are missing or specifications are out of date, then please contact Jeff or myself. In addition, because the article is in three parts, we will continue the figure numbering from part one. Personally, I want to stress the magnitude and difficulty of this undertaking and thank Jeff for the amount of work he put into this series.]

NAS

In the previous section I talked about NFS including its history and recent developments, but it is just a protocol and a file system. Vendors have taken NFS and put storage behind it to create a device that is commonly called a NAS (Network Attached Storage) device. Figure Five shows you the basic layout of a NAS device.

The compute nodes at the top connect to a NAS Head, sometimes called a filer head, through some type of network. The filer head is attached to some kind of storage. The storage is in the device itself or attached to the filer head, possibly through SAN storage or through some other network storage device such as iSCSI or HyperSCSI. The filer head exports the storage at the file level typically using NFS or in some cases, CIFS (usually for compatibility with desktops). There are a large number of manufacturers of NAS systems. For example,

You can even create your own NAS system using a simple PC running Linux and stuffing it full of disks. Many people have done this before (I have done it in my own home system). You can use software RAID or hardware RAID with the disks and then use whatever network connection you want to connect the NAS to the network. Using NFS or CIFS (Samba) allows you to export the file system and mount it on the clients. The only cost is the hardware and your time since NFS is part of Linux and is a standard (the fact that it's a standard is not to be understated). However, home NAS boxes may not be the best solution for larger clusters.

While NAS devices are "easy" in the sense that they have been around for a long time and are easy to understand, easy to manage, and easy to debug, they are not without their problems and limitations. The first limitation is that a simple single NAS can only scale to a moderate number of clients (tens or at most hundreds of clients) before performance becomes so poor that you're better off using smoke signals or drums to send data. Second, the scalability in terms of capacity is somewhat limited. In the case of a small NAS box (or one that you put together), you are limited by how many drives you can stuff into a single box. If you use something like LVM, then you can add storage at a later date and expand the file system to use it. With 1 TB hard drives here, you should be able to create a very large file system on the NAS. For example, it should be fairly easy to put about 8-10 drives in a single case, which will give you on the order of 8-10 TB of raw storage if you use 1 TB drives (at last! Room enough for my K.C. and the Sunshine Band MP3s!). But heed the warning about data corruption that I previously discussed.

To help improve NAS devices, NAS vendors have developed large, robust storage systems within their offerings. They have taken the basic NAS concept and put some serious hardware behind it. They have support for large file systems. They have also developed hardware that helps improve the storage performance behind the filer head, allowing more clients to use the storage. For the most part NAS vendors use a single GigE or multiple GigE lines from the filer head to the client network. This limited network connection to the clients restricts the amount of data they can push from the filer head to the clients. With the advent of NFS-RDMA, as discussed previously, it should be possible to push even more data from a single filer head (Mellanox is saying that you can get 1.3 GB/s reads and 600 MB/s writes from a single NFS/RDMA filer head), but you have to have an InfiniBand network to the clients. Despite the increased IO performance from NFS-RDMA, there is still a limit on the total amount of data that can be sent to or received from a single filer head.

In addition to being limited in performance scaling, NAS devices are also limited in capacity scaling. If you run out of capacity (space), a NAS device can be expanded as long as you are below the capacity limit of the device. But if you hit that limit then you cannot expand the capacity. In that case, you have to add another NAS device that is separate from the current NAS device(s). But the file systems on all of the NAS devices cannot be combined into a single file system. So now you have these "islands" of storage. What do you do?

One option is to arrange the NFS mounts on the client nodes to make it "appear" as a single file system. In essence you have to "nest" the file systems. But these tricks can be risky and if one NAS device isn't available you typically cannot get to the other devices. This can also lead to a load imbalance.

The other option is to split the load across several filer heads. You can split the load based on the number of users, the activity level of the users, the number of jobs, the amount of storage, or just about any combination you can think of to help load balance the storage. Even if you plan ahead when you get your first NAS device, you will find that how you planned to split the load will still result in a load imbalance. The fundamental reason is that the load, the number of users, the size of the projects, etc. will change over time, and once you have put the load distribution in place, you can't easily change it. At this point one option is to take down the storage, move the data around to better load balance, and then bring the system back up. If the users don't surround your desk armed with pitchforks and torches, this process can work. However, you may have to do it fairly often depending upon how the load changes and how fast you can run.
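One simple way to sketch an initial split is a greedy assignment that always places the next-largest project on the least-loaded filer head. This is only an illustration: the project names, sizes, and the `assign_to_filers` helper are hypothetical, and it balances on storage size alone, which will drift over time as the load changes.

```python
import heapq


def assign_to_filers(project_sizes_tb, n_filers):
    """Greedy split: largest project first, always onto the least-loaded filer.

    project_sizes_tb maps a (hypothetical) project name to its size in TB.
    Returns (assignment, loads), both keyed by filer index.
    """
    # Min-heap of (current load in TB, filer index).
    heap = [(0.0, i) for i in range(n_filers)]
    heapq.heapify(heap)
    assignment = {i: [] for i in range(n_filers)}
    loads = {i: 0.0 for i in range(n_filers)}

    by_size = sorted(project_sizes_tb.items(), key=lambda kv: kv[1], reverse=True)
    for name, size in by_size:
        load, idx = heapq.heappop(heap)  # least-loaded filer so far
        assignment[idx].append(name)
        loads[idx] = load + size
        heapq.heappush(heap, (loads[idx], idx))
    return assignment, loads


if __name__ == "__main__":
    # Made-up project sizes, purely for illustration.
    projects = {"cfd": 5.0, "genomics": 4.0, "weather": 3.0, "scratch": 2.0}
    assignment, loads = assign_to_filers(projects, n_filers=2)
    print(assignment)
    print(loads)
```

Re-running the same calculation with current sizes a few months later is a cheap way to see how far the real distribution has drifted from the plan.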

Now that I've exposed the warts of NAS devices, the next logical question is when you should use them and when you should not. I'll give you my stock answer - it depends. You need to look at 3 things: (1) size of the cluster, (2) IO requirements of the application(s), and (3) capacity requirements. If your cluster is small enough that the NAS device can support all of the clients, then you've satisfied the first requirement. I've seen clusters as large as several hundred nodes that can be effectively served by a NAS box.

The second requirement is the IO needs of your applications. You need to know how much IO your applications require for good performance (you can define "good" however you want). For example, if you need 20 MB/s from 20 nodes to maintain a certain level of performance then you need to make sure the NAS box can deliver this amount of IO throughput. The interesting thing is that I've seen people who have absolutely no idea how much IO their application needs to maintain a certain level of performance yet they will specify a certain amount of IO performance in their cluster. I have also seen people who think they know the IO requirements of their codes but they actually don't, and they also specify the amount of IO for their cluster. You would be surprised by the number of applications that don't need much IO or don't need the global IO that NAS devices provide. I highly recommend taking some time to test your applications and learn their IO requirements and patterns.
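The 20-node example can be turned into a quick back-of-the-envelope check. The sketch below assumes the 20 MB/s figure is per node, that all nodes do IO at the same time, and that one GigE link delivers about 100 MB/s of usable bandwidth; the function names are made up for illustration.

```python
import math

# Assumed usable rate of a single GigE link, in MB/s (illustrative figure).
GIGE_MB_PER_S = 100.0


def aggregate_io_mb_s(nodes, per_node_mb_s):
    """Total throughput the NAS must sustain if every node does IO at once."""
    return nodes * per_node_mb_s


def gige_links_needed(required_mb_s, link_mb_s=GIGE_MB_PER_S):
    """Smallest number of links whose combined rate covers the requirement."""
    return math.ceil(required_mb_s / link_mb_s)


if __name__ == "__main__":
    required = aggregate_io_mb_s(nodes=20, per_node_mb_s=20.0)
    print(f"aggregate requirement: {required:.0f} MB/s")           # 400 MB/s
    print(f"GigE links needed: {gige_links_needed(required)}")      # 4
```

Under those assumptions a single GigE line from the filer head would not be enough, which is exactly the kind of thing you want to know before you buy.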

And finally, if you think your overall capacity requirements are below the maximum that the NAS devices offer, then it should work well. But be sure to estimate your storage capacity for the life of the machine (about 3 years). Also, remember to factor in how much your storage needs grow every year.
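A minimal sketch of that capacity estimate is shown below. It assumes storage use compounds at a fixed yearly rate, which is a simplification, and the starting size, growth rate, and NAS limit in the example are made-up numbers.

```python
def projected_capacity_tb(current_tb, annual_growth, years=3):
    """Capacity needed after `years`, assuming compound yearly growth.

    annual_growth is a fraction, e.g. 0.5 for 50% growth per year.
    """
    return current_tb * (1.0 + annual_growth) ** years


def fits_on_nas(nas_max_tb, current_tb, annual_growth, years=3):
    """True if the projected need stays at or below the NAS capacity limit."""
    return projected_capacity_tb(current_tb, annual_growth, years) <= nas_max_tb


if __name__ == "__main__":
    # Hypothetical: 10 TB today, growing 50% per year, over a 3-year machine life.
    need = projected_capacity_tb(current_tb=10.0, annual_growth=0.5, years=3)
    print(f"projected need: {need:.2f} TB")
    print("fits on a 30 TB NAS:", fits_on_nas(30.0, 10.0, 0.5))
```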

I hate to give you rules of thumb for all 3 requirements for NAS usage because as soon as I do, someone will come up with a counterexample that proves me wrong. I have my own rules of thumb for a range of applications and so far they have worked well. But there are some application areas I don't know as well, so I'm not sure if my rules will apply or not. I highly advise that you learn your applications and develop your own rules of thumb. When you do, please send me some email (address is at the end of the article) and tell me about your rules of thumb.

Before I finish with NAS devices, I want to give a short summary with some pros and cons for them.

- Pros:

- Easy to configure, manage (Plug and Play for the most part)

- Well understood (easy to debug)

- Client comes with every version of Linux

- Client is free

- Can be cost effective

- Provides enough IO for many applications

- May be enough capacity for your needs

- Cons:

- Limited aggregate performance

- Limited capacity scalability

- May not provide enough capacity

- Potential load imbalance (if you use multiple NAS devices)

- "Islands" of storage are created if you have multiple NAS devices

If it looks like a simple NAS box won't meet your requirements, the next section will present an approach to NAS that tries to improve the load imbalance problem and to help the scalability.

Clustered NAS

Since NAS boxes only have a single server (single filer head), Clustered NAS systems were developed to make NAS systems more scalable and to give them more performance. A Clustered NAS uses several filer heads instead of a single one. Typically either the filer heads are connected to storage via a private network or the storage may be directly attached to each filer head.

There are two primary architectures for Clustered NAS systems. In the first architecture, there are several filer heads, each with some storage assigned to it. A filer head cannot directly access data that is not on its own storage, but all of the filer heads know which one has which data. When a data request from a client comes into a filer head, that filer head determines where the data is located (which filer head owns it). Then it contacts the filer head that owns the data using a private storage network. The filer head that owns the data retrieves the data and sends it over the private storage network to the originating filer head, which then sends the data to the client.

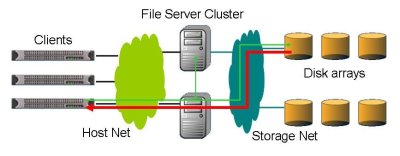

Figure Six below illustrates this process of getting data to a client in a Clustered NAS environment.

The green line represents the data request from the client. It goes to the filer head that it has mounted. That filer head checks if the requested data is in its attached storage. In this case it is not, so it forwards the request to the filer head that owns the data. This filer head then retrieves the data and sends it back to the originating filer head (the red line). The originating filer head then sends the data back to the client. This process is true whether the data function is a read or a write.

This Clustered NAS architecture, sometimes called a forwarding model, was one of the first clustered NAS approaches. It was a fairly easy approach to develop since it's really several single head NAS devices that know about the data that are owned by each filer head. The metadata needs to be modified so that each filer head knows where the data is located. Basically an NFS data request is made from the originating filer head to the filer head that owns the requested data. The data is returned to the originating filer head which then forwards it to the requesting client. Figure Seven below illustrates this process from a file perspective (don't forget that NFS views data as files).

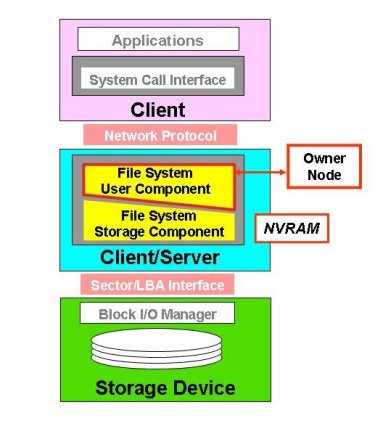

In Figure Seven, the client requests 3 files (the triangles). The files are placed on two different filer heads, so the data requests are fulfilled by two different filer heads. Regardless, all of the data has to be sent through the originating filer head, which could limit performance. Also notice that this is still an in-band data model, as shown in Figure Eight below.

Recall that in an in-band data model, all of the data, including the metadata, has to flow through a single point. In the forwarding model, when the client request gets to the user component of the file system layer, the metadata is checked for the location of the data. Then the data request is sent to the filer head that owns the data (called a node in Figure Eight). However, the stack is still an in-band model where all of the data has to flow through a single server.
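The forwarding model can be sketched as a toy simulation. Everything here is hypothetical (the `FilerHead` class, its `location_map`, and the file names); it only mimics the data flow described above, not any vendor's implementation, and a direct method call stands in for the private storage network.

```python
class FilerHead:
    """Toy filer head for the forwarding model (illustrative only)."""

    def __init__(self, name):
        self.name = name
        self.local_files = {}     # files on this head's own storage
        self.location_map = {}    # shared metadata: file name -> owning head
        self.bytes_to_client = 0  # bytes this head has sent to clients

    def store(self, filename, data, cluster):
        """Store a file locally and tell every head in the cluster who owns it."""
        self.local_files[filename] = data
        for head in cluster:
            head.location_map[filename] = self

    def read_local(self, filename):
        return self.local_files[filename]

    def handle_client_read(self, filename):
        """Serve a client read, forwarding to the owner when needed."""
        owner = self.location_map[filename]
        if owner is self:
            data = self.read_local(filename)
        else:
            # Stand-in for the request over the private storage network.
            data = owner.read_local(filename)
        # In-band: the data always returns through the originating head.
        self.bytes_to_client += len(data)
        return data


if __name__ == "__main__":
    head_a, head_b = FilerHead("A"), FilerHead("B")
    cluster = [head_a, head_b]
    head_a.store("file1", b"aaaa", cluster)
    head_b.store("file2", b"bbbbbb", cluster)
    head_b.store("file3", b"cc", cluster)

    # The client has mounted head A and asks it for all three files.
    for name in ("file1", "file2", "file3"):
        head_a.handle_client_read(name)
    print(head_a.bytes_to_client, head_b.bytes_to_client)
```

Even though two of the three files live on head B, every byte the client receives is counted against head A, which is the bottleneck the in-band model implies.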

In a second architecture, sometimes called a Hybrid architecture, the filer heads are really gateways from the clients to a parallel file system. For these types of systems, there are filer heads (gateways) that communicate with the client using NFS over the client network but access the parallel file system on a private storage network. The gateways may or may not have storage attached to them depending upon the specifics of the system. Figure Nine below illustrates the data flow process for this architecture.

In this model, the client makes a data request that is sent to a file server node which is really a gateway node. The gateway node then gathers the data from the storage on behalf of the requesting client (this is shown as the green line in Figure Nine). Once all of the data is assembled for the client, it is sent back to the client from the originating gateway node (this is shown in red in Figure Nine). Contrast this figure with Figure Six and you can see how the hybrid model differs from the forwarding model.

The Hybrid architecture of a Clustered NAS is a bit different from the forwarding architecture from the perspective of the storage as well. Figure Ten below shows how the data is stored and how it flows through the storage system.

The client requests the data from one of the file servers. The originating file server then gets the data from the storage, assembles it, and returns it to the requesting client. But there is a significant difference in how the data is stored in this architecture. The data is actually stored on storage hardware that is not necessarily assigned to one of the file servers. You can see this in Figure Ten. The data is distributed in pieces across the storage, unlike the forwarding model where the entire file is stored with one file server or another.

A benefit of the Hybrid architecture is that it allows the originating file server to retrieve the requested data in parallel, speeding the data retrieval operation, particularly compared to the Forwarding Architecture. But, the file servers still send data to the clients using NFS and most likely using GigE. So the client performance is still limited by their NFS performance.
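To make the contrast with the forwarding model concrete, here is a toy sketch of a gateway pulling the pieces of a file from several storage servers in parallel and reassembling them. The round-robin placement, the tiny stripe size, and the function names are assumptions chosen for illustration; real systems choose their own layouts.

```python
from concurrent.futures import ThreadPoolExecutor

STRIPE_SIZE = 4  # bytes per piece; unrealistically small, for illustration


def stripe(data, n_servers, stripe_size=STRIPE_SIZE):
    """Distribute pieces of `data` round-robin across storage servers.

    Each server is modeled as a dict mapping piece index -> piece bytes.
    """
    servers = [dict() for _ in range(n_servers)]
    for index, offset in enumerate(range(0, len(data), stripe_size)):
        servers[index % n_servers][index] = data[offset:offset + stripe_size]
    return servers


def gateway_read(servers):
    """Fetch the pieces from every server in parallel, then reassemble in order."""

    def fetch(server):
        # Stand-in for a read over the private storage network.
        return list(server.items())

    with ThreadPoolExecutor(max_workers=len(servers)) as pool:
        pieces = [p for chunk in pool.map(fetch, servers) for p in chunk]
    # Piece indices are unique, so sorting restores the original order.
    return b"".join(piece for _, piece in sorted(pieces))


if __name__ == "__main__":
    original = b"The quick brown fox jumps over the lazy dog"
    servers = stripe(original, n_servers=3)
    assert gateway_read(servers) == original
    print("pieces per server:", [len(s) for s in servers])
```

The parallelism here is only between the gateway and the storage. The assembled file still goes back to the client over a single NFS connection.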

The Hybrid architecture has another benefit in that the file system capacity can be scaled fairly large by just adding storage servers to the storage network. In addition, you can gain aggregate performance by just adding more gateways to the file system (basically you are adding more file servers).

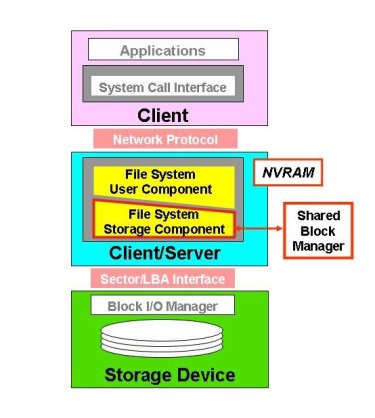

However, despite the ability to scale capacity and to scale aggregate performance there are a few difficulties. Figure Eleven below is the protocol stack for the hybrid model.

The data requests from the client come down the stack until they reach the File System Storage Component layer. Then the data request is sent through a shared block manager so that the data can be retrieved from the various storage devices. Then the data is sent back up the stack to the client.

From Figure Eleven you can see that the Hybrid architecture is still an in-band architecture with data that has to flow through a single server to the client. While the Hybrid Clustered NAS can scale performance and capacity, there are still limits to performance. Each client only communicates with one server. Consequently, there is no ability to introduce parallelism between the client and the data. There is parallelism from the file server to the file system, but there is still a bottleneck from the file server to the client. Moreover, you are still using NFS to communicate between the client and the file server (gateway). This limits the possible performance to approximately 90-100 MB/s per client over GigE. However, if you have fewer gateways than clients, this number is reduced because you have multiple clients contacting a single gateway. For example, if you have 128 clients and only 12 gateways and all of the clients are performing IO, then the best per-client bandwidth is approximately 9.4 MB/s (12 gateways at roughly 100 MB/s each, shared among 128 clients). The only way to improve performance is to greatly increase the number of gateways, increasing costs. On the plus side, many applications don't need much IO performance so a low gateway/client ratio may work well enough.
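The per-client figure is easy to reproduce. The sketch below assumes each gateway has a single GigE link delivering about 100 MB/s, that clients are spread evenly over the gateways, and that every client does IO at once; `per_client_mb_s` is a made-up helper name.

```python
def per_client_mb_s(clients, gateways, gateway_mb_s=100.0):
    """Best-case bandwidth per client when all clients do IO simultaneously.

    Assumes an even spread of clients over gateways and one link per gateway.
    A client can never exceed the rate of the single gateway it talks to.
    """
    shared = gateways * gateway_mb_s / clients
    return min(gateway_mb_s, shared)


if __name__ == "__main__":
    print(f"{per_client_mb_s(clients=128, gateways=12):.3f} MB/s")   # 9.375 MB/s
    print(f"{per_client_mb_s(clients=128, gateways=128):.3f} MB/s")  # 100.000 MB/s
```

Trying a few gateway counts shows how quickly the cost of buying back per-client bandwidth adds up.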

There are some inherent difficulties in a hybrid architecture though. Most of them are due to the design decisions in a Hybrid Clustered NAS. For example, the storage layer must synchronize the block-level access among the gateways that share the file system. This requirement means that there will be a high level of traffic on the storage network and the gateways will have to spend a fair amount of time on the synchronization, as there are a large number of blocks to handle. On a 500 GB device, there are about 1 billion 512-byte sectors. On 50 TB, there are about 100 billion sectors. On a 500 TB system there are about 1 trillion sectors. One final difficulty is that the low-level interface imposes more overhead in a distributed system. For example, a write() system call involves several block-level IO operations because the storage is distributed and the metadata has to be updated globally. This can restrict write traffic.
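The sector counts follow directly from dividing the capacity by the sector size. A quick check, assuming decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes):

```python
SECTOR_BYTES = 512
GB = 10**9   # decimal gigabyte (assumption)
TB = 10**12  # decimal terabyte (assumption)


def sector_count(capacity_bytes, sector_bytes=SECTOR_BYTES):
    """Number of whole sectors in a device of the given capacity."""
    return capacity_bytes // sector_bytes


if __name__ == "__main__":
    for label, capacity in (("500 GB", 500 * GB),
                            ("50 TB", 50 * TB),
                            ("500 TB", 500 * TB)):
        print(f"{label:>7}: {sector_count(capacity):,} sectors")
    # 500 GB: 976,562,500 sectors
    #  50 TB: 97,656,250,000 sectors
    # 500 TB: 976,562,500,000 sectors
```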

But on the bright side, there is a very "narrow" interface around block ownership and allocation. This interface is somewhat restricted, but it makes it fairly easy to modify existing local file systems into a parallel file system or a Clustered NAS. For example, XFS was used to create CXFS. Terrascale, now called Rapidscale, initially did this with ext3, and then XFS.

As with the simple NAS device, I want to summarize the Clustered NAS, whether it uses a Forwarding architecture or a Hybrid architecture, with some pros and cons:

- Pros:

- Usually a much more scalable file system than other NAS models

- Only one file server is used for the data flow (forwarding model could potentially use all of the file servers)

- Uses NFS as protocol between client and file server (gateway)

- Many applications don't need large amounts of IO for good performance (can use a low gateway/client ratio)

- Cons:

- Can have scalability problems (block allocation and write traffic)

- Load balancing problems

- Need a high gateway/client ratio for good performance

Now that we've explored the two types of Clustered NAS devices, let's explore some of the specific vendor offerings for both types.