Benchmarking Parallel File Systems

In the last column I spent quite a bit of time explaining the design of a new set of benchmarks for parallel file systems called PIObench. I discussed critical topics such as timing, memory access patterns, disk access patterns, and usage patterns. Because of limits on how much I can write in a single column, I didn't cover some other items, such as the influence of disk caching, and I won't cover them in this column either. If you are interested in these topics, take a look at Frank Shorter's PIObench thesis.

In this article I want to talk about building PIObench and then using it to test a parallel file system.

Building PIObench

PIObench is available from the PARL website. It is very easy to build, and the README has excellent instructions. If you're having trouble with the README, the Makefile is very simple and clear. The only requirement for building PIObench is a working mpicc, which means you need an MPI implementation built and installed. More specifically, you need an MPI that conforms to MPI-2, or at least one that supports MPI-IO.

To build the code, just do the following:

```
% tar -xzf pio-bench.tar.gz
% make
```

The resulting executable is called pio-bench.

Inputs to PIObench

Recall that the PIObench benchmark, as currently written, includes a number of common spatial access patterns: simple strided, nested strided, random strided, sequential access, segmented access, tiled, flash I/O kernel, and unstructured mesh. The benchmark has a fairly simple "language" for specifying which patterns to run and the parameters each pattern needs. The benchmark looks for a configuration file called, oddly enough, pio-bench.conf. It then parses the configuration, which consists of directives in this "language", and runs the tests.

The language closely resembles Apache-style configuration files, and PIObench uses the Dotconf parser to read it. The language is used to specify the timing configuration as well as the configuration of each access pattern. Global configuration parameters are set using the same configuration file format.

There are six possible global timing directives: TestFile, ShowAverage, ReportOpenTime, ReportCloseTime, ReportHeaderOffset, and SyncWrites. These are placed at the top of the configuration file. I won't go over these directives in this column. The details are in Frank's thesis on the PARL website (see the sidebar for more information).

After specifying the global directives at the top of the input file, you can use the access pattern module directives. These are fairly simple; Listing One uses three of them: ModuleName, ModuleReps, and ModuleSettleTime. The value given to ModuleName must match one of the access patterns that have been compiled into the benchmark.

An example of an overall configuration file is in Listing One.

```
# Example configuration file for PIObench
TestFile "/mnt/pvfs/file_under_test"

ModuleName "Simple Strided(write)"
ModuleReps 100
ModuleSettleTime 30

ModuleName "Nested Strided(re-write)"
ModuleReps 50
ModuleSettleTime 10
```

Comments can be made by using the pound symbol "#" before a statement.
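PIObench itself relies on the Dotconf parser, so the following is not its code. It is a minimal Python sketch of how directives like those in Listing One can be read, assuming one directive per line, an optional double-quoted value, "#" comments, and the convention (taken from Listing One) that each ModuleName opens a new module block whose following directives belong to it. The function name parse_pio_conf is hypothetical.

```python
import shlex

def parse_pio_conf(text):
    """Split PIObench-style directives into globals and per-module blocks.

    Simplified illustration, not Dotconf: one directive per line, an
    optional double-quoted value, '#' comments, and every ModuleName
    starting a new module block.
    """
    global_directives = {}
    modules = []
    for line in text.splitlines():
        # posix-mode shlex strips the quotes; comments=True drops '#' to end of line
        tokens = shlex.split(line, comments=True)
        if not tokens:
            continue  # blank line or comment-only line
        name, value = tokens[0], " ".join(tokens[1:])
        if name == "ModuleName":
            modules.append({"ModuleName": value})
        elif modules:
            modules[-1][name] = value  # belongs to the most recent module
        else:
            global_directives[name] = value  # appears before any module
    return global_directives, modules
```

Applied to Listing One, this sketch yields one global directive (TestFile) and two module blocks; values are kept as strings, so a caller would convert ModuleReps and ModuleSettleTime to integers as needed.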

You have to run the pio-bench code using the appropriate launcher for the MPI implementation you used. For example, if you used MPICH, you would launch it with mpirun.

Outputs

The outputs from PIObench are very simple. The output will give you details about what access pattern was used, what spatial pattern was used, the resulting operations performed, the time it took, and finally, the bandwidth.

Test Machine

In Frank's thesis, he presents some very comprehensive testing of a parallel file system. He ran the tests on a 16-node development cluster. Each node had dual Intel Pentium III/1 GHz processors with 1 GB of memory, two Maxtor 30 GB hard drives, an Intel Express Pro 10/100 Fast Ethernet card, and a high-speed Myrinet network card. Each node was running Red Hat 8.0 with a 2.4.20 kernel. The cluster was also using PVFS (Parallel Virtual File System) 1.5.7.

The cluster had four mounted file systems. Two of them used all 16 nodes as PVFS I/O daemons (16 IODs); one used Myrinet as the interconnect and the other used Fast Ethernet. The other two PVFS file systems used only 4 of the nodes (4 IODs). The master node of the cluster was the MGR (metadata manager) for all four PVFS file systems. The stripe size for PVFS was set to 64 KB (kilobytes), and MPICH 1.2.5.1a was used for all of the testing.

The tests were conducted a few years ago, so the hardware and software are a bit dated. Nonetheless, the results show how useful PIObench is for measuring the performance of a parallel file system. They also illustrate how easily you can run many types of access patterns.

Test Inputs

Each test was run 50 times (directive ModuleReps 50 in the module directive section of the input file). The defaults were used for the parameters of each spatial access pattern, except for the buffer size and the number of work units.

The total amount of data transferred during a test is the buffer size (the size of a single I/O request) multiplied by the number of I/O requests (termed work units). However, Mr. Shorter was interested in the effect of varying the size of an individual I/O request, so the results are plotted versus buffer size rather than versus the total amount of data transferred.
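The relationship between buffer size, work units, and bandwidth is simple arithmetic. The sketch below assumes, as the paragraph states, that the work units count every I/O request in the test, and that 1 MB means 2**20 bytes; PIObench's own unit convention is not given here, so treat that as an assumption. The function names are hypothetical.

```python
def total_bytes(buffer_size, work_units):
    """Data moved by one test: size of one I/O request times number of requests."""
    return buffer_size * work_units

def bandwidth_mb_per_s(nbytes, seconds):
    """Bandwidth in MB/s, assuming 1 MB = 2**20 bytes."""
    return nbytes / seconds / 2**20
```

For example, a 64 KB buffer and 1,024 work units move 64 MB; if that takes 0.5 seconds, bandwidth_mb_per_s(total_bytes(64 * 1024, 1024), 0.5) returns 128.0. Because the work units are held constant in these tests, doubling the buffer size also doubles the total data moved.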

Results

Mr. Shorter's thesis contains a great many results, exercising each of the access patterns built into the current version of PIObench. I don't have room to discuss all of them, so I'll focus on three: Simple Strided, Nested Strided, and Unstructured Mesh.

For each spatial access pattern, the tests were run 50 times and the resulting time was averaged. The amount of data in an I/O request, termed the buffer size, was varied from a small number to a larger number and the number of work units was held constant. The resulting bandwidth was computed and plotted versus buffer size.
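The averaging-and-sweep procedure can be sketched as a small post-processing step. It takes timings you have already measured (for instance, one per ModuleReps repetition) keyed by buffer size, averages them, converts each average to bandwidth with the work units held fixed, and reports the buffer size at which bandwidth peaks. This is a hypothetical helper, not part of PIObench, and it assumes 1 MB = 2**20 bytes.

```python
from statistics import mean

def bandwidth_curve(times_by_buffer, work_units):
    """Return {buffer_size: MB/s} from repeated timings.

    times_by_buffer maps a buffer size in bytes to a list of elapsed
    seconds, one per repetition. Repetitions are averaged first, then the
    fixed number of work units gives the total bytes moved.
    Assumes 1 MB = 2**20 bytes.
    """
    curve = {}
    for buffer_size in sorted(times_by_buffer):
        avg_seconds = mean(times_by_buffer[buffer_size])
        curve[buffer_size] = buffer_size * work_units / avg_seconds / 2**20
    return curve

def peak(curve):
    """Return (buffer_size, bandwidth) for the highest bandwidth in the curve."""
    return max(curve.items(), key=lambda item: item[1])
```

Averaging the times before dividing (rather than averaging per-run bandwidths) matches the description above, where the resulting time was averaged and the bandwidth computed from it.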

Simple Strided

The first pattern considered is Simple Strided, since it accounts for about 66% of the codes that use parallel I/O. The following patterns (ModuleName in the benchmark input file) were examined: "Simple Strided (read)", "Simple Strided (write)", "Simple Strided (re-read)", "Simple Strided (re-write)", and "Simple Strided (read-modify-write)". These are the five temporal access patterns discussed in the previous column.
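To make the spatial pattern concrete, here is one common formulation of simple strided access, assuming each of nprocs processes issues count requests of buffer_size bytes and the processes' blocks interleave round-robin through the file. PIObench's module may parameterize the stride differently; the function and argument names are hypothetical.

```python
def simple_strided_offsets(rank, nprocs, buffer_size, count):
    """File offsets for one process in an interleaved simple strided pattern.

    Assumed layout: the file is cut into buffer_size blocks dealt out
    round-robin, so this rank's k-th request starts at
    (k * nprocs + rank) * buffer_size.
    """
    stride = nprocs * buffer_size  # bytes between this rank's consecutive blocks
    return [rank * buffer_size + k * stride for k in range(count)]
```

With four processes and 100-byte buffers, rank 1 touches offsets 100, 500, 900, and so on, and the four ranks together cover the file contiguously.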

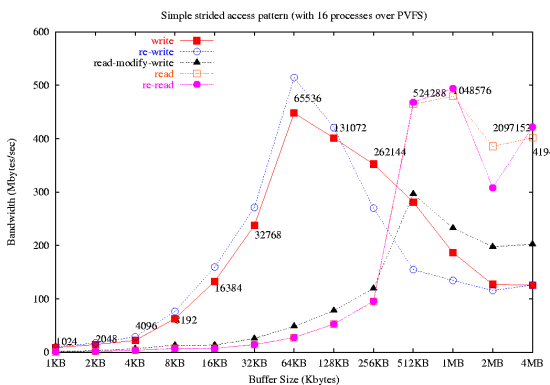

The results for the simple strided spatial access pattern can be seen in Figure One.

Notice in the graph that the maximum write performance, about 450 MB/s, for this parallel file system (PVFS) was achieved with a buffer size of 64 KB (the same as the stripe size used in PVFS). Read performance peaked at about 490 MB/s with a buffer size of about 1 MB.
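One way to think about the 64 KB result is to look at how a request maps onto the I/O daemons. The sketch below uses a simplified round-robin striping model (first stripe on IOD 0, no base offset), with defaults matching the 64 KB stripe size and the 16-IOD file systems described earlier. It only shows which IODs a single contiguous request touches; it is not a model of PVFS performance and does not by itself explain the measured peaks. The function name is hypothetical.

```python
def iods_touched(offset, length, stripe_size=64 * 1024, n_iods=16):
    """Return the set of IODs that one contiguous request touches.

    Simplified round-robin striping: stripe s (bytes s*stripe_size up to
    (s+1)*stripe_size - 1) lives on IOD s % n_iods, with the first stripe
    on IOD 0 and no base offset.
    """
    first_stripe = offset // stripe_size
    last_stripe = (offset + length - 1) // stripe_size
    return {s % n_iods for s in range(first_stripe, last_stripe + 1)}
```

Under this model, a stripe-aligned 64 KB request lands on a single IOD, while a 1 MB request (16 stripes of 64 KB) spans all 16 IODs. A misaligned 64 KB request straddles two IODs. For the 4-IOD file systems, pass n_iods=4.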

Nested Strided

A spatial access pattern related to simple strided is nested strided, which accounts for about 33% of the codes examined for parallel I/O. As with the simple strided pattern, the following temporal patterns were examined: "Nested Strided (read)", "Nested Strided (write)", "Nested Strided (re-read)", "Nested Strided (re-write)", and "Nested Strided (read-modify-write)". The input file is very similar to the one for the simple strided case.
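A nested strided pattern adds a second level of striding: each element of an outer strided sequence is itself a strided sequence. The sketch below generates the offsets for one process under that two-level assumption; the parameter names are hypothetical, and PIObench's module may describe the same shape with different parameters.

```python
def nested_strided_offsets(base, inner_stride, inner_count, outer_stride, outer_count):
    """Offsets for a two-level (nested) strided access by one process.

    outer_count groups start outer_stride bytes apart; within each group,
    inner_count requests start inner_stride bytes apart.
    """
    return [base + i * outer_stride + j * inner_stride
            for i in range(outer_count)      # outer (coarse) stride
            for j in range(inner_count)]     # inner (fine) stride
```

For instance, two outer groups 1000 bytes apart, each with three requests 100 bytes apart, give offsets 0, 100, 200, 1000, 1100, 1200.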

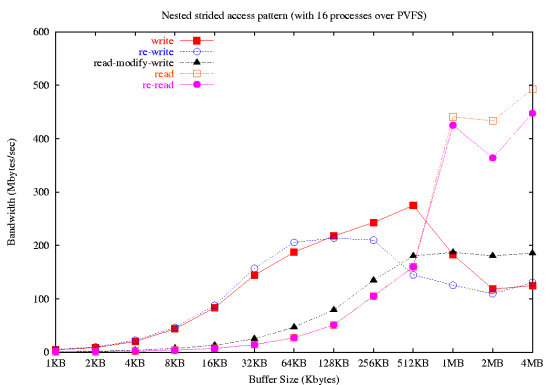

The results for the nested strided case can be seen in Figure Two which plots Bandwidth (MB/sec) versus buffer size (KB).

The shape of the graph is about the same as for the simple strided access pattern, but the bandwidths are lower.

Unstructured Mesh

The unstructured mesh access pattern is one that many CFD (Computational Fluid Dynamics) codes as well as terrain mapping codes use. For this spatial access pattern, only two of the temporal access patterns were tested: "Unstructured Mesh (read)" and "Unstructured Mesh (write)".

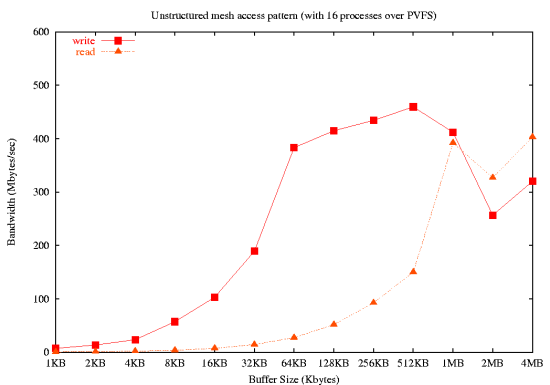

The PIObench results are shown in Figure Three, which plots bandwidth in MB/sec versus buffer size in KB.

In this case, the buffer size is determined by both the number of vertices and the number of values per vertex (ValuesPerRecord in the input file).

At each vertex, the values are packed into a contiguous buffer, so increasing the number of vertices or the number of values per vertex simply increases the amount of contiguous data each process accesses. MPI-IO file views combined with collective I/O handle this pattern easily.
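The packing described above can be sketched in Python. The example assumes each value is an 8-byte double stored little-endian (the actual element type used by PIObench is not stated here), so a process's buffer is simply the number of vertices times ValuesPerRecord times 8 bytes. Both function names are hypothetical.

```python
import struct

def mesh_buffer_bytes(n_vertices, values_per_record, bytes_per_value=8):
    """Size of one process's contiguous buffer: vertices * values * value size.

    bytes_per_value=8 assumes double-precision values.
    """
    return n_vertices * values_per_record * bytes_per_value

def pack_vertices(records):
    """Pack per-vertex value lists back to back as little-endian doubles."""
    flat = [x for rec in records for x in rec]  # vertex 0's values, then vertex 1's, ...
    return struct.pack(f"<{len(flat)}d", *flat)
```

Two vertices with three values each pack into 48 contiguous bytes, matching mesh_buffer_bytes(2, 3); growing either count just lengthens that single contiguous buffer.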

The general shape of the curve is similar to that of the simple strided access pattern. The write case peaks at about 450 MB/sec with a 512 KB buffer size. The read case peaks at about 400 MB/sec for buffer sizes of 1 MB and greater.

What Next?

I hope PIObench sees more widespread use. Its flexibility and the sheer number of parameters make it an ideal benchmark. The ClusterMonkey Benchmark Project (CMBP) will be using PIObench as part of its benchmark collection.

If you run PIObench on your parallel file system, I would appreciate an email describing your experience with it and, if possible, some results.

Sidebar One: Links Mentioned in Column

Frank Shorter's Thesis "Design and Analysis of a Performance Evaluation Standard for Parallel File"

This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Dr. Jeff Layton hopes to someday have a 20 TB file system in his home computer (donations gladly accepted). He can sometimes be found lounging at a nearby Fry's, dreaming of hardware and drinking coffee (but never during working hours).