Storage: it's where we put things

Clusters have become the dominant type of HPC system, but that doesn't mean they are perfect (sounds like a Dr. Phil show, doesn't it?). While you get a huge bang for the buck from them, somehow you have to get the data to and from the processors. Moreover, some applications have fairly benign IO requirements and others need really large amounts of IO. Regardless of your IO requirements, you will need some type of file system for your cluster.

I wrote a file system/storage survey article for clusters in the past, but as always things change rather rapidly in the HPC arena. Originally, I had wanted to update that article, but the updates became so large that this is really an entirely new article. So this article, I hope, is a bit more in depth and a bit more helpful than the past file system article.

[Editor's note: This article represents the first in a series on cluster file systems (See Part Two: NAS, AoE, iSCSI, and more! and Part Three: Object Based Storage). As the technology continues to change, Jeff has done his best to "snap shot" the state of the art. If products are missing or specifications are out of date, then please contact Jeff or myself. Personally, I want to stress the magnitude and difficulty of this undertaking and thank Jeff for the amount of work he put into this series.]

Introduction

Storage has become a very important part of clusters and is likely to become even more important as problem sizes grow. For example, the size of CFD meshes grows by almost a factor of 2 every year. As the problem size grows, the file size grows as well. Very soon CFD solutions will become large enough that transferring them to and from a user's desktop will consume too much time, and post-processing them on a desktop may fail because they no longer fit in memory. The best idea then is to visualize, manipulate, and post-process the results where they were created - on the cluster. CFD is but one example of the ever increasing need for more storage and higher speed storage.

In a 2007 presentation at ISC (the International Supercomputing Conference) in Dresden, IDC pointed out that storage and data management are growing in importance. In a survey that IDC conducted, 92% of the people who responded said they have applications that are constrained by IO. Also, 48% of the people who responded said that they were constrained by total file size. This number grew to 60% in three years. So based on the IDC study, it looks like high speed data storage, particularly parallel file systems, for clusters will become increasingly important.

This article will only be discussing distributed and parallel file systems for clusters. I won't be discussing typical Linux file systems such as XFS, ext4, ext3, ext2, Reiserfs, Reiser4, JFS, BTRFS, etc. These file systems are what I think of as local file systems. That is, the physical storage for these systems can be Direct Attached Storage (DAS) or part of a Storage Area Network (SAN). I also won't be discussing file system tools such as LVM, EVMS, md, etc. A discussion of these tools is better left to an article on local file systems.

Moreover, where possible this article will not focus on the hardware part of cluster storage, but rather, it will focus on file systems or parallel storage solutions. The hardware will only be discussed when it is part of an overall solution and can't be separated from the file system. I may disappoint people by not discussing which hard drive manufacturer is better, which type of hard drive is better (hint: SATA drives have the same "failure rate" as Fibre Channel (FC) and SCSI drives), or which RAID card is better, or which RAID level is best for certain workloads, etc. as these questions aren't really the focus of this article.

Due to time considerations, I can only touch on a few file systems. Please don't be alarmed if your favorite is not in this article. It's not intended to dissuade anyone from considering or using that file system. Rather, it was just a choice I made to cut the size of this article and to finish it in a reasonable amount of time. I have tried to cover the popular systems, but alas popularity depends on application area as well. Finally, the discussion of a file system is not to be considered an endorsement by me or ClusterMonkey. If you think your file system deserves more attention, by all means, contact me (my contact link is at the end of the article) and tell me how you use it and why it works for you. And, ClusterMonkey is always looking for cutting edge writers.

In an effort to scratch the proverbial IO itch, this series of articles is designed as a high level survey that just touches upon file systems (and file system issues) used by clusters. My goal is to at least give you some ideas of available file system options and some links that allow you to investigate. Anything else starts to look like a dissertation and I already did one of those. Before I start, however, I want to discuss some of the enabling technologies for high performance parallel file systems.

Enabling Technologies for Cluster File Systems

I think there are a number of enabling technologies that help make parallel file systems a much more prevalent technology in today's clusters. These technologies are:

- High-speed networks with a large bandwidth, such as InfiniBand

- Multi-core processors

- MPI2 (MPI-IO)

- NFS

You may scoff at some of these, but let me try to explain why I think they are enabling technologies. If you disagree or if you can think of other technologies please let me know.

As I discussed in another article, InfiniBand (IB) has very good performance and the price has been steadily dropping. DDR (Double Data Rate) InfiniBand is now pretty much the standard for all IB systems, replacing Single Data Rate (SDR). DDR IB has a theoretical bandwidth of 20 Gbps (Giga-bits per second), a latency less than 3 microseconds (depending upon how you measure it), an N/2 of about 110 bytes and a very high message rate. The price has dropped to about $1,000-$1,400 per port (average costs including switching and cables). In addition, Myrinet 10GigE is also a contender in this space, as is 10GigE (if the price ever comes down). But at the same time, most applications don't need all of that bandwidth. Even with the added communication needs of multi-core processors, many applications will only use a fraction of the bandwidth available in IB or 10GigE. To make the best of all the interconnect capability, vendors and customers are using the leftover bandwidth to feed the data appetite of cluster nodes. So they are running both the computational traffic and the IO traffic over the same network.

In my opinion, another enabling technology is the multi-core processor. People may disagree with me on this issue, but let me explain why I think multi-core processors can be, in a sense, an enabling technology. The plethora of cores could be very useful in terms of IO. There may be opportunities to use one or more cores per socket to do nothing but IO. With this concept you dedicate a core per node to IO processing. This processor is also programmable (it's a CPU), and very fast compared to other processors such as those on RAID controllers or other aspects of storage. So it gives you a great deal of flexibility. I think it might be possible to dedicate one of the CPUs solely to IO processing, freeing the other cores on the system for computing.

Imagine a dual-socket, quad-core node that has a total of 8 cores. Just a few years ago you had at most 2 cores per node. That means only 2 cores had to share a Network Interface Card (NIC) or share access to local storage. Now you have 8 cores vying for the same resources. When each core performs IO you will have 8 cores trying to push data through the same NIC. But what if you could write an application where 7 of the cores did computational processing and one of the cores did all of the IO for the node? When one of the 7 cores needed to perform IO, particularly writes, it would just pin the memory holding the data and pass the memory address to the eighth core. Then this eighth core performs the IO while the other seven cores continue processing. When finished with the IO, the eighth core could just release the memory back to the other cores. The HPC community has always talked about overlapping communication and computation. Now you can begin to talk about overlapping computation and IO.
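To make the "one core does the IO" idea a little more concrete, here is a minimal sketch in Python. It is only an illustration of the structure, not how any particular application does it: a thread stands in for the dedicated core, a bounded queue stands in for handing over the pinned memory, and the names `io_worker`, `compute_step`, and `run` are made up for this example. Python threads also share one interpreter lock, so this shows the hand-off pattern rather than real performance.

```python
import os
import queue
import threading


def io_worker(path, work_queue):
    """Dedicated IO thread: drain buffers from the queue and write them out."""
    with open(path, "wb") as f:
        while True:
            buf = work_queue.get()
            if buf is None:  # sentinel: the compute side has finished
                break
            f.write(buf)


def compute_step(step, size=1024):
    """Stand-in for real computation: produce one buffer of results."""
    return bytes([step % 256]) * size


def run(path, n_steps=8):
    """Compute n_steps buffers while a separate thread writes them to path."""
    # Bounded queue, so the compute side cannot run arbitrarily far ahead
    # of the writer and exhaust memory.
    work_queue = queue.Queue(maxsize=4)
    writer = threading.Thread(target=io_worker, args=(path, work_queue))
    writer.start()
    for step in range(n_steps):
        buf = compute_step(step)
        work_queue.put(buf)  # hand the buffer off, then go back to computing
    work_queue.put(None)     # tell the writer there is no more data
    writer.join()
    return os.path.getsize(path)
```

Calling `run("results.bin")` (the file name is arbitrary) computes eight buffers while the writer thread pushes them to disk, and returns the number of bytes written.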

However, while I think there is something to the idea of overlapping computation and IO, I think the most important reason multi-core processors are an enabling technology is because of the IO demand they can create. As I previously mentioned, just a few years ago we had at most two cores per node. Now we can easily have eight and soon we will have sixteen (AMD's quad-core CPU with 4 sockets per board or dual-socket Intel Nehalem CPUs with 8 cores per socket in 2008). With this many processors you may have to use a faster network so communication does not become a huge bottleneck (e.g. InfiniBand). So now you've got IB to solve your computational communication problem with lots of cores. As I previously mentioned, you can take advantage of the large amount of bandwidth that is leftover for IO. So multi-core processors could easily drive IO demand in this fashion. It's something of a backwards argument since the multi-core chips are not really driving IO requirements. But since they are driving high speed interconnects on each node, they create the opportunity to put a high-speed storage system on the same network.

In addition, with multi-core processors becoming so prevalent (soon you won't even be able to find new single core processors) you will see people start to run jobs with more and more cores. Just a couple of years ago you would have two cores per node and run, perhaps on 8-16 nodes, for a total of 16-32 processors. Now with 8-16 nodes you can get 64-128 cores for about the same hardware price. People will want to run their jobs across more processors and they are going to want to run larger problems. Larger problems and problems across more cores can lead to more IO being required. So again, multi-core processors could help drive up IO requirements.

I think another important enabling technology is MPI-2 which now includes something called MPI-IO. This is an addition to the MPI standard that covers IO functions. Prior to MPI-2, writing IO in a high-speed and portable manner was very ad-hoc. You could use normal POSIX functions but this was difficult to do effectively if you had complicated data structures or unstructured data. In addition, people sometimes used proprietary toolkits to write data on a parallel file system, thus making portability an issue. With MPI-2, you now had a set of functions that are portable (as long as the MPI implementation was compliant with MPI-2 or at the very least MPI-IO), high performance, and easy to use for complicated data structures or unstructured data. Being part of the MPI standard did not hurt things since most parallel applications used MPI. Now you have a portable way to do IO for MPI codes.

The MPI-2 committee was also very kind to the storage vendors. They created a method where you could pass "hints" to the underlying file system or MPI layer to take advantage of any special features of the storage. If you built your application for one storage platform and used hints, you will have to change it for another storage platform. This change is usually just a few lines of code (less than 10 in general). So portability has not been adversely affected and you get to take advantage of the storage.

Finally, one enabling technology that I think people forget about is the file system itself. Clusters need a file system that at the very least can be used by all nodes in the cluster. This capability allows a parallel application running on one node to access the same data set as all of the other nodes. But, just as important as having a common file system for all of the nodes is that the file system should be standard and, if at all possible, part of the distribution itself.

If a file system is a standard and part of the OS, then it becomes possible for the hardware of various vendors to work together. This combination includes the hardware of the node and the network. So if I take hardware from vendor X and hardware from vendor Y and plug them into a network, then they should be able to communicate. This is pretty much a given in today's world. But, equally important is the ability for the hardware from vendor X and Y to share data (files). This situation means you can shop for the best price, or the best set of features, or the best support, or any other criteria, and the hardware/OS combination should be able to share data.

The only true distributed file system standard today is NFS. NFS was and is one of the enabling technologies for clusters: because it is a standard, it allows different hardware/OS combinations to share data. In fact, a huge number of hardware and software vendors get together at Connectathon every year to test their interoperability, particularly for NFS. Without NFS we probably would have a difficult time with IO for clusters since we would have multiple implementations that would be vendor dependent.

You don't have to use NFS for data sharing in a cluster, however. As long as you use hardware and software in a cluster that all of the nodes have in common, you can use a proprietary file system. This situation does restrict you in some cases to a single vendor. But it also means that you can take advantage of a high performance file system in your cluster. What many of the vendors of proprietary cluster file systems have done is to also provide NFS gateways so you can at least get to the file system using a standard protocol such as NFS.

However, if you read further in this article, you will see that there is about to be a new file system standard that is specifically designed for parallel systems (i.e. clusters).

A Balanced Storage System

One would think that storage for clusters would be a simple task. You just slap some disks together with a RAID controller, use something like NFS, and you're off to the races. You can do this, but it's not what anyone would consider a well thought out design. What qualifies as a well thought out design? In my opinion, it's what I call a "balanced" storage system.

A balanced storage system just means that the storage hardware can keep up with the amount of data from the clients and that the clients are capable of providing enough data to almost max out the storage hardware. For example, let's assume that you have 4 storage nodes that have enough disk in them to sustain about 200 MB/s in disk performance. This means that you have an aggregate of 800 MB/s in disk performance. Let's assume that the clients are connected to the storage system using GigE and a single GigE connection can achieve about 80 MB/s in IO performance.

800 MB/s / 80 MB/s = 10

This number means you need 10 clients to be able to provide enough data to keep the disk subsystem busy.

Why is achieving balance so important? I'm glad you asked. Let's return to the previous example. If you have more than 10 clients, then they are providing more than 800 MB/s of data to the storage system (if they are performing IO at the same time). So the storage hardware becomes a bottleneck and you will never get more than 800 MB/s in aggregate IO performance. This trade off may be acceptable to you, but you have introduced a bottleneck.

On the other hand, if you have fewer than 10 clients, then they cannot provide enough data to keep the storage hardware busy. For example, if you only had 6 clients, then they would provide no more than 480 MB/s (6 x 80 MB/s) in data to the storage hardware. So, you would have more disk hardware performance than you actually need. In other words, you have spent more money than you needed to keep up with the IO demands. So we can say that the storage solution is unbalanced.
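The balance arithmetic in this example is simple enough to put in a few lines of Python. This is just a sketch using the numbers from the text (4 storage nodes at 200 MB/s each, GigE clients at about 80 MB/s each); the function names are made up for illustration, and real systems will have overheads this ignores.

```python
import math


def clients_to_saturate(n_servers, server_mb_s, client_mb_s):
    """Return (aggregate disk MB/s, number of clients needed to match it)."""
    aggregate = n_servers * server_mb_s
    return aggregate, math.ceil(aggregate / client_mb_s)


def delivered_mb_s(n_clients, n_servers, server_mb_s, client_mb_s):
    """Aggregate IO rate is capped by whichever side is slower."""
    client_side = n_clients * client_mb_s
    storage_side = n_servers * server_mb_s
    return min(client_side, storage_side)


# Numbers from the example: 4 storage nodes, 200 MB/s each, 80 MB/s per client.
aggregate, balanced_clients = clients_to_saturate(4, 200, 80)
for n in (6, balanced_clients, 16):
    rate = delivered_mb_s(n, 4, 200, 80)
    print(f"{n:2d} clients -> {rate} MB/s of {aggregate} MB/s available")
```

With fewer clients than the balance point the network side is the limit, and with more clients the disks are the limit, which is exactly the trade off described above.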

To make sure you have a balanced file system you have to do some work. For example, one thing you will need to know, is the IO performance of the disk hardware. Then you will need to know how the clients are connected to the storage hardware and test that connection to determine how much data they can push onto the storage hardware. But, one of the most important things you need to determine is how much IO your applications will consume/produce.

If your applications produce a great deal of data, then it is more likely that you will need a balanced storage system. In other words, IO is a driving factor in performance and a balanced system allows you to match data requirements to hardware, saving money and taking full advantage of the hardware.

On the other hand, if your applications don't do as much IO and if IO performance is not a big driver for application performance, then you might be able to reduce the storage hardware. Let's look at a quick example.

If your applications only spend about 10% of their time on IO, then it's not as important to have a balanced storage system. The reason is that a majority of the time your applications are computing, not doing IO. If you had a balanced storage system, you would only be matching the peak IO requirements of your application. But even if you had an infinitely fast IO system, you could only cut the application run time by 10%. And we all know how much an infinitely fast storage system would cost. Perhaps it would be better to add a few more nodes, with the likelihood of increasing performance by more than 10% (This gets into cluster design where you have to know the scalability of the applications and the network, etc. This is beyond the scope of this article, sorry).
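The 10% argument is the usual Amdahl's law reasoning applied to IO. As a quick sketch (the function name is made up for this example), you can compute how much an application speeds up when only the IO portion gets faster:

```python
def speedup_from_faster_io(io_fraction, io_speedup):
    """Overall speedup when only the IO part of the run time gets faster.

    io_fraction: share of the original run time spent in IO (0.0 to 1.0)
    io_speedup:  how many times faster the IO becomes (may be float('inf'))
    """
    remaining_time = (1.0 - io_fraction) + io_fraction / io_speedup
    return 1.0 / remaining_time


# An application that spends 10% of its time in IO.
for factor in (2, 10, float("inf")):
    s = speedup_from_faster_io(0.10, factor)
    print(f"IO {factor}x faster -> run time shrinks by {100 * (1 - 1 / s):.1f}%")
```

Even with the IO speedup set to infinity, the run time can never shrink by more than the IO fraction itself, which is why extra compute nodes may be the better buy for an application like this.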

Whether you choose to have a balanced storage system or not is up to you. I personally prefer to have a balanced storage system because I know I'm taking full advantage of my hardware. But if my applications aren't doing too much IO, then I will cut back on my storage hardware since even really fast IO won't have much impact on the overall performance. Then I can buy a few more nodes.

Data Corruption - Coming to a Storage System Near You!

Everyone is absolutely paranoid about losing data and rightfully so. I don't want my collection of "KC and the Sunshine Band" MP3's to disappear. So we use RAID devices to create RAID groups that can tolerate the loss of a disk or two without losing data. But, I hate to tell you that the assumption that RAID will protect our data may not be true in the future. Let me explain why.

Today we have 1 TB disks and soon we will have 1.5 TB drives and even 2 TB drives (Maybe I can add in my collection of "Surf Punks" MP3's). A 1 TB drive has about 2 billion (2 x 10^9) sectors. The drive manufacturers claim that an Unrecoverable Read Error (URE) happens about every 1 x 10^14 bits. This converts to 24 x 10^9 sectors (assuming that we have 512 byte sectors or 512 * 8 = 4096 bits per sector).

If you divide the number of sectors between UREs by the number of sectors per disk,

24 x 10^9 / 2 x 10^9 = 12

So if you read every sector on twelve 1TB disks, you should expect to hit about one URE (plan on it happening).

So if you have a RAID-5 group of thirteen 1TB disks and you lose a disk, the RAID array starts to reconstruct by reading all of the sectors on the remaining 12 drives. In this case RAID is on a block-level so all the blocks on the remaining drives have to be read even if they don't contain any data. Therefore you are almost guaranteed that during reconstruction you will hit a URE, causing the reconstruction to fail. This means that you will have to restore the RAID group from a backup. Restoring from the backup means copying up to 12TB (the usable capacity of a thirteen-disk RAID-5 group). This could take quite a while.

RAID-6 was designed to help with this since you can lose up to 2 disks before compromising the ability to reconstruct the data. So if you have a RAID-6 group of 13 1TB disks and you lose a disk, reconstruction starts on the remaining 12 drives. But as shown previously, the probability of hitting a URE with 12, 1TB drives is nearly 1. If you lose another drive during reconstruction you are now down to 11 drives. While the probability of hitting a URE isn't 1, it's very close. If you lose another drive during reconstruction with the 11 drives, your RAID group is toast and you have to restore from a backup.

Not many people realize that if you have enough 1TB disks in a RAID group, you are almost guaranteed that you will hit a URE at some point. So creating large RAID groups for your MP3 or YouTube collection is not a good idea.
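If you want to play with the numbers for your own disk sizes, the URE arithmetic can be sketched in a few lines of Python. The function name is made up, and the probability it reports assumes every bit read fails independently at the quoted rate, which is a simplification of how real drives behave. Under that simple model the expected number of UREs for twelve 1TB disks is about one, while the chance of seeing at least one comes out somewhat lower than certainty. It is still far too high to ignore, and it climbs quickly as you add disks.

```python
import math


def ure_estimate(n_disks, disk_bytes=1e12, bits_per_ure=1e14):
    """Estimate URE exposure when reading n_disks completely.

    Returns (expected number of UREs, probability of at least one URE).
    Assumes independent bit errors at a rate of 1 per bits_per_ure bits.
    """
    bits_read = n_disks * disk_bytes * 8
    expected = bits_read / bits_per_ure
    p_bit = 1.0 / bits_per_ure
    # 1 - (1 - p)^n, written with log1p/expm1 to stay accurate for tiny p
    prob_at_least_one = -math.expm1(bits_read * math.log1p(-p_bit))
    return expected, prob_at_least_one


for disks in (4, 8, 12, 24):
    expected, prob = ure_estimate(disks)
    print(f"{disks:2d} x 1TB: expected UREs = {expected:.2f}, "
          f"P(at least one) = {prob:.2f}")
```

Changing `disk_bytes` to 2e12 shows how quickly the picture gets worse with 2 TB drives at the same URE rate.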

So what do you do? There are a few things you can do:

- Put fewer disks in the RAID group (keep the total below 10-12 TB)

- Run a RAID-1 across RAID groups

- Hope the disk vendors change the URE rate (or ask them)

- Switch to a storage scheme that doesn't require a RAID controller and RAID groups (such as object storage)

Let's talk about these options because some of them are better than others.

You can put fewer disks in a RAID group to keep the total usable space below 10-12 TB. This limits the amount of storage in a single RAID group which may or may not be a problem for you. But, if you are using NFS and these are NAS boxes, then you might end up with a whole bunch of NFS file systems being exported. This makes management a mess. So this option, while it works, is probably not the best for cluster storage.

Another option is to create a RAID group with as many drives and as much space as you want, but then use RAID-1 to mirror the group. So you would create a RAID-51 or a RAID-61 using this approach. In either case, you will be wasting 50% of your usable space. If one of the RAID groups loses a drive and then hits a URE, you can copy the data over from the other side of the RAID-1 to the affected one (presumably after the disk(s) have been restored). But the gotcha is that during the copy, you are reading all sectors from the unaffected RAID group. This means that your probability of hitting a URE is essentially 100% for a large group. So this approach, while seemingly a good one, is actually not a good idea either. But it does give you a little more protection since a RAID-51 can tolerate the loss of 2 drives, 1 on each RAID-5 group, and RAID-61 can tolerate the loss of 4 drives, 2 on each RAID-6 group, before you have to restore data from a backup.

On the other hand, if you can create more than 2 RAID groups in a RAID-1, such as using software RAID, you could copy part of the sectors from one unaffected RAID group and another part from the other unaffected RAID group. Again, not a stellar idea. So the RAID-1 approach says once again that you want to use fewer than 10-12 TB in a single RAID group.

The third option, hoping the disk vendors change the URE rate, is something that may or may not work. From what I understand, the disk vendors are capable of making disks with much smaller URE rates. But the resulting drives would be smaller in capacity and more expensive (if they're smaller you wouldn't likely hit a URE so you wouldn't even need a lower URE rate). Conversely the drive manufacturers can also make larger drives with a higher URE rate (i.e. more likely to hit a URE). But this too might not be a good idea for obvious reasons. Plus cost is always an issue. You can put pressure on the drive manufacturers, but I'm not sure they are able to respond (most definitely not able to respond quickly).

The last option is switching to a storage scheme that doesn't require a RAID controller and RAID groups. There aren't many file systems that can do this. The only one I know of is Panasas. Since Panasas is using an object based file system and a per-file RAID layout, they don't have to use RAID controllers and RAID groups. We will have more on this topic in Part Three.

I like morals. The moral of this section is, "If you're walking on egg shells, don't hop" (my apologies to Blue Thunder). More precisely, we could say, "Watch the size of your RAID group versus the URE rate." If you put too much data (too many sectors) in a single RAID group, you are almost guaranteed to encounter a URE if the group performs a RAID reconstruction. This has implications for how you architect storage as well. If you use a file system that has IO nodes (e.g. GPFS, IBRIX, Lustre), then you will have to use more IO nodes because of the limitation on the RAID group size.

Enough scary bedtime cluster stories (but you have been warned). Let's move on to the file systems themselves.

File System Introduction and Taxonomy

To make things a little bit easier I have broken file systems for clusters into two groups: Distributed File Systems, and Parallel File Systems. The difference between the file systems is that parallel file systems are exactly that, "parallel". They can utilize multiple data servers and the clients can access the data through multiple servers. On the other hand, distributed file systems use a single server for client access to the file system. The client access is not parallel because only a single server is being used, but they can give the user access to a parallel file system.

So, before we jump in and start looking at file systems for clusters, let's do some initial discussion of the data movement from the application down to the hardware. This will allow us to have a good frame of reference and will also allow me to point out differences in file systems. The diagrams you will see below illustrating the data flow are from Marc Unangst and Brent Welch at Panasas.

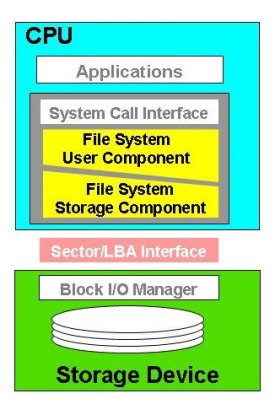

Let's start with Figure One below (courtesy of Marc Unangst and Brent Welch at Panasas). It represents the data flow for a single node with a single storage device.

You can think of this diagram being similar to the OSI model for network protocols. At the top, the operating system performs file system policy checks such as user authorization ("can this user access this file?"), permission checks ("Does the user have read or write access?", "Will the user's quota be exceeded?"), and file level attributes (e.g. time stamps, physical size, etc.).

The file system then translates the data into blocks and they are then written to the storage device (hardware). The OS is responsible for the performance of the storage device because it is responsible for the layout and organization of the storage requests. It is also responsible for the prefetching and buffer caching of the data (this can have a great impact on the overall performance of the data transfer).

I will be referring back to this diagram from time to time to help explain how various file systems differ. At something of a more fundamental level, some file systems use the "block based" approach similar to a local file system (SAN's are examples of this), some use a file based approach (NFS is an example of this), and some are object based (Panasas and Lustre are examples of this).

If you're not as familiar with the structure of file systems, let me take a little bit of space to give a very high-level view of a classic, typical file system, particularly Linux/UNIX file systems. In general file systems break data into two parts - the metadata which is data about the data, and the data itself. The metadata contains information about the data such as when the file was created, when it was last accessed, any permissions on the data, the size of the file, the storage location or locations on disk, etc. Then you have the data itself. In the case of small files, the metadata can be as large as the data itself (or the data is as small as the metadata depending upon your point of view). For files that you are likely to see on clusters, the data is much larger than the metadata (of course there are always exceptions to this).

But the metadata is extremely important. Anytime the actual data is touched, changed, etc., the metadata has to be updated. So the file system always has to modify the metadata if the data is touched, modified, or in any way changed. As an example, if you do a "ls -l" command, the file system has to examine the metadata for each file in the directory to get the current information for the file or files. This can be a source of a great bottleneck in file system performance, particularly for distributed file systems, because they have to read the metadata and return it to the client. If you do this with wildcards or for a directory with a great number of files, then it can take a long time.
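To see why a long listing is so metadata heavy, here is a rough Python sketch of what "ls -l" has to do. It is not how ls is actually implemented, and the function name is made up for this example, but it shows the key point: one metadata lookup (a stat call) per file in the directory. On a network file system each of those lookups may turn into a round trip to the server.

```python
import os
import stat
import time


def long_listing(directory):
    """Collect (mode, size, mtime, name) for every entry, like 'ls -l'."""
    rows = []
    with os.scandir(directory) as entries:
        for entry in entries:
            # One metadata lookup per file: this is the expensive part
            # when the directory lives on a remote file server.
            info = entry.stat(follow_symlinks=False)
            rows.append((
                stat.filemode(info.st_mode),  # e.g. '-rw-r--r--'
                info.st_size,                 # size in bytes
                time.ctime(info.st_mtime),    # last modification time
                entry.name,
            ))
    return sorted(rows, key=lambda row: row[3])


for mode, size, mtime, name in long_listing("."):
    print(f"{mode} {size:>10d} {mtime} {name}")
```

A directory with 100,000 files means 100,000 of these lookups, which is why such listings can crawl on a distributed file system.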

So given the simple concept of a file system, and a way to examine how a file system gets data from a client to a file system and back, let's go forth and examine some file systems. As I mentioned before, I'm going to divide the file systems into two classes, distributed file systems and parallel file systems.

Distributed File Systems

The first set of file systems I want to discuss are what I call distributed file systems. These are file systems that are network based (i.e. the actual storage hardware is not necessarily on the storage nodes) but are not necessarily parallel (i.e. there may not be multiple servers that are delivering the file system). For distributed file systems this means that the client will get and put data through a single server. I think you will recognize some of the names of the distributed file systems.

NFS

NFS has been the primary file system protocol for clusters because it is "there" and is pretty much "plug and play" on most *nix systems. It was the first widespread file system that allowed distributed systems to share data effectively. In fact, it's the only standard network file system. Consequently, it can be viewed as one of the enabling technologies for HPC clusters (i.e. getting user directories onto the nodes was trivial).

While NFS is likely to be the most ubiquitous cluster file system, it has gotten a little long in the tooth, so to say, and has some limitations. For example, it doesn't scale well for large clusters and has limited performance. It also used to have some security issues, but these were addressed in Version 4 of the NFS protocol. Despite these limitations, it remains the most popular cluster file system because:

- It comes with virtually every OS (it can even be an add-on to Windows)

- Easy to configure and manage

- Well understood

- Works across multiple platforms

- Usually requires little administration (until it goes down)

- It just works

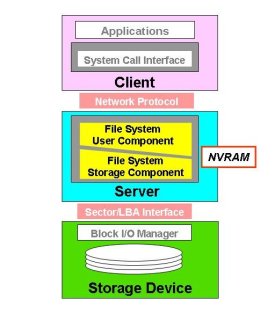

NFS is a fairly easy protocol to follow. All information, data and metadata, flows through the file server. This is commonly referred to as an "in-band" data flow model shown in Figure Two below.

Notice that the file server touches and manages all data and metadata. This model does make things a bit easier to configure and monitor. Moreover, it has narrow, well defined failure modes. Some drawbacks are that the server is an obvious performance bottleneck, that load balancing is a problem (more on that later in the article), and that security is a function of the server node and not the protocol (this situation means that security features can be all over the map).

With NFS, at least one server "exports" some storage space to the nodes of the cluster. These nodes mount the exported file system(s). When a file request is made to one of these mounted file systems, the mount daemon transfers the request to the NFS server, which then accesses the file on the local file system. The data is then transferred from the NFS server to the requesting node, typically using TCP. Notice that NFS is "file" based. That is, when a data request is made, it is done on a file, not blocks of data or a byte range. So we say that NFS is a file based protocol.

Figure Three below presents NFS using the data flow model introduced in Figure One.

If you compare this diagram with Figure One (the local storage stack), you will notice a few things. First, between the system call interface and the file system user component is a network protocol (usually TCP). This separation is fundamental to a distributed file system where the storage is not necessarily in the node itself. Second, there is usually some NVRAM (Non-Volatile Random Access Memory) in the server that is used to buffer IO.

History of NFS:

NFS is a standard protocol originally developed by Sun Microsystems in 1984 as a distributed file system that allows a system to access files over a network. It is built using a client-server architecture, where an NFS server exports some storage to various clients. The clients mount the storage as though it were a local file system and can then read and write to the space (it is possible to mount the file system as read-only so the clients can only read the data but not write to it). But it does lack some important features of true parallel file systems.

The very first version of NFS, NFS Version 1 (NFSv1), was an experimental version used only internally at Sun. NFS Version 2 (NFSv2) was released in approximately 1989 and originally operated entirely over UDP. The designers chose to make NFS a stateless protocol, with other features such as locking implemented outside of the protocol. In this context stateless means that each request is independent of all others and that the NFS server is not required to maintain any information about a client, a file, or a request stream between requests. The server was made stateless to keep the implementation much simpler.
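To make "stateless" a little more concrete, here is a minimal Python sketch. It is not the NFS wire protocol; the `ReadRequest` and `StatelessServer` names and the `"fh-1"` handle are made up for illustration. The only point is that every request carries everything the server needs (file handle, offset, count), so a restarted server can answer the next request without knowing anything about earlier ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReadRequest:
    """Hypothetical request: it names the file and the byte range itself."""
    file_handle: str  # opaque identifier for the file (made-up format)
    offset: int       # byte offset at which to start reading
    count: int        # number of bytes requested


class StatelessServer:
    """Toy server that stores file contents but remembers nothing about clients."""

    def __init__(self, files):
        self._files = files  # maps file handle -> bytes (stands in for the disk)

    def read(self, req: ReadRequest) -> bytes:
        data = self._files[req.file_handle]
        return data[req.offset:req.offset + req.count]


files = {"fh-1": b"hello, cluster storage"}

server = StatelessServer(files)
first = server.read(ReadRequest("fh-1", 0, 5))

# Simulate a server restart: a brand new object over the same "disk".
server = StatelessServer(files)
second = server.read(ReadRequest("fh-1", 7, 7))

print(first, second)
```

Because no per-client information lives in the server, the "restart" in the middle is invisible to the client, which is one reason a stateless design is simpler to implement and to recover.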

In the late 1980's the University of California, Berkeley developed a freely distributable but compatible version of NFSv2. This led to many OS's becoming NFS compatible so that different machines could share a common storage pool. There were some incremental developments of NFSv2 to improve performance. One of these was to add support for TCP to NFSv2.

The Version 3 specification (NFSv3) was released around 1995. It added some new features to keep up with modern hardware, but still did not address the stateless feature nor address some of the security issues that had developed over time. However, NFSv3 added support for 64-bit file sizes and offsets to handle files larger than 4 gigabytes (GB), added support for asynchronous writes on the server to improve write performance, and added TCP as a transport layer. These are three major features that users of NFS had been asking to be added for some time. NFSv3 was quickly adopted and put into production and is probably the most popular version of NFS in use today.

However, even NFSv3 has some problems. For example it does not have what is usually termed "strict cache coherency". This means that when a change is made to a file, that change is not immediately visible to all other clients. In NFS, this is because there is no message from the server to the other clients to notify them that the file has changed (recall that the NFSv3 server is stateless so it keeps no information outside of the single request so it can't notify other clients that the data has changed). This can have a great impact on writing to the same file. In other words - it can be dangerous. But NFS does not prohibit you from doing this so we can say that NFS has loose coherency.

However, even with loose coherency, two clients can still write to the same file without having problems, as long as their writes don't overlap and don't share pages. Not sharing pages is usually the most problematic aspect, because the necessary alignment depends on the page size of both machines, and there is no way for client A to find the page size of client B. Page-sharing causes problems because many NFS clients (including Linux) do their reads and writes in units of whole VM pages (4K on x86 and x86-64, 16K on IA-64). This means that if the application writes data from 0-2K in a 4K page, the NFS client will read the whole 4K page from the server, apply the new data to the first half of the page, and then write out the whole 4K page. This process is usually referred to as read-modify-write.

This is what leads to the "last writer wins" scenario. Consider the case where, at the same time client A is writing from 0-2K in the page, client B reads the same page from the server, modifies the other half of the page (offset 2K-4K), and then writes back the whole page to the server. Since the server sees two 0-4K writes for the same range in the file, the page will contain either [AAAold] or [oldBBB], depending on whether A or B wrote last, but not [AAABBB] as it should.
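The scenario can be replayed in a few lines of Python. This is only a toy model under stated assumptions: the `Server` class and `modify` helper are invented for the illustration, a single 4K page stands in for the file, and both clients are assumed to read the page before either writes it back.

```python
PAGE_SIZE = 4096
HALF = PAGE_SIZE // 2


class Server:
    """Toy file server holding a single page of 'old' data."""

    def __init__(self):
        self.page = bytearray(b"o" * PAGE_SIZE)

    def read_page(self) -> bytes:
        return bytes(self.page)

    def write_page(self, data: bytes) -> None:
        self.page[:] = data  # the whole page is overwritten


def modify(page: bytes, offset: int, payload: bytes) -> bytes:
    """Apply new data to a private copy of the page (the 'modify' step)."""
    buf = bytearray(page)
    buf[offset:offset + len(payload)] = payload
    return bytes(buf)


server = Server()

# Read: both clients fetch the same page before either has written.
page_a = server.read_page()
page_b = server.read_page()

# Modify: A changes the first half, B changes the second half.
page_a = modify(page_a, 0, b"A" * HALF)
page_b = modify(page_b, HALF, b"B" * HALF)

# Write: each client writes back the WHOLE page; B happens to write last.
server.write_page(page_a)
server.write_page(page_b)

final = server.read_page()
print(final[:4], final[-4:])
```

The final page holds old data in the first half and B's data in the second half, so A's update has silently disappeared even though the two writes never overlapped at the byte level.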

The other issue with NFSv3 is that the client is not required to write back modified data immediately to the server. It is only required to write data when the file is closed. This is what leads to the "open-to-close" cache consistency ascribed to NFS. If client A writes to a file, and client B subsequently reads from the modified range, it is not guaranteed to see the new data until client A closes the file. If code writers and users don't understand this, they can get unexpected results.
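A small Python sketch of this behavior follows. It is a deliberate simplification with invented names (`Server`, `Client`, `results.dat`): the writing client is assumed to hold all modified data until `close()`, whereas a real client is free to flush earlier, and the reading client's own caching is ignored.

```python
class Server:
    """Toy server: a dictionary of file name -> contents."""

    def __init__(self):
        self.files = {"results.dat": b"old"}


class Client:
    """Toy client that holds modified data locally until the file is closed."""

    def __init__(self, server):
        self.server = server
        self.dirty = {}  # file name -> data not yet sent to the server

    def write(self, name, data):
        self.dirty[name] = data  # cached locally; the server is not updated

    def close(self, name):
        if name in self.dirty:
            self.server.files[name] = self.dirty.pop(name)  # flush on close

    def read(self, name):
        if name in self.dirty:  # a client always sees its own writes
            return self.dirty[name]
        return self.server.files[name]


server = Server()
client_a = Client(server)
client_b = Client(server)

client_a.write("results.dat", b"new")
before_close = client_b.read("results.dat")  # B still sees the old contents

client_a.close("results.dat")
after_close = client_b.read("results.dat")   # now the new data is visible

print(before_close, after_close)
```

For application writers the practical lesson is the same as in the text: if another node needs to see the data, close the file (or otherwise force it out) before the other node reads it.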

Around 2003, Version 4 of NFS (NFSv4) was released with some improvements. In particular, it added some speed improvements (who doesn't like speed), strong security (with the ability for multiple security protocols), and most important, NFSv4 finally became a stateful protocol (for the most part). Some server state was added, primarily in the form of delegations and file locks. File locks are the main item that requires the server to track state. In addition to the lock state itself, the server has to know about, and track, open files from the client's point of view, to enforce Win32 "share mode" locks. The server is not required to keep this state persistently (i.e., a server restart is allowed to destroy state about open files), and there is a mechanism in the protocol that allows the client to detect when the state has been lost and recover it.
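One way to picture the "detect and recover" idea is the Python sketch below. It is not the NFSv4 mechanism itself, just an assumed, simplified scheme: the hypothetical `LockServer` stamps every token it hands out with a boot identifier that changes on restart, so a token from before the restart is recognizably stale and the client knows it must re-establish its lock.

```python
import itertools


class StaleStateError(Exception):
    """The token was issued before the server's most recent restart."""


class LockServer:
    """Toy stateful server; lock state lives in memory and dies on restart."""

    _boots = itertools.count(1)  # stand-in for a value that changes per restart

    def __init__(self):
        self.boot_id = next(LockServer._boots)
        self.locks = {}  # file name -> id of the client holding the lock

    def lock(self, client_id, name):
        holder = self.locks.setdefault(name, client_id)
        if holder != client_id:
            raise PermissionError(f"{name} is locked by {holder}")
        return (self.boot_id, client_id, name)  # token the client presents later

    def write(self, token):
        boot_id, client_id, name = token
        if boot_id != self.boot_id:
            raise StaleStateError(name)  # state predates this server instance
        if self.locks.get(name) != client_id:
            raise PermissionError(name)
        return "ok"


server = LockServer()
token = server.lock("client-a", "mesh.dat")
status_before = server.write(token)

server = LockServer()  # restart: the lock table is empty, boot_id has changed
try:
    server.write(token)
    detected_loss = False
except StaleStateError:
    detected_loss = True
    token = server.lock("client-a", "mesh.dat")  # the client reclaims its lock

status_after = server.write(token)
print(status_before, detected_loss, status_after)
```

The design choice worth noticing is that the server never has to write its lock table to disk; the clients carry enough information to notice the loss and rebuild it.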

NFSv4.0 added strong security to NFS focusing on authentication, integrity, and privacy. To do this and allow for multiple security implementations, a new interface called RPCSEC_GSS (based on the GSS-API) was developed. It allows authentication plug-ins to be created for protocols like NFS that use RPC (Remote Procedure Call). An example of one of the plug-ins is Kerberos 5.

NFSv4.0 also has an extensible architecture to allow easier evolution of the standard. Despite the better security, improved performance, and making NFS a stateful protocol, NFSv4 has not yet seen the widespread adoption that NFSv3 has. It has all of the components of an industrial strength file system but it still lacks performance and scalability. But this could change very soon.

NFS/RDMA

NFS/RDMA, sometimes also called NFSoRDMA (NFS over RDMA), is a binding of NFS on top of RDMA (Remote Direct Memory Access) protocols such as iWARP (RDMA over Ethernet) and InfiniBand. The goal is to improve the network performance of NFS (versions 2, 3, and 4) by taking advantage of a higher bandwidth, lower latency interconnect. Using RDMA should allow the following:

- Reduced CPU usage on the client for data transfer

- Zero-copy data transfers

- User-space IO (kernel bypass)

- Reduced latency

- Improved data throughput

Work on NFS/RDMA is on-going for both the Linux kernel and for OpenSolaris. In the Linux 2.6.24 kernel, the NFS/RDMA client was released while the 2.6.25 kernel contained the server portion.

One of the other motivations for developing NFS/RDMA is that many clusters are shipping with a high-speed interconnect such as Myrinet, InfiniBand, or Quadrics. A vast majority of these clusters use a high-speed interconnect for computational traffic and a second network for data traffic (many times just a single GigE line). The high-speed interconnects have very low latency and very high bandwidth, but most cluster applications don't need all of the bandwidth. So there is room to push more data through the network. So why not use the left over bandwidth for data traffic? This also allows two networks to be combined into a single network since you can combine the computational traffic with data traffic. You can actually do that today with "standard" NFS, but you have to wrap TCP packets in IB packets to transfer them over the IB network. This has to be done since NFS only understands UDP and TCP (most people use TCP). For example, with InfiniBand this means that you have to use IPoIB (IP over IB). This limits performance since you have to encode and decode the TCP packets at either end of the network. NFS/RDMA adds the ability to use RDMA protocols to the RPC layer, so you don't have to wrap the TCP packets.

In the case of 10GigE, you could just run "normal" NFS over the NIC, but the TCP processing required at 10GigE speeds will limit NFS performance and increase the load on the client CPU. Since many 10GigE NICs are moving towards using iWARP, NFS/RDMA will allow the highest possible performance for data transfer while reducing the CPU load on the client.

A great deal of work went into developing NFS/RDMA since it's an open IETF (Internet Engineering Task Force) standard. A number of people worked on getting NFS/RDMA functioning in Linux. Tom Tucker at Open Grid Computing was one of the leaders in the efforts to get both the NFS client and the NFS server into the Linux kernel. As mentioned earlier, the client went into the 2.6.24 kernel and the server went into the 2.6.25 kernel. You can visit the NFS-RDMA project to get the patches and see what work has been done. Since the NFS/RDMA changes are at the RPC layer, NFSv2, NFSv3, and NFSv4 should just work out of the box.

Mellanox demonstrated NFS/RDMA over a 20 Gb/s InfiniBand link from clients to a new Mellanox MTD2000 NFS-RDMA Server Product Development Kit (PDK) that features a RAID-5 back-end storage subsystem with SAS (Serial Attached SCSI) hard disk drives. With two clients connected to the MTD2000, each using a 20 Gb/s IB link and reading from the file server cache, Mellanox was able to achieve 1,400 MB/s of read throughput measured using IOzone. Actual write performance to the disk was measured at about 400 MB/s. Mellanox used open-source NFS/RDMA server and client code for the demonstration, and the code is compatible with the OpenFabrics Enterprise Distribution (OFED) v. 1.1. This shows the performance potential for running NFS over a high-speed interconnect using appropriate protocols.

But NFS is still limited in that all data traffic from a particular client must go through a single server to access data on the physical media. This creates a bottleneck in performance (all IO goes through one node). People have always wanted more performance and scalability out of NFS (who doesn't like performance?) but have not received it. That is about to change.

pNFS

Currently, a number of vendors are working on version 4.1 of the NFS standard. One of the biggest additions to NFSv4.1 is called pNFS or Parallel NFS. When people first hear about pNFS they sometimes think it is an attempt to kludge parallel file system capabilities into NFS, but this isn't the case. It is really the next step in the evolution of the NFS protocol: a well planned, tested, and executed approach to adding a true parallel file system capability to NFS. The goal is to improve the performance and scalability while making the file system a standard (recall that NFS is the only true shared file system standard). Moreover, this standard is designed to be used with file based, block based, and object based storage devices with an eye towards freeing customers from vendor or technology lock-in. The NFSv4.1 draft standard contains a draft specification for pNFS that is being developed and demonstrated now. A number of vendors are working together to develop pNFS. Obviously the backing of large vendors means there is a real chance that pNFS will see wide acceptance in a reasonable amount of time.

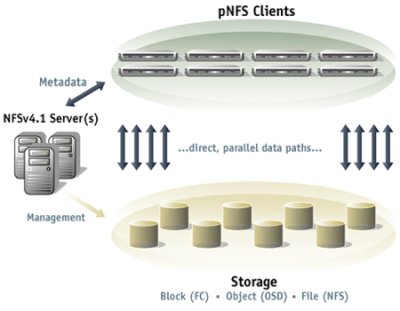

The basic architecture of pNFS looks like the following (from the www.pnfs.com website):

The architecture consists of pNFS clients, NFSv4.1 Metadata server(s), and one or more storage devices. These storage devices can be block based as in the case of Fibre Channel storage, object based as in the case of Panasas or Lustre, or file based as in the case of NFS storage devices such as those from NetApp. There is also a network connecting the NFSv4.1 Metadata server(s) with the clients and the storage devices, as well as a network connecting the clients and the storage devices.

The pNFS clients mount the file system in a similar manner to NFSv3 or NFSv4 file systems. When they access a file on the pNFS file system they make a request to one of the NFSv4.1 metadata servers that passes back what is called a layout to the client. A layout is an abstraction that describes details about the file such as permissions, etc, as well as where a file is located on the storage devices and what capabilities the clients have in accessing the storage devices where the file is located (read) or to be located (write). Once the client has the layout, it accesses the data directly on the storage device(s) removing the metadata server from the actual data access process. When the client is done, it sends the layout back to the metadata server in the event any changes were made to the file. The metadata server also acts as the traffic cop in the event that more than one client wants to access a file. It controls permissions on the file and grants capabilities to clients to write or read to the file. This enforces coherency on the file allowing more than one client to read or write to the file at the same time.

If you have one or more storage devices, the clients can access all of them in parallel but only use the devices where the data is stored or to be stored. This is the parallel portion of pNFS. If you want more speed, you just add more data storage devices (more spindles and more network connections) and make sure the data is spread across the devices. Moving the metadata server out of the direct line of fire during file operations also improves speed because the bottleneck of the metadata server has been removed once the client is granted permission to access the file and knows where the data is located.
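The flow described above (ask the metadata server for a layout once, then go straight to the storage devices in parallel) can be sketched in Python. Everything here is a toy model with invented names (`Layout`, `MetadataServer`, `StorageDevice`, the `osd0`..`osd2` device ids, simple round-robin striping); real pNFS layouts and the storage protocols are defined by the standard and the layout drivers, not by this sketch.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """Hypothetical layout: where the stripes of one file live."""
    stripe_size: int
    devices: tuple  # device ids, in round-robin stripe order
    length: int     # file length in bytes


class StorageDevice:
    def __init__(self):
        self.chunks = {}  # (file name, stripe index) -> bytes


class MetadataServer:
    """Hands out layouts; it never touches file data."""

    def __init__(self, layouts):
        self.layouts = layouts
        self.requests = 0

    def get_layout(self, name):
        self.requests += 1
        return self.layouts[name]


def stripe_count(layout):
    return (layout.length + layout.stripe_size - 1) // layout.stripe_size


def device_for(layout, devices, index):
    return devices[layout.devices[index % len(layout.devices)]]


def write_striped(name, data, layout, devices):
    size = layout.stripe_size
    for i in range(stripe_count(layout)):
        device_for(layout, devices, i).chunks[(name, i)] = data[i * size:(i + 1) * size]


def read_striped(name, layout, devices):
    def fetch(i):  # one direct request to one storage device
        return device_for(layout, devices, i).chunks[(name, i)]

    with ThreadPoolExecutor(max_workers=len(layout.devices)) as pool:
        # map() returns results in stripe order even though fetches overlap
        return b"".join(pool.map(fetch, range(stripe_count(layout))))


data = bytes(range(256)) * 4
devices = {dev_id: StorageDevice() for dev_id in ("osd0", "osd1", "osd2")}
mds = MetadataServer({"mesh.dat": Layout(100, ("osd0", "osd1", "osd2"), len(data))})

layout = mds.get_layout("mesh.dat")           # the only metadata server request
write_striped("mesh.dat", data, layout, devices)
result = read_striped("mesh.dat", layout, devices)
print(result == data, mds.requests)
```

Note that the metadata server is contacted once, while all of the data moves between the client and the three devices. Adding a fourth device to the layout spreads the same stripes over more spindles without changing the client logic.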

The client needs a layout "driver" so that it can communicate with any one of the three types of storage devices or possibly a combination of the devices at any one time. How the data is actually transmitted between the storage devices and the clients is defined elsewhere. The "control" protocol shown in Figure Four between the metadata server and the storage is also defined elsewhere. The fact that the control protocol and the data transfer protocols are defined elsewhere gives great flexibility to the vendors. This allows them to add value to pNFS to improve performance, improve manageability, improve fault tolerance, or any feature they wish to address as long as they follow the NFSv4.1 standard.

If you haven't already noticed, one of the really attractive features of pNFS is that it avoids vendor lock-in and technology lock-in. This is in part due to pNFS being a standard (if it is approved) in NFSv4.1. In fact it will be the only parallel file system standard. So vendors who follow the standard should be able to inter-operate, which is what all customers want. So theoretically a system may have a pool of object based storage, file based storage, and block based storage, and have the pNFS clients all access this storage pool. This allows you, the customer, to choose whatever storage you want from whichever vendor you want as long as there are layout drivers for it.

So why should vendors support NFSv4.1? The answer is fairly simple. With NFSv4.1 they can now support multiple OS's without having to port their entire software stack. They only have to write a driver for their hardware. While writing a driver isn't trivial, it is much easier than porting an entire software stack to a new OS.

Parallel NFS is on its way to becoming a standard. It's currently in the prototyping stage and interoperability testing is being performed by the various participants. It is hoped that sometime in late 2007 it will be adopted as the new NFS standard and will be available in a number of operating systems. Also, Panasas has announced that they will be releasing key components of their DirectFlow client software to accelerate the adoption of pNFS.

If you would like more information please go to the Panasas website to see a recorded webinar on pNFS. If you want to experiment with pNFS now, the Center for Information Technology Integration (CITI) has some Linux 2.6 kernel patches that use PVFS2 for storage. Finally, Panasas has created a website to provide documentation, links, and hopefully soon, some code for pNFS.

That is all for part one. Coming Next: Part Two: NAS, AoE, iSCSI, and more!

I want to thank Marc Unangst, Brent Welch, and Garth Gibson at Panasas for their help in understanding the complex world of cluster file systems. While I haven't even come close to achieving the understanding that they have, I'm much better than when I started. This article, an attempt to summarize the world of cluster file systems, is the result of many discussions in which they answered many, many questions from me. I want to thank them for their help and their patience.

I also hope this series of articles, despite their length, has given you some good general information about file systems and even storage hardware. And to borrow some parting comments, "Be well, Do Good Work, and Stay in Touch."

A much shorter version of this article was originally published in ClusterWorld Magazine. It has been greatly updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Dr. Jeff Layton hopes to someday have a 20 TB file system in his home computer. He lives in the Atlanta area and can sometimes be found lounging at the nearby Fry's, dreaming of hardware and drinking coffee (but never during working hours).

© Copyright 2008, Jeffrey B. Layton. All rights reserved.

This article is copyrighted by Jeffrey B. Layton. Permission to use any part of the article or the entire article must be obtained in writing from Jeffrey B. Layton.