Real application performance

InfiniBand is a proven interconnect for clustered server solutions and one of the leading connectivity solutions for high-performance computing. InfiniBand was designed as a general-purpose I/O interconnect and in practice provides low latency and the highest link speed.

Computational Fluid Dynamics (CFD) is the branch of fluid mechanics that uses numerical methods and algorithms to solve and analyze problems that involve fluid flows. ANSYS/FLUENT is a leading commercial software package for solving fluid flow problems. The broad physical modeling capabilities of FLUENT have been applied to industrial applications ranging from air flow over an aircraft wing to combustion in a furnace, from bubble columns to glass production, from blood flow to semiconductor manufacturing, and from clean room design to wastewater treatment plants. The ability of the software to model in-cylinder engines, aeroacoustics, turbomachinery, and multiphase systems has served to broaden its reach.

At the core of any CFD calculation is a computational grid, used to divide the solution domain into thousands or millions of elements where the problem variables are computed and stored. FLUENT uses unstructured grid technology, which means that the grid can consist of elements in a variety of shapes: quadrilaterals and triangles for 2D simulations, and hexahedra, tetrahedra, prisms, and pyramids for 3D simulations. These elements form an interlocking network throughout the volume where the fluid flow analysis takes place.
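To make the idea of a mixed-element unstructured grid concrete, here is a minimal Python sketch. It is an illustration only and does not reflect FLUENT's internal data structures or file format: the cell representation (a shape name plus a tuple of node indices), the helper names `validate_cells` and `count_by_shape`, and the toy mesh are all assumptions made for this example.

```python
from collections import Counter

# Number of corner nodes for each element shape named in the text.
NODES_PER_ELEMENT = {
    "triangle": 3,       # 2D
    "quadrilateral": 4,  # 2D
    "tetrahedron": 4,    # 3D
    "pyramid": 5,        # 3D
    "prism": 6,          # 3D
    "hexahedron": 8,     # 3D
}


def validate_cells(cells, num_nodes):
    """Check that every (shape, node_ids) cell is consistent.

    Raises ValueError if a cell has the wrong node count for its shape
    or refers to a node index outside 0..num_nodes-1.
    """
    for shape, node_ids in cells:
        expected = NODES_PER_ELEMENT[shape]
        if len(node_ids) != expected:
            raise ValueError(
                f"{shape} needs {expected} nodes, got {len(node_ids)}"
            )
        if any(n < 0 or n >= num_nodes for n in node_ids):
            raise ValueError(f"{shape} refers to a node outside the mesh")


def count_by_shape(cells):
    """Return how many cells of each shape the mesh contains."""
    return Counter(shape for shape, _ in cells)


# Hypothetical toy hybrid mesh: cells share nodes, which is what makes
# the elements an "interlocking network".
cells = [
    ("hexahedron", (0, 1, 2, 3, 4, 5, 6, 7)),
    ("pyramid", (4, 5, 6, 7, 8)),       # sits on the top face of the hexahedron
    ("tetrahedron", (5, 6, 8, 9)),      # shares nodes with both cells
]
validate_cells(cells, num_nodes=10)
shape_counts = count_by_shape(cells)
print(dict(shape_counts))
```

Because every cell carries its own shape and connectivity, nothing in this representation requires the regular row-and-column ordering of a structured grid, which is the property the text describes.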

The performance of a CFD code depends on several factors, including the size and topology of the mesh, physical models, numerics and parallelization, and compilers and optimization, in addition to the performance characteristics of the hardware where the simulation is performed. FLUENT provides a set of benchmark problems that represent typical current usage and cover a wide range of mesh sizes and physical models. The problems selected represent a range of simulations typical of those found in industry. The principal objective of this benchmark suite is to provide comprehensive and fair comparative information on the performance of FLUENT on available hardware platforms.

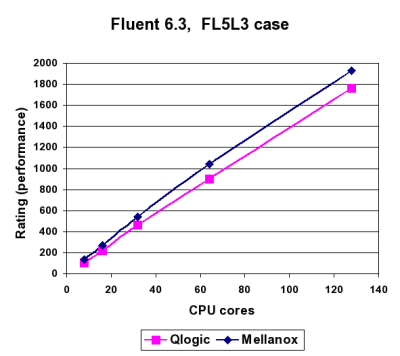

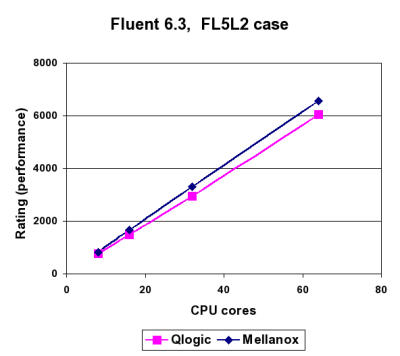

The charts in this section compare Mellanox InfiniBand and QLogic InfiniPath interconnects on the same platform (dual-core, dual-socket Intel Xeon 3 GHz 5100 series servers, code name Woodcrest) using FLUENT benchmarks. When testing real-world applications, the entire architecture makes the difference. The Mellanox architecture is a full transport-offload architecture with hardware RDMA capabilities, while the QLogic architecture is a full "on-loading" architecture.

In the FLUENT FL5L3 benchmark, a turbulent flow of air through a duct is computed. The cross-sectional planes of the duct transition from a circle at the inlet to a rectangle at the outflow boundary. The Reynolds-Stress Model (RSM) is used for computing turbulence (number of cells: 9,792,512; cell type: hexahedral; models: RSM turbulence; solver: segregated implicit).


The FLUENT FL5L2 benchmark represents the computation of the exterior flow field around a simplified model of a passenger sedan. The simulation geometry was used for the Japan External Aerodynamics competition. A viscous-hybrid grid with prismatic cells is used to adequately model the boundary layer regions (number of cells: 3,618,080; cell type: hybrid; models: k-epsilon turbulence; solver: segregated implicit).

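To give a feel for how much work each core receives as the cluster grows, the following Python sketch divides the two benchmark meshes evenly across cores. The cell counts and the four cores per node (dual socket, dual core) come from the text; the node counts of 1, 2, 4, and 8 and the perfectly even split are assumptions for illustration, since a real mesh partitioner does not balance cells exactly.

```python
# Dual-socket, dual-core servers, as described for the test platform.
CORES_PER_NODE = 2 * 2

# Cell counts quoted in the benchmark descriptions.
BENCHMARK_CELLS = {
    "FL5L3": 9_792_512,
    "FL5L2": 3_618_080,
}


def cells_per_core(total_cells, nodes, cores_per_node=CORES_PER_NODE):
    """Largest per-core share when cells are split as evenly as possible."""
    cores = nodes * cores_per_node
    return -(-total_cells // cores)  # ceiling division


# Hypothetical node counts, chosen only to show the trend.
for name, total in BENCHMARK_CELLS.items():
    for nodes in (1, 2, 4, 8):
        share = cells_per_core(total, nodes)
        print(f"{name}: {nodes} node(s) -> about {share:,} cells per core")
```

As the per-core share shrinks, each process has less computation to do between exchanges of boundary data with its neighbors, which is one reason the interconnect has more influence on run time at higher node counts.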

Choosing the right interconnect

In both FLUENT benchmarks, Mellanox InfiniBand shows higher performance and better super-linear scaling compared to QLogic InfiniPath. FLUENT's CFD application is latency-sensitive, and the results shown here are good examples of how pure latency benchmarks can be misleading when choosing an interconnect. To determine a system's performance, one should take into consideration the entire interconnect architecture (such as off-loading versus on-loading) and its ability to scale, rather than just single points of data.
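Scaling is super-linear when the speedup over the baseline run exceeds the increase in node count, that is, when parallel efficiency is greater than 1. The Python sketch below shows one way to compute this from measured wall-clock times. The function name `scaling_table` and the timings are hypothetical and are not taken from the charts in this article.

```python
def scaling_table(wall_times):
    """Compute speedup and parallel efficiency from wall-clock times.

    wall_times maps node count -> seconds for the same benchmark.
    The smallest node count is used as the baseline. Returns a list of
    (nodes, speedup, efficiency, is_super_linear) tuples sorted by nodes.
    """
    base_nodes = min(wall_times)
    base_time = wall_times[base_nodes]
    rows = []
    for nodes in sorted(wall_times):
        speedup = base_time / wall_times[nodes]
        ideal = nodes / base_nodes       # linear-scaling speedup
        efficiency = speedup / ideal
        rows.append((nodes, speedup, efficiency, efficiency > 1.0))
    return rows


# Hypothetical timings in seconds, for illustration only.
example_times = {1: 1000.0, 2: 480.0, 4: 230.0, 8: 130.0}

rows = scaling_table(example_times)
for nodes, speedup, efficiency, super_linear in rows:
    label = "super-linear" if super_linear else "linear or below"
    print(f"{nodes} node(s): speedup {speedup:.2f}, "
          f"efficiency {efficiency:.2f} ({label})")
```

Comparing whole tables like this across interconnects, rather than a single latency figure, is the kind of evaluation the paragraph above recommends.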

To provide better insight into application performance, Mellanox has created the Mellanox Cluster Center. The Mellanox Cluster Center offers an environment for developing, testing, benchmarking, and optimizing products based on InfiniBand technology. The center, located in Santa Clara, California, provides on-site technical support and enables secure sessions on site or remotely. More details can be obtained from the Mellanox web site.

Note: You can download a PDF version of this article.

Another article by Gilad, "Cluster Interconnects: Single Points of Performance and Optimum Connectivity in the Multi-core Environment," is also available.

Gilad Shainer is a senior technical marketing manager at Mellanox Technologies focusing on high-performance computing. He joined Mellanox Technologies in 2001 to develop Mellanox's InfiniHost PCI-X Host Channel Adapter (HCA) device and later led the development of Mellanox's InfiniHost III Ex PCI Express HCA device. Gilad Shainer holds an MSc degree (2001, Cum Laude) and a BSc degree (1998, Cum Laude) in Electrical Engineering from the Technion Institute of Technology in Israel. He is also a member of the PCI-SIG PCI-X and PCI Express Working Groups and has contributed to the definition of the PCI-X 2.0 specifications.

Copyright © 2006 Mellanox, Inc.