A Deep Dive Exploring The Portland Group's PGI Accelerator Model

The remarkable computational power of General-Purpose Graphics Processing Units (GP-GPUs) has helped them steadily gain traction in High Performance Computing (HPC). However, GP-GPU programming can require new methods that bring additional work, code revisions, or even rewrites, and this effort is frequently an obstacle to adopting GP-GPU technology.

To help solve this problem, The Portland Group (PGI) has introduced an elegant way to augment existing HPC applications so that they run efficiently on GP-GPUs while keeping their original code structure in standard Fortran or C. This approach gives programmers a rapid, low-investment way to investigate GP-GPU computing. Recently, PGI announced improved versions of its compiler and development tools that further enhance the PGI Accelerator Model for GP-GPUs.

In simple terms, GP-GPUs are good at solving Single Instruction Multiple Data (SIMD) problems. This class of problem is often called "data parallel": the same instruction is applied to many data elements at the same time. SIMD maps well to video processing and, more importantly, to many of the mathematical operations used in HPC applications. While GP-GPUs are fast, they are not standalone general-purpose processors and must be coupled with a conventional CPU (the host processor).
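To make "data parallel" concrete, here is a minimal plain-C sketch; it runs on the CPU and involves no accelerator. In the first loop every element receives the same operation and no iteration depends on another, so in principle all iterations could run at once. The prefix sum is included only as a contrast, because each step needs the previous step's result. The function names are illustrative, not from any library.

```c
#include <stddef.h>

/* Data parallel: y[i] depends only on x[i], so the iterations are
   independent and could all execute simultaneously. */
void scale_and_offset(size_t n, float s, float b, const float *x, float *y)
{
    for (size_t i = 0; i < n; ++i)
        y[i] = s * x[i] + b;
}

/* Not data parallel as written: each iteration uses the running total
   produced by the previous iteration. */
void prefix_sum(size_t n, const float *x, float *y)
{
    float acc = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        acc += x[i];
        y[i] = acc;
    }
}
```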

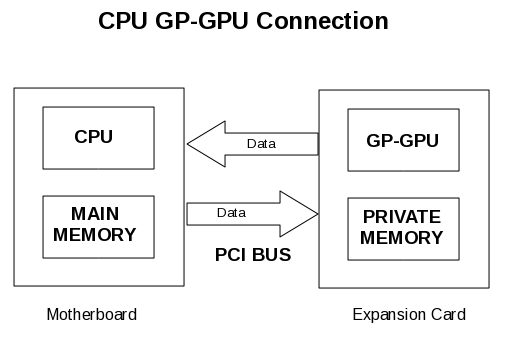

GP-GPUs are co-processors, typically attached to the host through the PCI Express (PCIe) bus, as shown in the figure above. The data used by the GP-GPU must be moved from main memory to the GP-GPU's private memory over this bus. Even though a PCIe Gen2 x16 link is fast (a peak of 8 GB/s in each direction), it is still one of the slowest steps in most GP-GPU accelerated applications.

Data movement is often bidirectional (for example, send an array out to be computed and get a new array back), and transfers may be repeated many times during a program's execution. Minimizing and carefully scheduling this data movement is often the key to a performance gain.
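A back-of-the-envelope sketch in C shows why repeated transfers matter. It simply divides bytes by an assumed effective bandwidth and ignores latency and driver overhead, so real transfers take longer. The bandwidth used in the usage note is an assumption, not a measurement.

```c
/* Lower-bound estimate of one host<->GPU transfer: bytes / bandwidth.
   Latency and driver overhead are ignored, so real copies take longer. */
double transfer_seconds(double bytes, double bytes_per_second)
{
    return bytes / bytes_per_second;
}

/* Time to send an input array to the GPU and bring a result array back,
   repeated `trips` times (e.g. once per outer iteration of a solver). */
double round_trip_seconds(double bytes_in, double bytes_out, int trips,
                          double bytes_per_second)
{
    double one_trip = transfer_seconds(bytes_in, bytes_per_second) +
                      transfer_seconds(bytes_out, bytes_per_second);
    return trips * one_trip;
}
```

For example, at an assumed 4 GB/s, `round_trip_seconds(2e9, 2e9, 100, 4e9)` comes to about 100 seconds, versus about 1 second if the 2 GB arrays cross the bus only once and stay on the GPU between iterations.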

Programming Options

There are two popular programming languages for GP-GPUs. The first is NVIDIA's CUDA. CUDA, the Compute Unified Device Architecture, is designed to work on NVIDIA GP-GPUs and multi-core x86 systems. CUDA C is similar to ANSI C, although early versions did not support recursion in GPU code. The other language is OpenCL, which was initially developed by Apple and is now maintained by the Khronos Group as a standard low-level API for GP-GPU and multi-core hardware. Its kernel language is based on C (C99) and, like CUDA, adds extensions to support parallel operations. Both NVIDIA and AMD/ATI have pledged support for the OpenCL standard, which supports both data-parallel (GP-GPU) and task-parallel (multi-core) processing.

Both CUDA and OpenCL require the programmer to manage data flow to and from the host CPU. For example, CUDA programs call the `cudaMemcpy` function to move data to and from the GP-GPU. In addition, both CUDA and OpenCL often require substantial code changes, including explicit memory allocation and GP-GPU kernel invocations. As a result, existing programs often grow in size (more lines of code) when ported to OpenCL or CUDA, and users are often faced with maintaining two versions of their applications: a "CPU" version and a "GPU" version. In general, CUDA and OpenCL, while useful tools, are often considered low-level and somewhat hardware-specific.
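The following host-only C sketch mimics the shape of that explicit workflow. Here `malloc`, `memcpy`, and `free` stand in for the CUDA runtime's `cudaMalloc`, `cudaMemcpy`, and `cudaFree`, and an ordinary function stands in for a kernel launch, so nothing actually runs on a GPU. The point is the bookkeeping: a one-line loop turns into allocate, copy in, launch, copy out, and free.

```c
#include <stdlib.h>
#include <string.h>

/* Host-only stand-in for a GPU kernel. */
static void kernel_square(size_t n, const double *in, double *out)
{
    for (size_t i = 0; i < n; ++i)
        out[i] = in[i] * in[i];
}

/* Returns 0 on success, -1 if a simulated "device" allocation fails. */
int square_on_device(size_t n, const double *host_in, double *host_out)
{
    if (n == 0)
        return 0;
    size_t bytes = n * sizeof(double);
    double *dev_in = malloc(bytes);    /* cf. cudaMalloc */
    double *dev_out = malloc(bytes);   /* cf. cudaMalloc */
    if (dev_in == NULL || dev_out == NULL) {
        free(dev_in);
        free(dev_out);
        return -1;
    }
    memcpy(dev_in, host_in, bytes);    /* cf. cudaMemcpy, host to device */
    kernel_square(n, dev_in, dev_out); /* cf. a <<<grid, block>>> kernel launch */
    memcpy(host_out, dev_out, bytes);  /* cf. cudaMemcpy, device to host */
    free(dev_in);                      /* cf. cudaFree */
    free(dev_out);
    return 0;
}
```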

Working At A Higher Level: The PGI Accelerator Programming Model

Most programmers would rather have a compiler manage all of the low-level details of the GP-GPU accelerator; ideally, the GP-GPU would be used automatically. Most HPC codes are written in Fortran and C (and some in C++), but these languages provide no way to tell the compiler where to run code (CPU or GP-GPU) or what data should be sent to and from the GP-GPU. One solution is to use "comment" directives, or pragmas, which let a user provide hints and guidance to the compiler. The OpenMP specification is an example of this approach, and it has worked well for multi-core threaded applications. The Portland Group has developed a similar approach for GP-GPU programming, called the PGI Accelerator Programming Model. It allows existing Fortran and C codes to be augmented with directives so that their more compute-intensive portions can be compiled to run on NVIDIA GP-GPUs. Consider the following Fortran code:

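```fortran
program trig_identity
  implicit none
  integer, parameter :: n = 100000
  real :: a(n), r(n)
  integer :: i
  call random_number(a)
  !$acc region
  do i = 1, n
     r(i) = sin(a(i)) ** 2 + cos(a(i)) ** 2
  end do
  !$acc end region
  print *, 'r(1) =', r(1), '  r(n) =', r(n)
end program trig_identity
```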

Other than the !$acc lines, the rest of the code looks normal. These two comments mark the beginning and end of an accelerator region, and the code between them is compiled into an accelerator kernel (a program that runs on the GP-GPU). All necessary data is copied from host memory to GP-GPU memory as required, and the results are copied back. Accelerator regions have some limitations (e.g., they cannot be nested, code cannot branch into or out of them, and they should not have side effects), but in the simplest case, that is all that needs to be done to use the GP-GPU.
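Since the model also supports C, here is a sketch of a comparable compute region in C, using a SAXPY loop rather than the trigonometric example. The `#pragma acc region` spelling followed by a braced block reflects our understanding of PGI's C syntax for this model; check the PGI documentation for your compiler release. Compilers that do not recognize the pragma ignore it (possibly with a warning) and run the loop on the CPU, which is what lets a single source version serve both targets. The `restrict` qualifiers promise that `x` and `y` do not overlap, which a compiler generally needs to know before it can safely parallelize the loop.

```c
/* SAXPY (y = a*x + y) inside a PGI Accelerator compute region.
   Without a PGI accelerator compiler the pragma is ignored and the
   loop simply runs on the host. */
void saxpy(int n, float a, const float *restrict x, float *restrict y)
{
#pragma acc region
    {
        for (int i = 0; i < n; ++i)
            y[i] = a * x[i] + y[i];
    }
}
```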

In addition to compute regions, the PGI Accelerator model provides a data region directive, which gives more control over how data is moved between the host and the GP-GPU. For instance, if a single data region encloses many compute regions, those compute regions reuse the data already allocated on the GP-GPU instead of copying it again. This can reduce data movement and improve performance. However, a data region only covers the code lexically inside it; it does not help acc regions in called procedures or elsewhere outside the data region. To solve this problem, PGI's latest release introduced new features that allow more control over data placement. The first is the mirror directive. When data is "mirrored," a copy is created on both the host and the GP-GPU. The two copies are used independently, and the update directive can be used to synchronize the host and GP-GPU arrays (or any portion of them).
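A sketch of a data region in C, under the same caveats as before: two compute regions share one data region, so `x` is copied to the GP-GPU once (`copyin`) and `y` is copied in and back out once (`copy`), rather than once per compute region. The inclusive `[0:n-1]` array-section bounds reflect our reading of PGI's C subarray notation (the later OpenACC standard uses start:length instead), so treat the exact clause spelling as an assumption to verify against the PGI documentation.

```c
/* Two kernels that share device data through one enclosing data region.
   On a non-PGI compiler the pragmas are ignored and both loops run on
   the host, giving the same result. */
void two_kernels(int n, const float *restrict x, float *restrict y)
{
#pragma acc data region copyin(x[0:n-1]) copy(y[0:n-1])
    {
        /* First compute region: uses x and y already on the device. */
#pragma acc region
        {
            for (int i = 0; i < n; ++i)
                y[i] = y[i] + x[i];
        }
        /* Second compute region: reuses y without another transfer. */
#pragma acc region
        {
            for (int i = 0; i < n; ++i)
                y[i] = 2.0f * y[i];
        }
    } /* y is copied back to the host when the data region ends */
}
```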

The reflected directive declares that the actual arguments bound to a procedure's dummy argument arrays must already have a visible copy on the GP-GPU at the point where the procedure is called. Finally, the device declarative clause declares that the listed variables or arrays are allocated only on the GP-GPU, which removes the need to first create an array on the host and then copy it to the GP-GPU. These new directives are explained with examples in the article "Optimizing Data Movement in the PGI Accelerator Programming Model".

One of the most distinctive aspects of the new data directives is support for device-resident data: data can now remain on the GPU between function calls and kernels. Keeping data on the GPU can substantially reduce data transfers and improve efficiency. From a programming standpoint, this makes many types of general-purpose GPU computation practical that previously were not, because of the cost of managing CPU/GPU data. For example, resident data can be reused (processed) by various parts of a program without being transferred again.
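The following plain-C model sketches the idea behind mirrored, device-resident data with partial updates. It is not the PGI API: `sim_array`, `sim_mirror`, `sim_update_device`, `sim_update_host`, `sim_add_one`, and `sim_free` are hypothetical names, and a second heap buffer stands in for GPU memory. The `bytes_moved` counter shows the payoff: the array crosses the simulated bus once, several "kernels" reuse the resident copy, and only the slice the host actually needs is copied back.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical model of a mirrored array: a host copy, a "device" copy
   (really just another host buffer), and a count of simulated traffic. */
typedef struct {
    size_t n;
    double *host;
    double *dev;
    size_t bytes_moved;
} sim_array;

/* Allocate both copies, as a mirrored array has. Returns 0 on success. */
int sim_mirror(sim_array *a, size_t n)
{
    a->n = n;
    a->bytes_moved = 0;
    a->host = calloc(n, sizeof(double));
    a->dev = calloc(n, sizeof(double));
    if (a->host == NULL || a->dev == NULL) {
        free(a->host);
        free(a->dev);
        return -1;
    }
    return 0;
}

/* Copy elements [lo, hi) host -> device, like updating a section. */
void sim_update_device(sim_array *a, size_t lo, size_t hi)
{
    memcpy(a->dev + lo, a->host + lo, (hi - lo) * sizeof(double));
    a->bytes_moved += (hi - lo) * sizeof(double);
}

/* Copy elements [lo, hi) device -> host. */
void sim_update_host(sim_array *a, size_t lo, size_t hi)
{
    memcpy(a->host + lo, a->dev + lo, (hi - lo) * sizeof(double));
    a->bytes_moved += (hi - lo) * sizeof(double);
}

/* A "kernel" that touches only the resident device copy: no transfer. */
void sim_add_one(sim_array *a)
{
    for (size_t i = 0; i < a->n; ++i)
        a->dev[i] += 1.0;
}

void sim_free(sim_array *a)
{
    free(a->host);
    free(a->dev);
}
```

In a small run, mirroring 1000 doubles, updating the device copy once, calling `sim_add_one` ten times, and then updating only elements 0 through 9 on the host moves 1010 doubles' worth of data in total; the other 990 host elements keep their old values until they are explicitly updated.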

The new data directives improve the efficiency of the PGI Accelerator model beyond previous versions. Adroit use of the data directives allows the compiler to manage and optimize the painstaking task of moving data to and from the GPU, and thus improves performance without major code revisions or the need to learn a new language.

Going Deeper

One of the difficulties facing users of GP-GPU and co-processor technologies is integration into the traditional HPC cluster environment. Many of the new programming models (CUDA, OpenCL) are somewhat orthogonal to the traditional Message Passing Interface (MPI) cluster models. That is, GPU languages assume a single server (possibly with multiple GP-GPUs) and cannot address multiple nodes. MPI, on the other hand, assumes a collection of distributed memory machines. While hybrid programming approaches are possible, application portability is often lost and may require a non-trivial amount of re-programming.

As presented, the PGI Accelerator Programming Model addresses this problem by working within a familiar HPC programming environment. Because the directives are comments in standard Fortran and C, the same source still compiles for the CPU, and an application's existing structure, including its MPI code, can stay in place. A powerful set of directives allows existing (and new) Fortran and C programs to use NVIDIA GP-GPUs with a small amount of programming effort. Users can start by experimenting with simple acc directives and then add optimizations for data movement between the host and the GP-GPU.

Finally, it's worth noting that the PGI Accelerator programming model is both complete and compact. Practically speaking, it provides a high-level framework for programming nearly any type of accelerator hardware, including NVIDIA GPUs, AMD APUs, Intel MIC (Many Integrated Cores), FPGAs, IBM Cell, and others. The model currently targets NVIDIA GPUs, but it's a relatively easy step for PGI to extend it to other targets. The high-level PGI model also has two other potentially important advantages for developers: it can preserve portability as hardware continues to evolve, and it can preserve existing x86 code bases.

More information and articles are available on the PGI website, including a white paper on the PGI Accelerator Programming Model for Fortran & C with more in-depth coverage. Trial versions are available so that users can easily start evaluating this technology.